On Touch Support for Drag and Drop Interfaces

The range of different devices used to access the web means we can never control how people will experience what we create – not only in terms of screen size, but also how they interact – touch or click?

As well as creating flowing layouts that adapt to smaller screen sizes, we can’t treat touch interactions as an afterthought – they’re as essential as mouse and keyboard input:

Web developers and designers have smartly decided to simply embrace all forms of input: touch, mouse, and keyboard for starters. While this approach certainly acknowledges the uncertainty of the Web, I wonder how sustainable it is when voice, 3D gestures, biometrics, device motion, and more are factored in.

To continue from my previous post on building a drag and drop UI, this article studies the differences in experience between touch and mouse input, especially (but not exclusively) for drag and drop interactions. It’ll cover:

UI states for touch vs mouse

Implementing touch interactions with the Web API

Embracing alternative input devices and accessibility

Everything in this article comes from my experience making a real app. As well as the theory, it covers how I’ve actually implemented things.

Mouse Vs Finger

Mouse pointers are most widely used on laptops and desktop devices, whereas touch interfaces are common on both phones and tablets. However, touch isn’t limited to smaller screens – touch screens can scale up to, and beyond, the size of desktop monitors, as highlighted by Josh Clark:

When any desktop machine could have a touch interface, we have to proceed as if they all do.

Josh Clark, uie.com

Therefore, even if we’re designing for desktop, it’s still important to consider touch interactions. Here’s a summary of the main differences between mouse and finger, adapted from Jakob Nielsen:

| | Mouse | Finger |
| --- | --- | --- |
| Precision | High | Low |
| Points | 1 mouse cursor | 1 usually, 2-3 possible |
| Visible pointer | Yes | No |
| Obscures view | No | Yes |
| Signal states | 3: hover, mouse-down, mouse-up | 2: touch-down, touch-up |
| Gestures | No | Yes |

See the full table here. The table shows many differences, but depending on the context you’re designing for, some will have more impact than others.

In my case of building a drag and drop interaction, these are the main changes I had to consider when adding touch support:

Fingers obscure the view

As seen above, a mouse cursor fits easily inside the touch point, whereas the same target can’t be seen under a finger. That’s because a fingertip covers a larger area than a mouse pointer, and with touch there’s no visible pointer at all: the finger itself obscures part of the screen.

Touch targets need to be big enough for people’s fingers. Apple’s Human Interface Guidelines suggested a minimum target size of 44 pixels by 44 pixels, and as highlighted by UX Mag the magic number for a touch target is 10mm:

For this reason, it’s important to consider the size of your touch target, and if it makes sense for the context of your app. You can do this by observing how people use your app.
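To compare the two figures above, a rough conversion helps. CSS defines 1 inch as both 96 px and 25.4 mm, so millimetres map directly onto CSS pixels. The helper names below (`mmToCssPx`, `meetsMinimumTarget`) are hypothetical, and the sketch assumes the device maps CSS pixels to physical size reasonably well, which isn't guaranteed everywhere.

```javascript
// CSS fixes 1in = 96px = 25.4mm, so millimetres convert to CSS pixels directly.
const CSS_PX_PER_MM = 96 / 25.4;

function mmToCssPx(mm) {
  return mm * CSS_PX_PER_MM;
}

// Hypothetical check: is a target at least `minPx` wide and tall?
// The 44px default follows the 44 x 44 guideline quoted above.
function meetsMinimumTarget(width, height, minPx = 44) {
  return width >= minPx && height >= minPx;
}

console.log(mmToCssPx(10).toFixed(1)); // "37.8", so 10mm is a little under 44px
```

In a browser, the width and height could come from `element.getBoundingClientRect()`. Treat the result as a prompt to test with real people rather than a pass/fail rule.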

After watching someone struggle to drag and drop a component using the drag handle, I tried changing the touchable surface to the entire component. It worked, but it also raised more questions:

How do you disable/enable the touch surface?

Should the touch surface be the entire element?

It’s also important to give some form of feedback that can indicate a draggable item is selected. Above, the draggable target has been rotated slightly when active, but for smaller elements like buttons, the size can be increased underneath the finger. Sort of like this (but yeah, less fancy):

This would not only simulate elevation, but also improve usability, as the element remains visible around the edge of your finger.

Touch vs Click

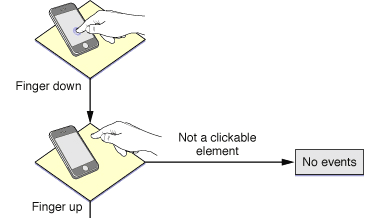

Apart from surface area, it might seem that there isn’t much difference between finger touch events and mouse click events, especially for ‘one-finger’ gestures. For instance, a mouse click is comparable to the finger-down, finger-up interaction with a single fingertip:

However, differences arise with more complex interactions, such as moving items around a page, or performing a drag and drop. This is because a touch interface can trigger multiple interactions at the same time, which wouldn’t normally happen with a mouse. Consider the difference between dragging and scrolling when using a finger:

Drag vs. Scroll

Let’s first consider a scroll event. A key distinction when navigating a page using touch input is that there’s no scroll wheel, so the finger does all the work:

Finger down: First, the user has to touch the screen to scroll.

Finger move: Finger movement is then used to move the page.

At this point, you might think that the same interaction (finger down, finger move) is used to perform a drag and drop. And you’d be right – but the subtle difference of having no scroll wheel can become a large obstacle for drag and drop interactions. For instance, upon first contact with the screen, how do you know whether the user intends to scroll the page, or select and pick up a draggable element?

Both interactions look almost identical:

👉 Drag and Drop: Finger down to select element > finger move to drag the element > finger stop and finger up to drop the element

📜 Scroll: Finger down > Finger move to scroll > Finger stop to stop scrolling

This means both ‘drag’ and ‘scroll’ events can occur at the same time, which makes a successful drag and drop impossible. Because the page scrolls along with the finger, the draggable item effectively can’t change its position on the vertical axis.

Therefore, we need a way to distinguish between a scroll and a drag. The solution that worked for me is detecting touch and hold:

Touch + Hold != Scroll

A touch and hold gesture can be pictured like this flow diagram from the Safari Web Content Guide:

The length of time a finger is held down in one position on the screen can help detect when the intention is to select an element, as opposed to scroll the page.

Another important consideration is that you must distinguish among a tap, a swiping gesture (such as scrolling), and an intentional “grab” by using a timing delay of a few milliseconds, and providing clear feedback that the object has been grabbed.

Let’s see how using touch duration can prevent the page scrolling when we want to perform a drag and drop interaction:

Using Touch Duration to Determine Actions

When determining if a user wants to scroll or select an element, a key contrast between touch and mouse is that a mouse click immediately makes an element active, whereas a touch oftentimes incurs a delay:

When a user taps on an element in a web page on a mobile device, pages that haven’t been designed for mobile interaction have a delay of at least 300 milliseconds between the touchstart event and the processing of mouse events (mousedown)

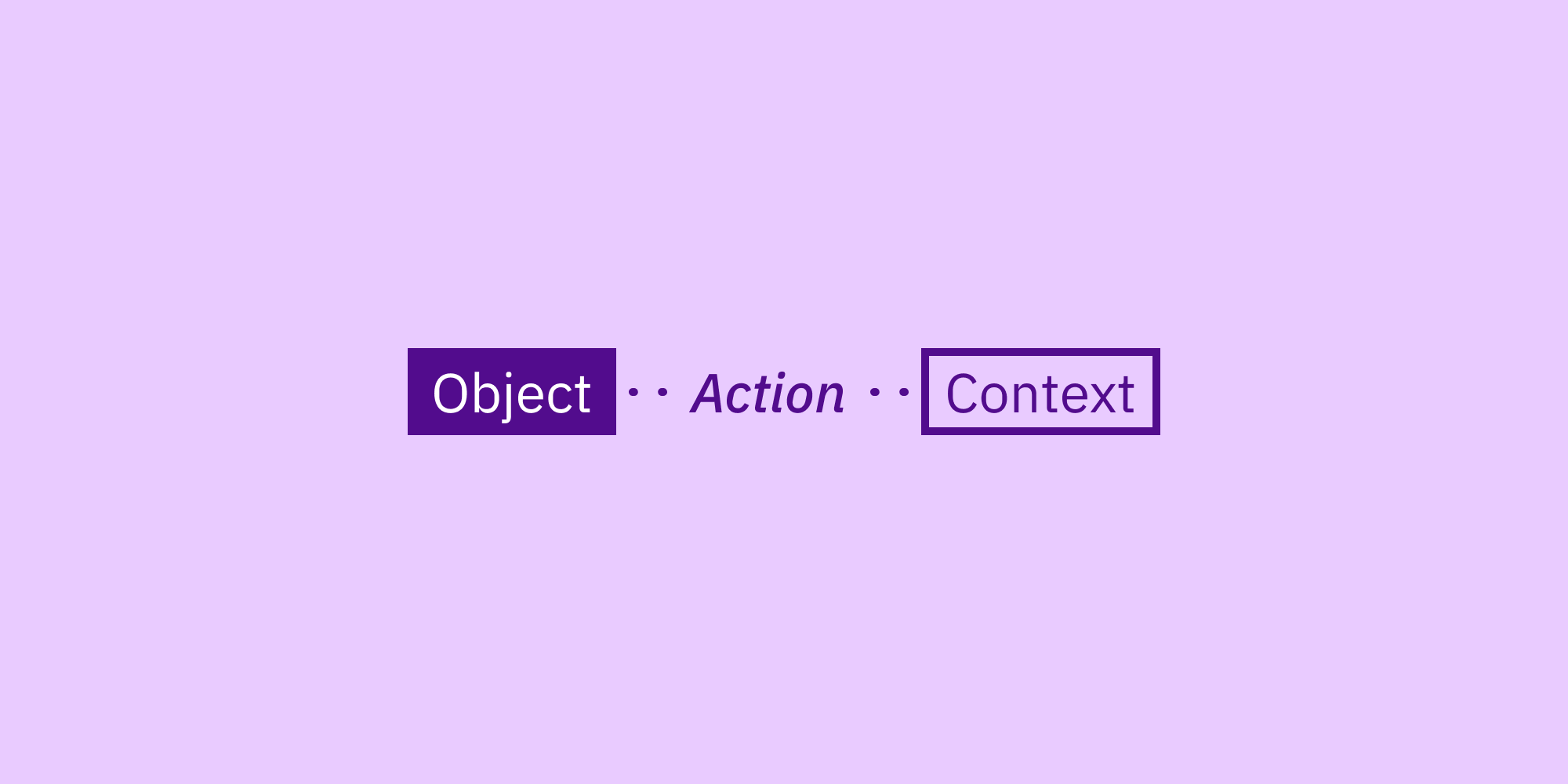

Therefore, the type of interaction a user wants to perform can be determined fairly accurately using touch duration:

Tap: If the touch duration is less than 1 second, we can consider it the equivalent of a mouse click.

Selection: If the touch lasts longer than 1 second and stays in the same place, we can guess something is being selected.

Scroll: If a swipe gesture is detected, we can assume the user intends to scroll an element or the page.
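The three rules above can be sketched as a small function. This is only an illustration: `classifyTouch` is a hypothetical name, the 10px movement tolerance is an assumption to absorb finger jitter, and treating a touch of exactly one second as a selection is an arbitrary choice.

```javascript
// 1000ms comes from the list above; the 10px tolerance is an assumption.
const HOLD_MS = 1000;
const MOVE_TOLERANCE_PX = 10;

// Classify a finished touch from how long it lasted and how far it travelled.
function classifyTouch({ durationMs, distancePx }) {
  if (distancePx > MOVE_TOLERANCE_PX) return 'scroll'; // finger travelled: treat as a swipe
  if (durationMs >= HOLD_MS) return 'selection';       // held in (roughly) one place
  return 'tap';                                        // short, stationary touch
}

console.log(classifyTouch({ durationMs: 120, distancePx: 2 })); // "tap"
```

Both thresholds would likely need tuning against real usage. A full second can feel slow for picking something up.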

As an example, if we again consider a drag and drop event on a touch screen, it’s clear that detecting touch duration becomes very useful. A finger-down event could mean either the start of a scroll or the activation of an element, so adding timing to the equation gives us another signal.
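For drag and drop, the decision has to be made while the finger is still down, so that feedback can appear as soon as an item is grabbed. Here is a minimal sketch of a touch-and-hold tracker. It is not the implementation from my app: `createHoldTracker`, its options, and the state names are all hypothetical, and the timer functions are injectable purely so the logic can be exercised without waiting.

```javascript
// Hypothetical tracker: names and defaults are illustrative only.
// holdMs follows the one-second threshold above; tolerancePx is an assumption.
function createHoldTracker({
  holdMs = 1000,
  tolerancePx = 10,
  onGrab = () => {},
  setTimer = setTimeout,
  clearTimer = clearTimeout,
} = {}) {
  let state = 'idle'; // 'idle' | 'pending' | 'grabbed' | 'scrolling'
  let startX = 0;
  let startY = 0;
  let timer = null;

  return {
    get state() { return state; },
    // Finger down: start waiting to see whether this becomes a hold.
    start(x, y) {
      state = 'pending';
      startX = x;
      startY = y;
      timer = setTimer(() => {
        if (state === 'pending') { state = 'grabbed'; onGrab(); }
      }, holdMs);
    },
    // Finger move: moving too far before the hold completes means a scroll.
    move(x, y) {
      const moved = Math.hypot(x - startX, y - startY) > tolerancePx;
      if (state === 'pending' && moved) {
        clearTimer(timer);
        state = 'scrolling';
      }
      return state;
    },
    // Finger up: report how the touch ended ('pending' here means a tap).
    end() {
      clearTimer(timer);
      const finalState = state;
      state = 'idle';
      return finalState;
    },
  };
}
```

In a browser, `start`, `move` and `end` would be called from `touchstart`, `touchmove` and `touchend`. While the state is `'grabbed'`, calling `event.preventDefault()` inside a `touchmove` listener registered with `{ passive: false }` should stop the page from scrolling under the dragged item.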

Press vs. Click and the Web API

If your mind isn’t already boggled enough, read on for the difference between a press and a click. As we’ve covered, a mouse click and a touch event are different – and therefore, engineers need a way to distinguish between the two when building an app.

Luckily, browser technology comes to the rescue. A lot of it is taken care of for us if we leverage the right Web API events. Even for non-technical folk, it’s useful to be aware of these to understand different possibilities of touch input:

| Event Name | Description |
| --- | --- |
| touchstart | Triggers when the user makes contact with the touch surface and creates a touch point inside the element the event is bound to. |
| touchmove | Triggers when the user moves the touch point across the touch surface. |
| touchend | Triggers when the user removes a touch point from the surface. It fires regardless of whether the touch point is removed while inside the bound-to element, or outside, such as if the user’s finger slides out of the element first or even off the edge of the screen. |
| touchenter | Triggers when the touch point enters the bound-to element. This event does not bubble. |
| touchleave | Triggers when the touch point leaves the bound-to element. This event does not bubble. |
| touchcancel | Triggers when the touch point no longer registers on the touch surface. This can occur if the user has moved the touch point outside the browser UI or into a plugin, for example, or if an alert modal pops up. |

Note that touchenter and touchleave were proposed in early drafts but are not part of the current Touch Events specification, and browsers generally don’t implement them.

In my case of building a drag and drop interface, these ‘touch detectors’ helped solve the issue in the previous section: what’s a swipe and what’s a press?
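One practical detail when working with these events is reading the finger position. Touch events carry coordinates on `touches` and `changedTouches` rather than on the event itself, and on `touchend` the lifted finger only appears in `changedTouches`. A small helper (the name `getPoint` is hypothetical) can normalise mouse and touch events into one shape:

```javascript
// Returns { x, y } in viewport coordinates for a mouse or touch event.
function getPoint(event) {
  // On touchend the lifted finger is no longer in `touches`,
  // so fall back to `changedTouches`.
  const touch =
    (event.touches && event.touches[0]) ||
    (event.changedTouches && event.changedTouches[0]);
  const source = touch || event; // mouse events carry clientX/clientY directly
  return { x: source.clientX, y: source.clientY };
}
```

This only looks at the first touch point, which is enough for a one-finger drag but ignores multi-touch gestures.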

I won’t go into detail, but here is some example code from my app, Letter, that handles both touch and mouse events. You can see there are attributes for onMouse events, and also for onTouch events:

    <div className="draggable-item">
      <div
        onMouseDown={this.mouseDown}
        onMouseUp={this.mouseUp}
        onTouchEnd={this.touchEnd}
        onTouchStart={this.touchStart}
      >
        {/* Drag and drop element content here */}
      </div>
    </div>
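One thing to watch for with markup like this: after a tap, browsers typically dispatch compatibility mouse events (`mousedown`, `mouseup`, `click`) following the touch events, so both sets of handlers can run for a single tap. Calling `preventDefault()` on the touch event is one way to suppress them. Another is to ignore mouse events that arrive shortly after a touch, sketched below. `createInputGuard` and its 500ms window are assumptions for illustration, not code from Letter.

```javascript
// Hypothetical guard: remembers the last touch so that mouse events
// arriving within `windowMs` of it can be skipped as emulated.
function createInputGuard(windowMs = 500) {
  let lastTouchAt = -Infinity;
  return {
    // Call from touch handlers (for example touchStart and touchEnd).
    noteTouch(now = Date.now()) {
      lastTouchAt = now;
    },
    // Call at the top of mouse handlers; true means "skip this event".
    isEmulatedMouse(now = Date.now()) {
      return now - lastTouchAt < windowMs;
    },
  };
}
```

Inside a handler such as `mouseDown`, this would look like `if (guard.isEmulatedMouse()) return;` before any drag logic runs.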

Additional Device Feedback, Voice Interactions, and More Input Devices

Above we’ve gone through some of the big differences between touch and mouse interaction across devices, but you can keep going further, such as by leveraging what the device hardware has to offer.

For example, NNGroup highlight the use of haptics to provide better feedback for drag and drop interactions. Many phones have haptic feedback for touch interaction, as do some trackpad input devices:

A haptic “bump” can indicate that an object has been grabbed, and another one can indicate that an object has been dragged over a drop zone.

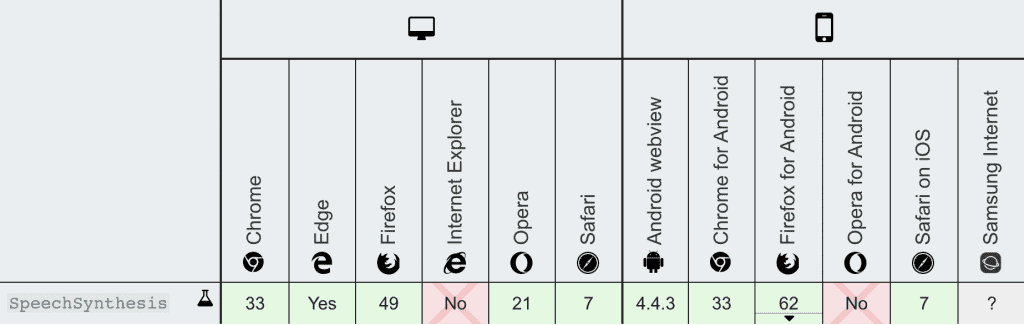
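On the web, the closest equivalent is the Vibration API, exposed as `navigator.vibrate()`. Support is uneven (Safari doesn't implement it, for example), so any use needs feature detection. A minimal sketch, where `hapticBump` and the 20ms duration are hypothetical choices:

```javascript
// Tries to trigger a short vibration; returns false where unsupported.
// `nav` is injectable so the function can be tried outside a browser.
function hapticBump(nav = globalThis.navigator, durationMs = 20) {
  if (!nav || typeof nav.vibrate !== 'function') return false;
  return nav.vibrate(durationMs);
}
```

This could be called when an item is grabbed and again when it passes over a drop zone, matching the two “bumps” described above. Because many devices will silently do nothing, the visual feedback still has to stand on its own.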

Furthermore, voice interactions are also making their way to web browsers, with support for Speech Synthesis already available in the most popular ones:

Speech Recognition for actual input is a bit further behind synthesis, but it’s coming too. And all of these can be considered, even with drag and drop, as we’ve previously seen:
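As a rough illustration of the synthesis side, the Web Speech API exposes `speechSynthesis.speak()` and `SpeechSynthesisUtterance`. The sketch below speaks a short message, such as confirming that an item was picked up. The `announce` helper and the injectable `env` parameter are hypothetical.

```javascript
// Speaks `text` if speech synthesis is available; returns whether it tried.
// `env` defaults to the global object and is injectable for testing.
function announce(text, env = globalThis) {
  const { speechSynthesis, SpeechSynthesisUtterance } = env;
  if (!speechSynthesis || typeof SpeechSynthesisUtterance !== 'function') {
    return false;
  }
  speechSynthesis.speak(new SpeechSynthesisUtterance(text));
  return true;
}
```

For example, `announce('Item picked up')` could run alongside the visual feedback when a drag starts.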

So in all, as well as designing and building responsively for different screen sizes, we must also be aware of the different methods of user input, and make interfaces accessible to people of different abilities.
