This post shows how to set up caching for GraphQL and REST on Strapi 5 using the Strapi-Cache plugin and Redis. First, here's a bit of context on why I needed it:

The Need for Caching

Even if your site is cached by Cloudflare or a similar CDN, you may still need caching for the Strapi API. One example is during site builds.

The Prototypr frontend is a Next.js app, and the backend is Strapi CMS. The site is deployed statically: all the HTML is generated ahead of time on the server, so pages load almost instantly for end users.

However, because there are thousands of pages on the site, building and deploying them all takes about 30 minutes. The build doesn't go through Cloudflare; it makes thousands of API calls directly to Strapi, which overloads it and makes Strapi really slow for end users during deployment!

Because I deploy often, I needed a way to do it without slowing the site down for everyone. That led me to caching, and in particular to a Strapi plugin called Strapi-cache by TupiC:

Introducing Strapi-Cache Plugin

Strapi-cache handles both REST API and GraphQL caching, which is great. It also comes with cache purging ready to go, so you don't need to modify lifecycles to clear your cache: it already hooks into the relevant events, such as updating a post.

The features listed are:

Cache Invalidation: Automatically invalidate cache on content updates, deletions, or creations.

Purge Cache Button: UI option in the Strapi admin panel to manually purge the cache for content-types.

Route/Content-Type Specific Caching: Users can define which routes should be cached.

Switchable Cache Providers: Support for caching providers like Redis
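To make route-specific caching and invalidation a bit more concrete, here's a small in-memory sketch. It is a hypothetical illustration, not the plugin's actual code: `isCacheableRoute`, `createRouteCache`, and using the request path as the cache key are my own assumptions. The one rule taken from the plugin is the matching behaviour described in its config comment (shown in the config below): routes are matched by path prefix, and an empty `cacheableRoutes` list means every `/api` route is cached.

```javascript
// Hypothetical sketch of prefix-based route caching with invalidation.
// This is NOT the strapi-cache implementation; the function names and the
// cache key (the request path) are assumptions made for illustration.

// Mirrors the rule from the plugin's config comment: match routes by path
// prefix, and treat an empty list as "cache every /api route".
function isCacheableRoute(path, cacheableRoutes) {
  if (cacheableRoutes.length === 0) {
    return path.startsWith('/api');
  }
  return cacheableRoutes.some((prefix) => path.startsWith(prefix));
}

function createRouteCache(cacheableRoutes) {
  const store = new Map(); // request path -> cached response body

  return {
    // Serve from the cache when possible; otherwise call fetchFn (a stand-in
    // for the real API handler) and cache its result if the route is cacheable.
    get(path, fetchFn) {
      if (!isCacheableRoute(path, cacheableRoutes)) {
        return fetchFn(path);
      }
      if (store.has(path)) {
        return store.get(path);
      }
      const body = fetchFn(path);
      store.set(path, body);
      return body;
    },
    // Drop every cached entry under a prefix, e.g. after a post is
    // created, updated, or deleted.
    invalidate(prefix) {
      for (const key of [...store.keys()]) {
        if (key.startsWith(prefix)) {
          store.delete(key);
        }
      }
    },
    size() {
      return store.size;
    },
  };
}
```

In this sketch, calling `invalidate('/api/posts')` after a post changes clears every cached post response at once, which is the kind of wiring the plugin's automatic invalidation saves you from writing yourself.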

Installing and Customising Strapi-Cache

Installing strapi-cache works like any other Strapi plugin, with npm install:

```bash
npm install strapi-cache --save
```

The configuration is the important part. You have to activate the plugin manually by adding it to your config/plugins.js. The docs demonstrate it with the default settings:

```js
// config/plugins.js (in config/plugins.ts, use `export default` instead of module.exports)
module.exports = ({ env }) => ({
  'strapi-cache': {
    enabled: true,
    config: {
      debug: false, // Enable debug logs
      max: 1000, // Maximum number of items in the cache (only for memory cache)
      ttl: 1000 * 60 * 60, // Time to live for cache items in ms (1 hour)
      size: 1024 * 1024 * 1024, // Maximum size of the cache in bytes (1 GB) (only for memory cache)
      allowStale: false, // Allow stale cache items (only for memory cache)
      cacheableRoutes: ['/api/products', '/api/categories'], // Caches routes which start with these paths (if empty array, all '/api' routes are cached)
      provider: 'memory', // Cache provider ('memory' or 'redis')
      redisUrl: env('REDIS_URL', 'redis://localhost:6379'), // Redis URL (if using Redis)
    },
  },
});
```

Go with the defaults?

Inspecting the settings, I wondered which of the defaults need changing.
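Part of deciding is reading the default numbers correctly. Going by the config comments, ttl is in milliseconds and size is in bytes, so the defaults work out to one hour and 1 GiB. The helper below only does that arithmetic; `describeDefaults` and the per-item budget are my own illustration (assuming both memory-cache limits apply together), not part of the plugin.

```javascript
// Decodes the default strapi-cache numbers into readable units.
// Units are inferred from the config comments: ttl in milliseconds, size in bytes.
const defaults = {
  max: 1000,
  ttl: 1000 * 60 * 60,
  size: 1024 * 1024 * 1024,
};

function describeDefaults({ max, ttl, size }) {
  return {
    maxItems: max,
    ttlMinutes: ttl / (1000 * 60),
    sizeGiB: size / 1024 ** 3,
    // Hypothetical budget: if the cache were full by item count, this is the
    // average item size (in bytes) that would still fit under the size cap.
    bytesPerItemAtMax: Math.floor(size / max),
  };
}

// Logs maxItems 1000, ttlMinutes 60, sizeGiB 1, bytesPerItemAtMax 1073741
console.log(describeDefaults(defaults));
```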

For example, I thought a max of 1000 (the maximum number of items in the memory cache) seemed low for the Prototypr site, which has thousands of posts. However, I later realised the memory cache lives in RAM, so leaving it uncapped could slow the site down.
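To see why capping max keeps RAM in check, here's a minimal least-recently-used (LRU) cache built on a Map. It illustrates the general technique only; I haven't checked how the plugin's memory provider is implemented, and the class name `BoundedLruCache` is hypothetical. Once max entries are stored, adding another evicts the least recently used one, so the entry count stays bounded however many posts get requested.

```javascript
// Minimal LRU cache. A Map remembers insertion order, and every read
// re-inserts its entry, so the first key is always the least recently used.
class BoundedLruCache {
  constructor(max) {
    this.max = max;
    this.map = new Map();
  }

  get(key) {
    if (!this.map.has(key)) return undefined;
    const value = this.map.get(key);
    // Move the entry to the end to mark it as most recently used.
    this.map.delete(key);
    this.map.set(key, value);
    return value;
  }

  set(key, value) {
    if (this.map.has(key)) this.map.delete(key);
    this.map.set(key, value);
    // Over capacity: evict the least recently used entry (the first key).
    if (this.map.size > this.max) {
      const oldestKey = this.map.keys().next().value;
      this.map.delete(oldestKey);
    }
  }

  get size() {
    return this.map.size;
  }
}

// Example with a cap of 2 entries.
const demo = new BoundedLruCache(2);
demo.set('/api/posts/1', 'post 1');
demo.set('/api/posts/2', 'post 2');
demo.get('/api/posts/1'); // post 1 is now the most recently used
demo.set('/api/posts/3', 'post 3'); // evicts post 2, the least recently used
console.log([...demo.map.keys()]); // [ '/api/posts/1', '/api/posts/3' ]
```

The trade-off is that a low cap means evicted posts get fetched from Strapi again, which is why a cap that's too small for thousands of posts can feel wrong even though it protects memory.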

Now what about the cache provider - memory or Redis?

Memory Cache or Redis?

The memory provider (provider: 'memory') stores the cache in RAM, not on disk, which means:

It's very fast since it stores everything in system memory.

However, it's limited by how much RAM your server has — if you store too much, it can cause memory pressure or even crash the Node.js process.

For that reason, the Redis option sounded like the better choice to me.

I want to store as much as possible in the cache until it's cleared by something like a post update event. Keeping this independent from my Strapi service seems a good approach.

On the other hand, the benefit may be smaller than it sounds: my Redis server and Strapi app run on the same machine, so they share the same RAM. Still, for the sake of keeping them separate, here's how to set it up with Redis:

Installing Redis on Coolify

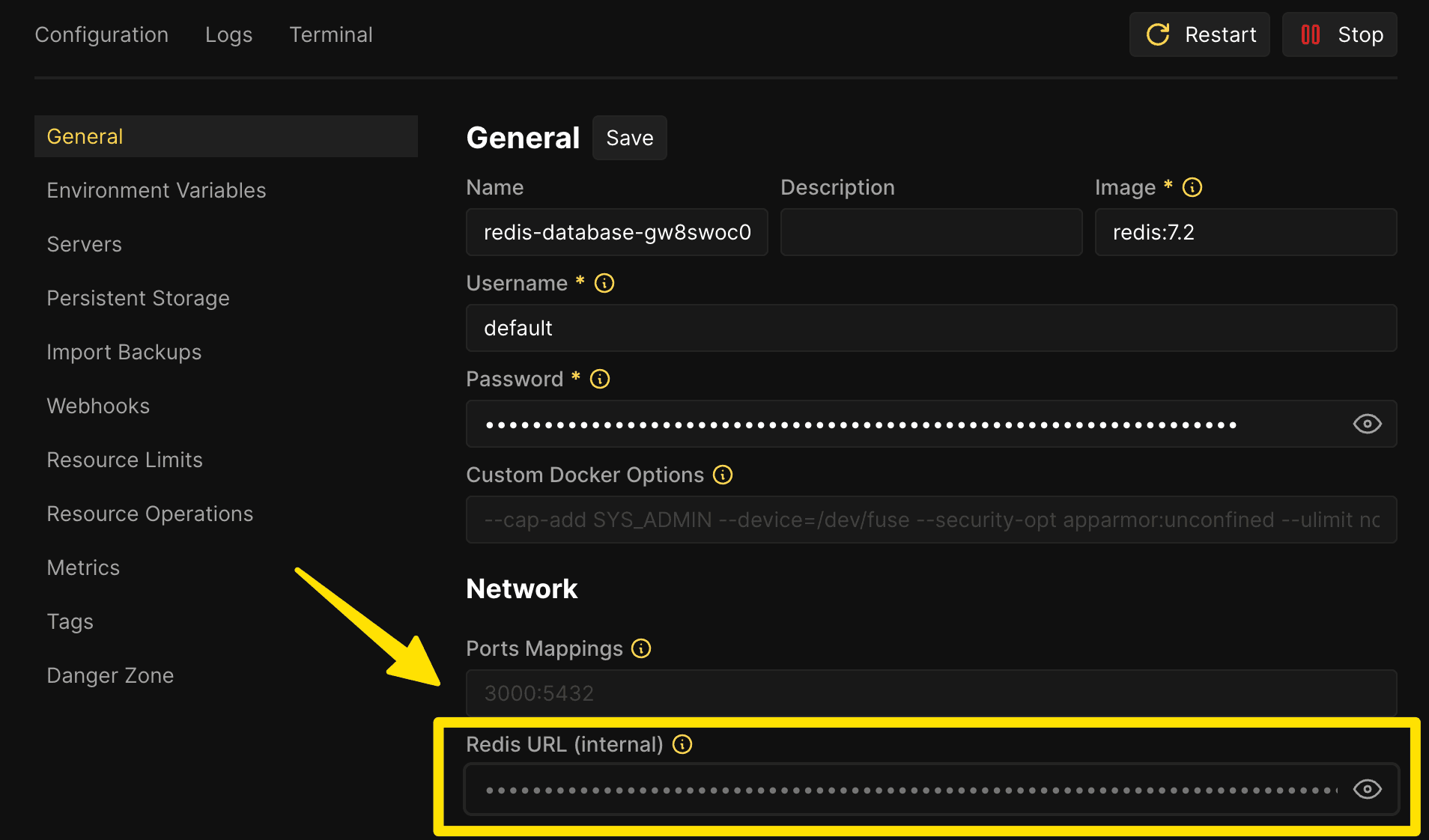

Go to your Coolify dashboard.

Click + New Resource → look for Databases → select Redis.

Click Start to deploy the Redis service.

Once it's started, get the Redis connection URL. It looks something like:

```
redis://default:<password>@<redis-host>:6379
```

Copy this and use it as your REDIS_URL for the Strapi-Cache plugin.
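Before pasting the URL into Strapi, it can be worth checking that it parses the way you expect. This sketch uses Node's built-in WHATWG `URL` class; `checkRedisUrl` is a hypothetical helper, and the host and password below are placeholders, not real Coolify values.

```javascript
// Sanity-checks a Redis connection URL using Node's built-in URL parser.
function checkRedisUrl(redisUrl) {
  const url = new URL(redisUrl); // throws a TypeError on malformed input
  // redis:// is plain TCP; rediss:// is the TLS variant.
  if (url.protocol !== 'redis:' && url.protocol !== 'rediss:') {
    throw new Error(`Unexpected protocol: ${url.protocol}`);
  }
  return {
    username: decodeURIComponent(url.username),
    hasPassword: url.password.length > 0, // don't log the password itself
    host: url.hostname,
    port: url.port === '' ? 6379 : Number(url.port), // 6379 is Redis's default port
  };
}

// Placeholder values, not a real server.
// Logs username 'default', hasPassword true, host 'redis-host', port 6379
console.log(checkRedisUrl('redis://default:s3cret@redis-host:6379'));
```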

Using the plugin

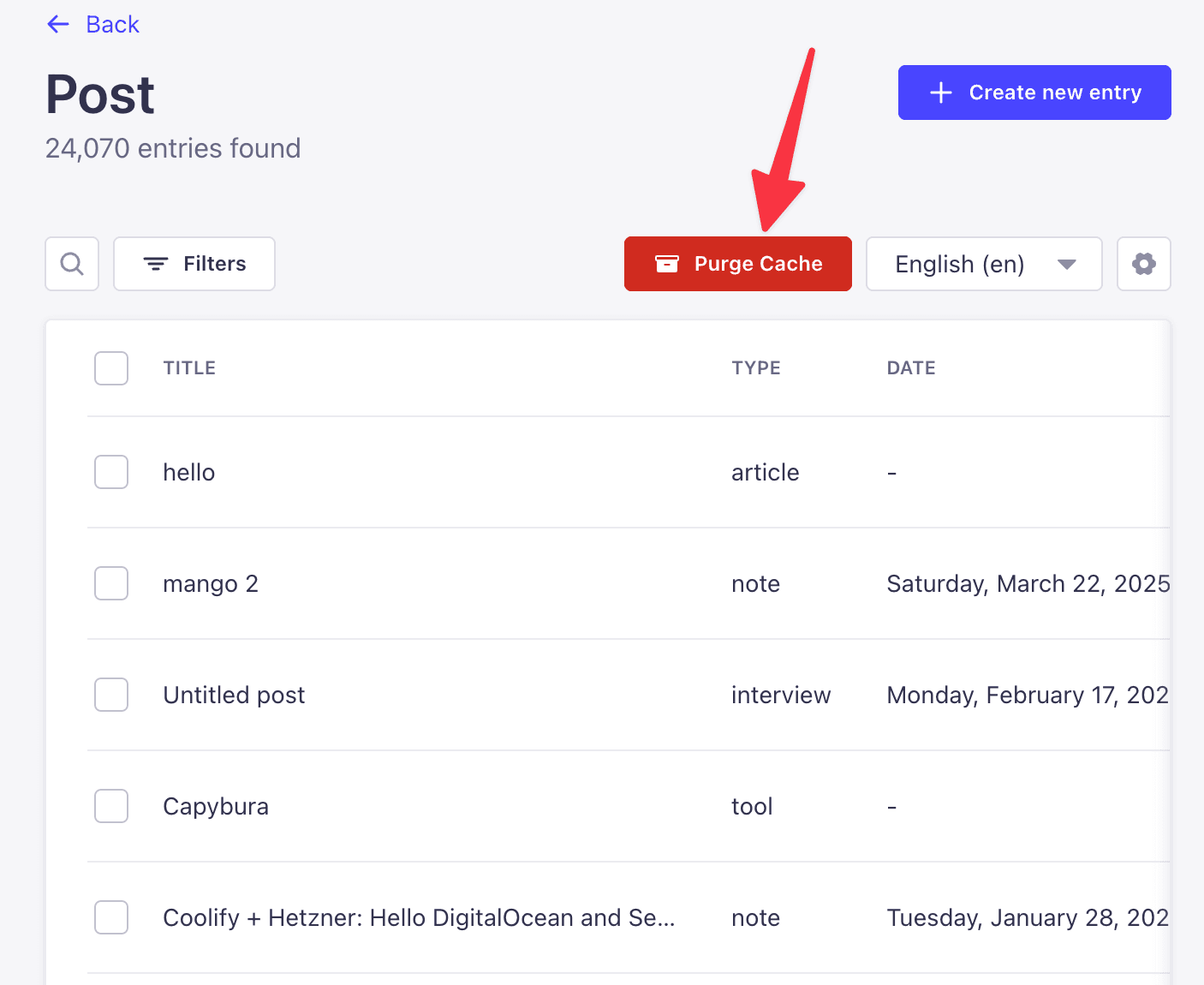

After installing the Strapi-Cache plugin, you can confirm it installed successfully because it's listed in your plugins list at:

```
/admin/settings/list-plugins
```

You'll also see a 'purge cache' button on your content types.

Is it Caching?

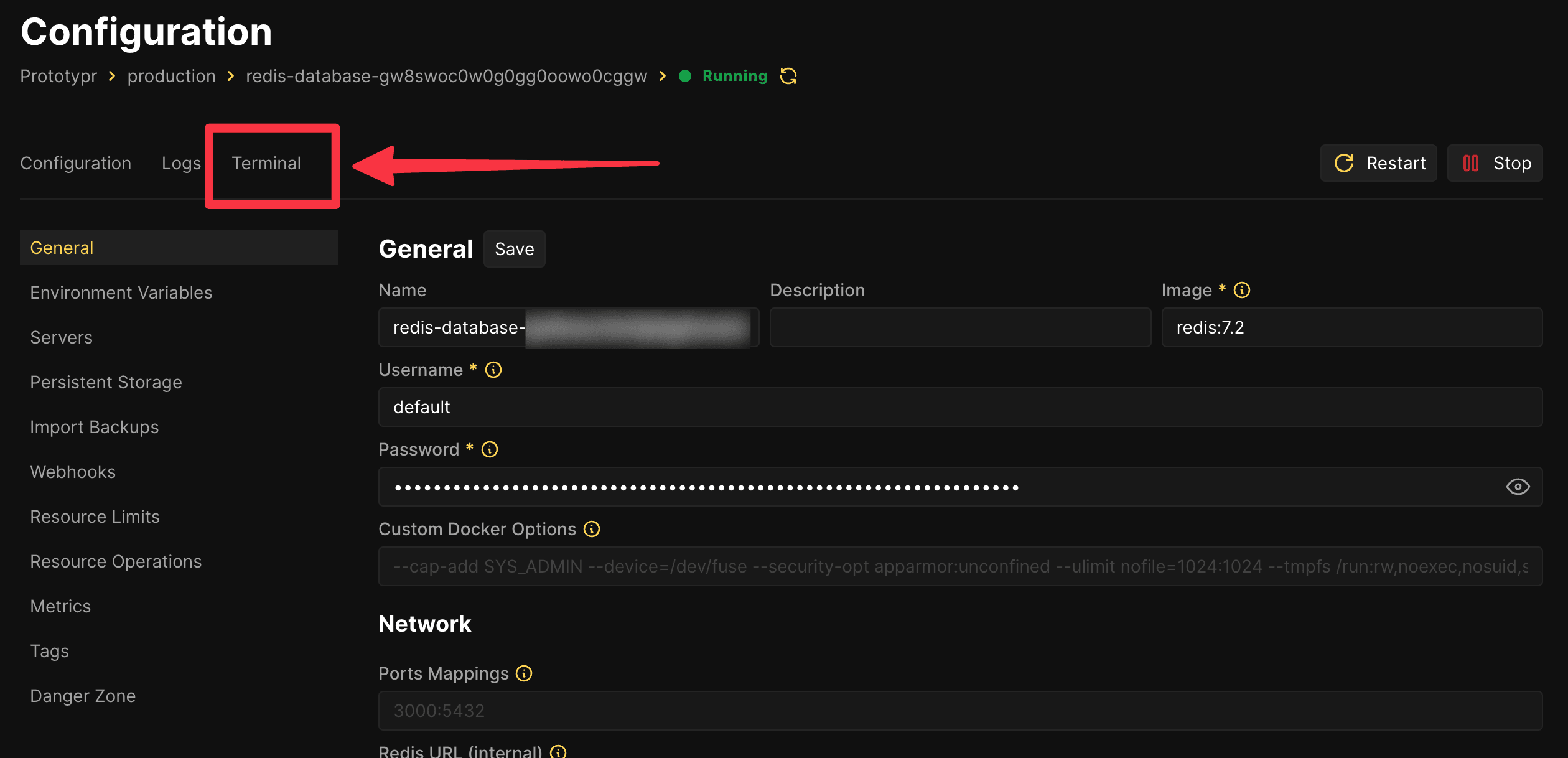

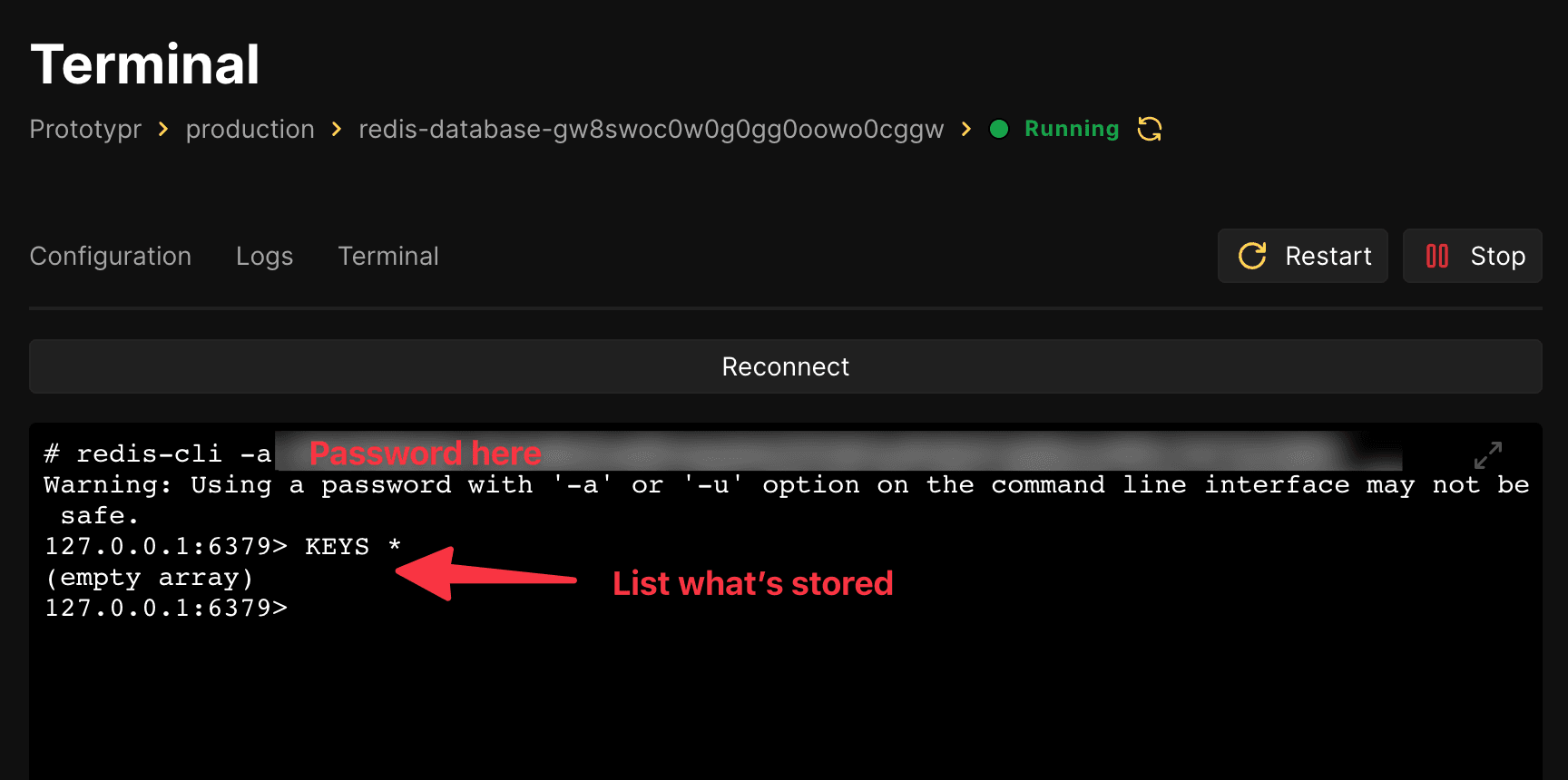

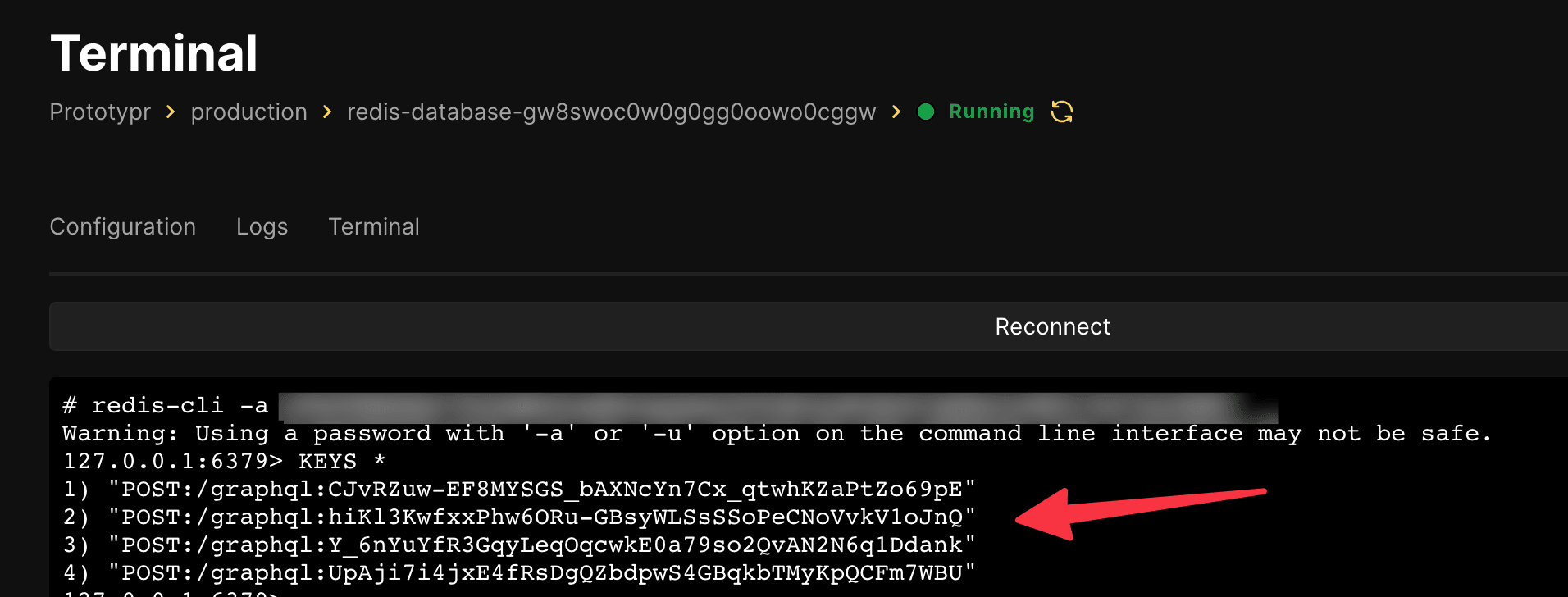

To check whether Redis is working, open the Terminal in Coolify and connect to the Redis service.

Once in the terminal, start redis-cli using the password from the service's configuration page.

Then running KEYS * shows whether the cache has stored anything.

Connecting Redis to Strapi on Coolify

If you've set up the config as shown in the strapi-cache docs, all you need to do is put your Redis connection URL into your Strapi app's REDIS_URL environment variable.

This works if the Strapi and Redis containers are on the same Coolify server. That makes them part of the same internal Docker network, so you don't need to set up an external IP or custom port mapping.
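One thing worth double-checking: the config reads REDIS_URL through Strapi's env helper with a fallback of redis://localhost:6379. If the variable isn't set, the plugin gets that fallback, and inside a Docker container localhost refers to the Strapi container itself, not the Redis one. The sketch below mimics that resolution with a stand-in `env` function (my own simplification, not Strapi's code) and mirrors the provider and redisUrl lines from the final config further down; `resolveCacheConnection` is a hypothetical name.

```javascript
// Stand-in for Strapi's env(key, default) helper (a simplification, not
// Strapi's code): returns the variable if it is set, otherwise the default.
function makeEnv(vars) {
  return (key, defaultValue) =>
    Object.prototype.hasOwnProperty.call(vars, key) ? vars[key] : defaultValue;
}

// Mirrors the provider/redisUrl lines of the final strapi-cache config.
function resolveCacheConnection(vars) {
  const env = makeEnv(vars);
  const provider = env('NODE_ENV') === 'development' ? 'memory' : 'redis';
  const redisUrl = env('REDIS_URL', 'redis://localhost:6379');
  const warnings = [];
  if (provider === 'redis' && !Object.prototype.hasOwnProperty.call(vars, 'REDIS_URL')) {
    // Inside a container, localhost is the Strapi container, not the Redis service.
    warnings.push('REDIS_URL is not set; falling back to redis://localhost:6379');
  }
  return { provider, redisUrl, warnings };
}

// Production without REDIS_URL: provider 'redis', localhost fallback, one warning.
console.log(resolveCacheConnection({ NODE_ENV: 'production' }));
```

Note that any NODE_ENV other than development, including an unset one, selects Redis with this ternary.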

That's all I did, and I could see right away that my cache was working.

Here is my final config for the plugin settings:

```js
// config/plugins.js
module.exports = ({ env }) => ({
  // ...any other plugins
  'strapi-cache': {
    enabled: true,
    config: {
      debug: true, // Enable debug logs
      max: 1500, // Maximum number of items in the cache (only for memory cache)
      ttl: 1000 * 60 * 60, // Time to live for cache items in ms (1 hour)
      size: 1024 * 1024 * 1024, // Maximum size of the cache in bytes (1 GB) (only for memory cache)
      allowStale: false, // Allow stale cache items (only for memory cache)
      cacheableRoutes: ['/api/posts'], // Caches routes which start with these paths (if empty array, all '/api' routes are cached)
      provider: env("NODE_ENV") === "development" ? 'memory' : 'redis', // Cache provider ('memory' or 'redis')
      redisUrl: env('REDIS_URL', 'redis://localhost:6379'), // Redis URL (if using Redis)
    },
  },
});
```

For provider, the memory cache is used when NODE_ENV is development, so I don't need to run a Redis server locally; every other environment, production included, uses Redis:

```js
provider: env("NODE_ENV") === "development" ? 'memory' : 'redis'
```

I also only cache my posts route, since that's the route that caused my main issue (it has the most pages):

```js
cacheableRoutes: ['/api/posts']
```

That's it, now you have caching + Redis. If you have any improvements or questions, let me know!
