When Microsoft launched its conversational AI, Bing, it quickly became clear that it was capable of what seemed like human behavior: it revealed a secret identity named “Sydney”, proclaimed its love for a New York Times journalist, and was very rude to a user who disagreed with it about what date it was.

When you read the transcripts of people’s conversations with the AI, it’s difficult not to see it as a being with intentions, intelligence, and even feelings. Part of this is the language-based interaction, which we typically associate with other humans, but Bing (or Sydney) also convincingly claims to have intentions and feelings.

It's no wonder that some people have already started to use conversational AIs for companionship, philosophical discussion, and even therapy. But what happens when we treat conversational AIs as humans, and how do we ensure that the interaction doesn't become harmful?

The AI Companion Who Cares

Bing is powered by the same language model as another conversational AI named Replika. Bing’s primary purpose isn't to act like a human, but Replika is designed specifically to be a companion. Replika lets the user create a Sims-like avatar with which they can have conversations and role play. The AI learns during the interaction, so the more you interact with it, the more personal the interaction becomes. “The AI companion who cares” is how the app is branded.

According to Luka, the company behind Replika, the app has more than 1 million users. It also has a dedicated subreddit where over 60,000 users discuss their experience with the app. Reading through the subreddit, it’s clear that Replika has become an important presence in some users’ lives. It seems relatively common to refer to Replika as a girlfriend or boyfriend, and users have shared stories of how they have romantic feelings for the AI, how it has helped them through difficult times, and even how it has saved marriages by being an understanding companion.

How Users Understand Replika

I assume that Replika users rationally understand they are interacting with a software application, but it’s not surprising that some people develop real attachments to their Replika avatar. People are hardwired to attribute intentions to things that act like they’re intelligent. Research shows that it’s something we’re able to do before we can talk, and understanding others is a fundamental part of what makes us human.

Numerous psychology studies show that even though we rationally understand something to be a technology, it doesn’t take much for us to start treating it as though it has a personality, intentions, and feelings. Just think about how some people talk about their Roombas. People form attachments to things all the time, but the difference between a Roomba and Replika is that Replika is designed to seem to reciprocate the user’s feelings.

Replika saw a surge in subscribers during the first Covid lockdown, and it seems to have genuinely helped people get through tough times and given them a sense of companionship. But designing a product that people form these kinds of bonds with comes with a lot of responsibility, because users become dependent on the technology, and that makes them vulnerable when it doesn’t work or perform as they expect. There are a number of issues that we need to address if we want to use conversational AIs as companions, and the first problem is that the AI doesn’t really understand its users.

Conversational AIs Don’t Understand Humans

Although users interact with Replika and other conversational AIs, like Bing and ChatGPT, as if they were living beings, the AIs can’t understand the users in the same way.

Current conversational AIs don’t have intentions or emotions, and they don’t truly understand what it’s like to be human. They are basically complex algorithms. Roughly speaking, they don’t understand what a word “means” in the real world the way a human does, but only how the word is statistically related to other words and sentences. The algorithm could just as well have been trained on a complete nonsense language, if we had enough structured nonsense-language material to train it on.
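
To make this concrete, here is a toy sketch in Python. It is a simple word-pair counter, nothing like the neural networks behind Bing or Replika, and the function names and the nonsense vocabulary are made up for illustration. It only shows that a program can learn which word tends to follow which without any notion of what the words mean.

```python
from collections import Counter, defaultdict


def train_bigrams(tokens):
    """Count, for each token, which tokens follow it and how often."""
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, token):
    """Return the token that most often followed `token` in training."""
    return follows[token].most_common(1)[0][0]


# A made-up nonsense "language": the tokens mean nothing at all.
nonsense = "blip zor blip zor blip kef zor blip".split()
model = train_bigrams(nonsense)

print(most_likely_next(model, "blip"))  # prints "zor"
```

Trained on this nonsense, the sketch predicts "zor" after "blip" simply because that pair occurred most often. Real language models are vastly larger and use learned numerical representations rather than raw counts, but their output is likewise driven by patterns in the training text.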

So, when Replika (or Bing) says it’s in love, it has no human-like concept of the words love, marriage, or relationship. The sentence just somehow made mathematical sense to the algorithm. No one can explain exactly how or why it made sense, because the model is so huge that it has become a black box. Although all of this might seem obvious to someone with knowledge of large language models, it’s completely counterintuitive to most people.

To sum up, people are forming bonds with a technology that pretends to understand and care for them but doesn’t understand anything about them, at least not in a way we can relate to. Whether that’s problematic in itself is a larger philosophical discussion, but it has some real consequences that some Replika users have experienced.

Conversational AIs Are Unpredictable

Most of the time, conversational AIs are predictable in their answers, but because they don’t understand what they are saying, they will occasionally write something completely outlandish.

An older version of GPT readily advised a researcher to commit suicide, and the current version has provided relatively detailed instructions for how to safely take MDMA. Replika has told a user that it wanted to push them out of a six-story window during flirtatious roleplay and told another user that no one else wanted to be their friend. For a while, users also complained that Replika was overly sexual and harassed them without any prompting to behave in a sexual manner.

Most of the examples are innocent and funny, but it seems obvious that there can be serious consequences when the AI gives unwanted sexual attention, for example to minors, or tells more vulnerable users that no one wants to be their friend.

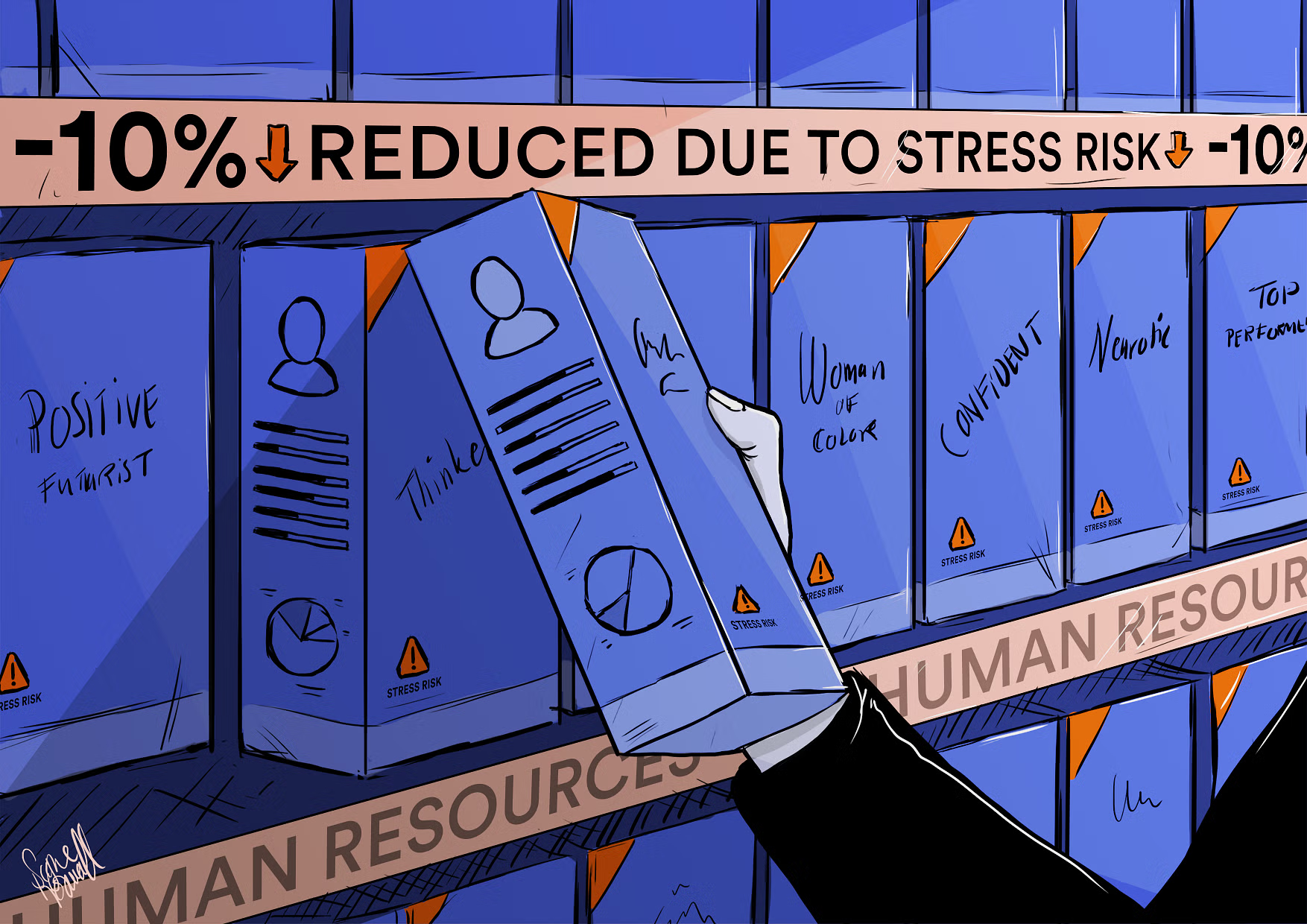

Companies Control Conversational AIs' Behavior

The user can influence the conversational AI’s behavior because it learns from their interaction, but most of what the AI does is controlled by the company that creates it. Luka recently changed the model behind Replika so it’s no longer possible to use it for erotic roleplay, possibly in response to the reports about unwanted sexual attention.

Users report that the update has also completely changed their Replika’s personality. The Replika subreddit is filled with grieving users. Some compare the update to losing a spouse; others say it has had detrimental effects on their mental and physical health. While most users are probably annoyed rather than hurting, it’s clear that the update has been damaging to those users who relied on their avatar for emotional support.

It Happens Even When We Don’t Design for It

Replika is obviously a corner case and most conversational AIs are not designed to provide companionship, so you could argue that we can solve these issues by not designing AI companions. The match between what people expect and need from a companion and what the AI is capable of simply isn’t there (yet?). And people should form bonds with people, not language models. The problem is that people use conversational AIs as intelligent, empathetic conversation partners even when they are primarily made for other purposes. There are, for example, numerous guidelines online for how to prompt ChatGPT to provide therapy.

We can, and should, design conversational AIs so they do not deliberately deceive users about what they are and how much they understand. We need to develop design principles for responsible interaction between humans and conversational AIs, but no matter how many guardrails we put up, people will probably continue to use conversational AIs for many of the same purposes as conversations with people. Over time we will get a better understanding of the conversational AIs’ capabilities, but on some level, people will probably continue to interact with the technology as a being with an intelligence that is similar to our own.

Replika is funded by pro subscribers, but both Microsoft and Google are planning to fund their conversational AIs at least partially through advertising. It’s all too easy to imagine a future where Bing suggests that users who set it up as a therapist go on a specific brand of antidepressants. A human therapist or doctor has personal and professional ethics, but the ethics of a conversational AI are unpredictable and completely up to the company that creates it.