"It works fine locally. What's gone wrong?" – the inevitable (and eternal?) dilemma of deployment, as if the actual coding wasn't hard enough (even today with the help of AI)! Software development can be a real maze - solving one issue often unravels another:

"Building software is like having a giant house of cards in our brains" – Idan Gazit on Developer Experience

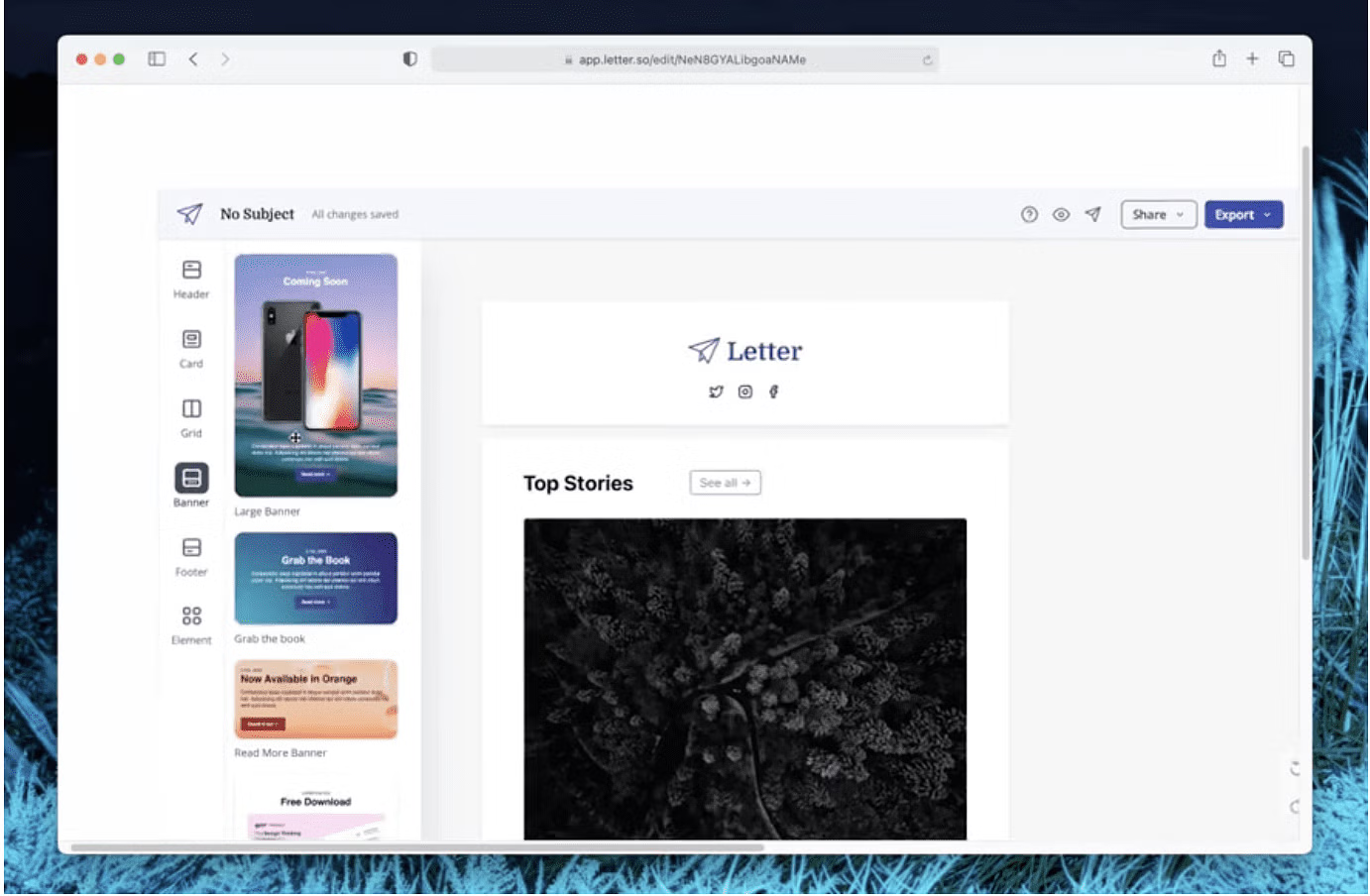

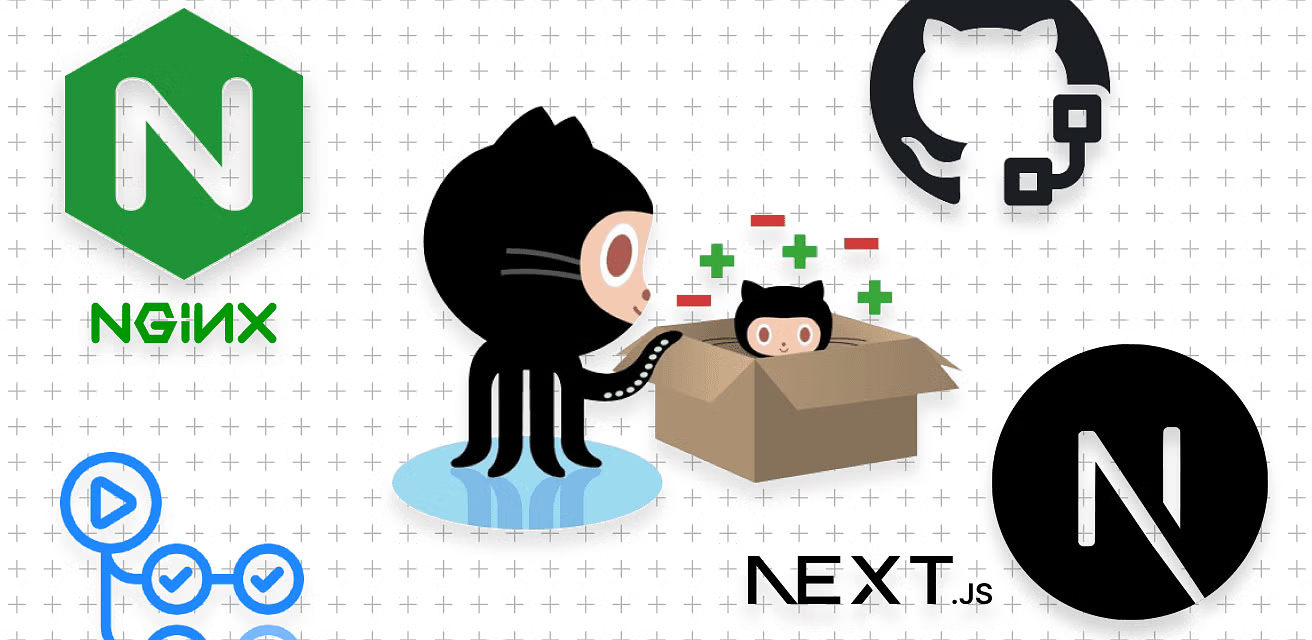

With countless moving parts to juggle, tools that ease the transition from code to shipped product are real game-changers. For me, Vercel's developer experience did just that. It would seamlessly deploy and host my NextJS app (this site!) from a single 'git push'. The zero-downtime deployments, and knowing it rolls back if something goes wrong, gave me peace of mind. I could just focus on creating, not managing servers!

From Serverless to Self-host NextJS

Although Serverless hosting provides so much convenience, the intricate billing model brought a layer of complexity that ultimately compelled me to revert to self-hosting. After experiencing the awesomeness of Vercel though, I couldn't just go back to an old manual workflow.

This post therefore walks you through how I set up a self-hosted NextJS app with a development workflow powered by GitHub Actions. It gives:

Zero downtime – no downtime between deployments

'Roll back' – if the build fails, use the working version

Auto Deploy - 'git push' to main

Logs – view all the build logs and see build progress

You'll also find a couple script files you can copy and paste to create a deploy experience along the lines of Vercel's.

With the recent discussions around serverless pricing vs self hosting, I hope the post saves you some time if you're trying self-hosted NextJS.

...but first, enter Blue Green deployment: 👀


Blue Green Deployment

Before anything, let's understand the general workflow.

The app will use a 'Blue Green' deployment model, where we'll have 2 copies of the Next app side-by-side. Here's how it works:

2 versions of the same app - one called blue, one called green

1 live version at a given time (say the green one), and changes get deployed to the 'sleeping' version (or the blue one)

If the build is successful, the sleeping app is turned on and becomes the live one. The other app is turned off, and is now the 'old version'.

This diagram from Candost's blog illustrates it really well:

Overall it seems safe - if the build fails, nothing happens. There's no downtime as the new build must work before the old one is turned off.
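To make the switch concrete, here's a minimal sketch of the "which app gets the next build?" decision. `other_color` is a name made up for this example, not something from the deploy script later in the post:

```shell
#!/bin/bash
# other_color LIVE: print the colour that should receive the next deployment.
# (Hypothetical helper, for illustration only.)
other_color() {
  case "$1" in
    green) echo "blue" ;;
    blue)  echo "green" ;;
    *)     echo "unknown live app: $1" >&2; return 1 ;;
  esac
}

# Example: green is live, so the next build goes to blue.
live="green"
target=$(other_color "$live") || exit 1
echo "live=$live, deploying to $target"
```

Anything other than blue or green returns an error, so a typo can't silently pick a target.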

Here's another article that explains blue green deployment in more depth - when I came across the concept, I thought this was all beyond me, but in practice I found it more straightforward.

Let's see how it's done in practice:

Server Setup and Deployment Guide

To get started with this 'blue green' setup, this guide assumes you already have a web server running, preferably NGINX (but it should be possible with others like Apache). I'm not going to walk you through installing that stuff, only how to configure it all. The rest of the 'guide' is split into 2 parts:

1. Server Setup

Next Apps: Setting up the 2 NextJS apps (blue and green)

NGINX/PM2: Configure the NGINX server and PM2 processes

2. Deployment Guide

Deploy: Add a deploy script to build the new deployment

GitHub Actions: Configure GitHub Actions to trigger/log the build

Let's begin the server setup with the NextJS app:

Part 1: Server Setup

I started out with a single Next app running on the server using NGINX and PM2. So let's do that first, then add the second Next app to create the blue green workflow.

A. Green App

Follow these 3 parts to set up the green app:

Next App (green): The initial app was cloned from the Prototypr GitHub repo (give it a star ⭐) - and the .next folder is built using npm run build. For the deploy script to work, call the app's folder next-app-green.

PM2 (port 3000): The initial app is running on PM2, using the command below. If you're following my process, make sure your app name is "green", and you specify port 3000. That's important for later!

pm2 start npm --name "green" -- run start -- --port 3000

NGINX (proxy_pass): The initial NGINX location block looked like below. It uses `proxy_pass`, which points to the app that PM2 (the process manager) runs on port 3000. Make sure you have this working first, then we'll make a couple of tweaks for blue/green.

server {

location /

{

proxy_pass http://localhost:3000;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

Be sure your app is turned on by running pm2 ls - it will say 'online' in the status column.

B. Blue app

Here's the bits for the blue app, and to get the blue green concept set up:

Next App (blue): Now clone the same Next app GitHub repo into a new directory called next-app-blue (the name is important for the deploy script in part 2)

PM2 (port 3001): start your blue version with the following command. Ensure the --name flag is blue, and the --port flag is 3001:

pm2 start npm --name "blue" -- run start -- --port 3001

NGINX (upstream): this is the cool part! With 1 additional block in the NGINX config, we can have both apps running at the same time. It takes these 2 changes:

Add an 'upstream' block at the top that directs to both of our running PM2 processes (localhost:3000 and localhost:3001).

Change the original proxy_pass to http:// + the name of the upstream block. In this case it is http://bluegreen, but you can call it anything.

upstream bluegreen {

server localhost:3000 weight=1;

server localhost:3001 weight=1;

}

server

{

server_name yourdomain.com;

location /

{

proxy_pass http://bluegreen;

#proxy_pass http://localhost:3000;

...

}

}

It's called Load Balancing

This is actually called load balancing - the requests to the domain will be sent to either of your running apps. If one of the apps is stopped, all requests will go to the other running app. Load balancing sounded really complicated to me, but it was done with just those 2 changes. Here's more info on it with NGINX:

Last step for this section: now that both apps are running, stop one of them using pm2 stop green (or blue). At this stage, running pm2 ls will produce a table like the one below - 1 app online, the other stopped (it doesn't matter which way around):

All the traffic is therefore going through 1 app.
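Pulling Part 1 together, here's a rough sketch of the setup steps as one script. Treat it as an illustration rather than a tested part of this setup: `run`, `setup_app`, `reload_nginx`, `REPO_URL` and `APP_ROOT` are names made up for this example, and the /var/www/html default is borrowed from the deploy script in Part 2. With DRY_RUN=1 it only prints the commands, so you can read them before running anything.

```shell
#!/bin/bash
# run CMD...: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# setup_app COLOR PORT: clone, build and start one copy of the app.
# The body runs in a subshell, so the cd does not leak into your session.
# REPO_URL is a placeholder you must set to your own repository.
setup_app() (
  color="$1"
  port="$2"
  dir="${APP_ROOT:-/var/www/html}/next-app-$color"
  run git clone "$REPO_URL" "$dir" || exit 1
  run cd "$dir" || exit 1
  run npm install --legacy-peer-deps || exit 1
  run npm run build || exit 1
  run pm2 start npm --name "$color" -- run start -- --port "$port"
)

# reload_nginx: check the edited config for syntax errors, then apply it.
reload_nginx() {
  run sudo nginx -t || return 1
  run sudo nginx -s reload
}

# Usage on the server:
#   REPO_URL=<your repo> setup_app green 3000
#   REPO_URL=<your repo> setup_app blue 3001
#   reload_nginx
```

The `nginx -t` check is there because the post edits the NGINX config twice, and a typo in the upstream block would otherwise only show up when the reload fails.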

Part 2: Deployment Guide

With that Blue/Green server setup done, there's 2 parts left. You can paste my scripts for both of these, but there's a little bit of configuring to do to set up SSH keys for GitHub:

Deploy Script: Add a deploy.sh script on the server that will create the latest build, and fail gracefully if anything goes wrong.

GitHub Actions: Set up GitHub actions to trigger a deployment when you push to the main branch

1. Deploy Script

This is the script that will be triggered by a GitHub action when you push to your main branch. Find it below - you can copy and paste it into a file called deploy.sh (or whatever), and put it in your user's home directory.

The script seems long, but I've added comments to explain what's happening. Here's an overview:

Checks live app: It first determines which app is running in PM2 - if green is running, set the deploy folder to blue. If blue is running, deploy to green folder.

Builds new deployment: Then it navigates to the deployment folder, runs git pull, npm install, and next build. If anything fails, the error is displayed in the console. (The script expects nvm and jq to be installed; without them you'll get an error.)

Starts new deployment: If everything builds without errors, it will start the new app and stop the old one. Otherwise, it will just fail, leaving your live app still running.
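The "stop at the first failure" idea behind those three steps can be sketched in a few lines. This is a simplified illustration, not the script itself: `step` and `demo_pipeline` are hypothetical names, and `true` / `false` stand in for commands like git pull and npm run build.

```shell
#!/bin/bash
# step LABEL CMD...: run a command and report which step failed, if any.
step() {
  local label="$1"
  shift
  echo "==> $label"
  "$@" || { echo "FAILED: $label" >&2; return 1; }
}

# demo_pipeline: each step only runs if the previous one succeeded.
demo_pipeline() {
  step "pull latest code" true  || return 1
  step "build"            false || return 1   # simulated build failure
  step "switch traffic"   true  || return 1
}

demo_pipeline || echo "deployment aborted, live app untouched"
```

Because the simulated build fails, "switch traffic" never runs, which is the behaviour that keeps the live app safe.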

If you've set up the folders with the same names as outlined throughout, you'll be able to paste this into your deploy.sh file and it should work with minimal changes. You should test it after you paste it in:

#!/bin/bash

#both app folders start with 'next-app'

LIVE_DIR_PATH=/var/www/html/next-app

#add the suffixes to the app folders

BLUE_DIR=$LIVE_DIR_PATH-blue

GREEN_DIR=$LIVE_DIR_PATH-green

# set up variables to store the current live and deploying to directories

CURRENT_LIVE_NAME=false

CURRENT_LIVE_DIR=false

DEPLOYING_TO_NAME=false

DEPLOYING_TO_DIR=false

# this checks if the green and blue apps are running

GREEN_ONLINE=$(pm2 jlist | jq -r '.[] | select(.name == "green") | .name, .pm2_env.status' | tr -d '\n\r')

BLUE_ONLINE=$(pm2 jlist | jq -r '.[] | select(.name == "blue") | .name, .pm2_env.status' | tr -d '\n\r')

# if green is running, set the current live to green and 'deploying to' is blue

if [ "$GREEN_ONLINE" == "greenonline" ]; then

echo "Green is running"

CURRENT_LIVE_NAME="green"

CURRENT_LIVE_DIR=$GREEN_DIR

DEPLOYING_TO_NAME="blue"

DEPLOYING_TO_DIR=$BLUE_DIR

fi

# if blue is running, set the current live to blue and 'deploying to' is green

if [ "$BLUE_ONLINE" == "blueonline" ]; then

echo "Blue is running"

CURRENT_LIVE_NAME="blue"

CURRENT_LIVE_DIR=$BLUE_DIR

DEPLOYING_TO_NAME="green"

DEPLOYING_TO_DIR=$GREEN_DIR

fi

# if both green and blue are running, set 'deploying to' to blue

if [ "$GREEN_ONLINE" == "greenonline" ] && [ "$BLUE_ONLINE" == "blueonline" ]; then

echo "Both blue and green are running"

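# keep the names and directories in sync: treat green as live, deploy to blue

CURRENT_LIVE_NAME="green"

CURRENT_LIVE_DIR=$GREEN_DIR

DEPLOYING_TO_NAME="blue"

DEPLOYING_TO_DIR=$BLUE_DIR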

fi

# display the current live and deploying to directories

echo "Current live: $CURRENT_LIVE_NAME"

echo "Deploying to: $DEPLOYING_TO_NAME"

echo "Current live dir: $CURRENT_LIVE_DIR"

echo "Deploying to dir: $DEPLOYING_TO_DIR"

# Navigate to the deploying to directory

cd "$DEPLOYING_TO_DIR" || { echo 'Could not access deployment directory.' ; exit 1; }

# use the correct node version

# Load nvm

export NVM_DIR="$HOME/.nvm"

[ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh" # This loads nvm

nvm use 18.17.0 || { echo 'Could not switch to node version 18.17.0' ; exit 1; }

# load the .env file

source .env.local || { echo 'The ENV file does not exist' ; exit 1; }

# Pull the latest changes

# clear any changes to avoid conflicts (usually permission based)

git reset --hard || { echo 'Git reset command failed' ; exit 1; }

git pull -f origin main || { echo 'Git pull command failed' ; exit 1; }

# install the dependencies

npm install --legacy-peer-deps || { echo 'npm install failed' ; exit 1; }

# Build the project

npm run build || { echo 'Build failed' ; exit 1; }

# Restart the pm2 process

pm2 restart "$DEPLOYING_TO_NAME" || { echo 'pm2 restart failed' ; exit 1; }

# add a delay to allow the server to start

sleep 5

# check if the server is running

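# pass the app name to jq with --arg, because shell variables are not expanded inside single quotes

DEPLOYMENT_ONLINE=$(pm2 jlist | jq -r --arg name "$DEPLOYING_TO_NAME" '.[] | select(.name == $name) | .name, .pm2_env.status' | tr -d '\n\r')

if [ "$DEPLOYMENT_ONLINE" == "${DEPLOYING_TO_NAME}online" ]; then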

echo "Deployment successful"

else

echo "Deployment failed"

exit 1

fi

# stop the live one which is out of date

pm2 stop $CURRENT_LIVE_NAME

Here it is in a GitHub repo for easier access:

That script was hard for someone who had never written a bash script before! If you have any improvements, they're welcome.
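One possible tidy-up, offered as a sketch rather than a drop-in replacement: instead of gluing the name and status together into strings like "greenonline", a small helper can return just the status of one app. `status_of` is a hypothetical name; it assumes the same `pm2 jlist` JSON fields the script already reads (`.name` and `.pm2_env.status`) and needs jq installed.

```shell
#!/bin/bash
# status_of NAME: read `pm2 jlist` JSON on stdin and print that app's status.
# Prints nothing if no app with that name exists.
status_of() {
  jq -r --arg name "$1" '.[] | select(.name == $name) | .pm2_env.status'
}

# Usage on the server (needs pm2 and jq):
#   if [ "$(pm2 jlist | status_of green)" = "online" ]; then
#     echo "Green is running"
#   fi
```

Passing the name with `--arg` also means the same helper works when the name is held in a shell variable.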

Before going to the next step, it's a good idea to make sure it works. Log into your server, make the script executable with chmod +x deploy.sh, and run it with ./deploy.sh. Fix any errors, and run pm2 ls again to see if the correct app is running.
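While testing, keep in mind that the script waits 5 seconds and then asks PM2 whether the process is 'online'. As far as I know, that only tells you the process is alive, not that Next is answering requests yet. A possible refinement is to poll the new port a few times before stopping the old app. The `retry` helper below is hypothetical, and the curl line in the comment assumes the blue app on port 3001:

```shell
#!/bin/bash
# retry ATTEMPTS DELAY CMD...: run CMD until it succeeds or ATTEMPTS run out.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    echo "attempt $i/$attempts failed" >&2
    i=$((i + 1))
    # only sleep if another attempt is coming
    [ "$i" -le "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Possible use in deploy.sh, in place of the fixed `sleep 5`:
#   retry 10 1 curl -fsS -o /dev/null http://localhost:3001 \
#     || { echo 'New app never answered' ; exit 1; }
```

With 10 attempts and a 1 second delay this gives up after roughly 10 seconds and leaves the old app running, in keeping with the fail-safe behaviour of the rest of the script.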

2. Set up GitHub Actions

After ensuring the deploy script works, and it is sitting in your user's home directory (cd ~/), we now need a GitHub action to run it when pushing to the main branch. You can learn how to do it by pasting my action, or here's a decent guide if you like reading:

Create the deploy.yml workflow file

From the above article, this is a practical part to do now:

From the root of our application, create a folder named .github/workflows that will contain all the GitHub action workflows. Inside this folder, create a file named action.yml. This file will hold the instructions for our deployment process.

In my workflow folder, I have a file called deploy.yaml - you can copy its contents to your own yaml file:

Upon inspecting this deploy.yml, you can see in the first few lines that it gets triggered when pushing to the main branch. It also needs these 3 secrets to deploy on your server:

SERVER_HOST: ${{ secrets.SERVER_HOST }}

SERVER_USER: ${{ secrets.SERVER_USER }}

SERVER_SSH_PRIVATE_KEY: ${{ secrets.SERVER_SSH_PRIVATE_KEY }}

Let's set that bit up:

Set up your server access secrets

The 3 secrets needed are these:

SERVER_HOST is your server's IP address

SERVER_USER is the username of the user whose home directory holds the deploy.sh script

SERVER_SSH_PRIVATE_KEY: your user's key to ssh into the server.

Add them to the environments secret section of your repo as shown here:

Steps 1, 2 and 3 of the following article by Gourab show you how to do this part. That's what I followed to set up the secrets, and generate the ssh key needed for SERVER_SSH_PRIVATE_KEY.
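To show how the three secrets fit together, here's a guess at the kind of shell steps a workflow like this runs on the GitHub runner. It is a sketch, not the contents of my deploy.yaml: `install_key` and `remote_deploy` are hypothetical names, and it assumes the secrets are exposed as environment variables with the same names.

```shell
#!/bin/bash
# install_key KEY [SSH_DIR]: write the private key where ssh can find it.
install_key() {
  local key="$1" ssh_dir="${2:-$HOME/.ssh}"
  mkdir -p "$ssh_dir" || return 1
  chmod 700 "$ssh_dir" || return 1
  printf '%s\n' "$key" > "$ssh_dir/id_rsa" || return 1
  chmod 600 "$ssh_dir/id_rsa"   # ssh refuses keys that other users can read
}

# remote_deploy: trust the server's host key, then run deploy.sh over SSH.
# Everything deploy.sh prints comes back over the SSH connection, which is
# how the build output ends up in the GitHub Actions log.
remote_deploy() {
  install_key "$SERVER_SSH_PRIVATE_KEY" || return 1
  ssh-keyscan -H "$SERVER_HOST" >> "$HOME/.ssh/known_hosts" || return 1
  ssh -i "$HOME/.ssh/id_rsa" "$SERVER_USER@$SERVER_HOST" 'bash ~/deploy.sh'
}
```

If deploy.sh exits with a non-zero status, ssh returns that status too, so the workflow run is marked as failed.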

That's pretty much it - pushing to the main branch should trigger the deploy script on your server, and you can watch the build progress from GitHub itself. All the logs and progress from the deploy.sh script will be logged in GitHub. I don't think that happens if you use a webhook rather than SSH, which is why I think this is great:

That's all I got for now, didn't expect this post to be so long. Below are some issues I ran into.

Star us on GitHub ⭐

If this helped you, give the repository a star:

I'm trying out this GitHub sponsors thing to make more resources like this, so check that out too if you want to support open source efforts:

Troubleshooting

Here are a couple of issues I had connecting to my server from GitHub Actions:

Host key verification failed:

Once that's set up all should be ready to go, or you might get this error:

Run mkdir -p ~/.ssh/

Host key verification failed.

The "Host key verification failed" error typically means that the SSH client was unable to verify the identity of the server. This can happen if the server's host key has changed or if it's the first time you're connecting to the server and its host key is not in your known_hosts file. (copilot response)

I fixed it by adding GitHub to my server's known hosts by following this:

Then added this to the deploy.yaml:

# Fetch the server's host key and add it to known_hosts

ssh-keyscan -H ${{ secrets.SERVER_HOST }} >> ~/.ssh/known_hosts

Permission denied (publickey):

Another issue I had when connecting via ssh was:

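Load key "/home/runner/.ssh/id_rsa": error in libcrypto @: Permission denied (publickey).

This happened because I pasted the wrong key to SERVER_SSH_PRIVATE_KEY, or pasted it incorrectly by missing out (or including) the ssh-rsa part of the key. I can't remember exactly how it was, but as far as I know the secret should hold the whole private key file, including its BEGIN and END lines, while the line starting with ssh-rsa is the public key that belongs in the server's authorized_keys. Pasting the keys correctly fixed the permission denied issue.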
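If you're unsure which key you're about to paste, a quick check like this can help. `key_kind` is a hypothetical helper that only looks at how the text starts, so it is a sanity check and not a validation of the key itself:

```shell
#!/bin/bash
# key_kind TEXT: guess whether TEXT is a private key, a public key, or neither.
key_kind() {
  case "$1" in
    "-----BEGIN "*"PRIVATE KEY-----"*) echo "private" ;;
    ssh-rsa\ *|ssh-ed25519\ *|ecdsa-sha2-*) echo "public" ;;
    *) echo "unknown" ;;
  esac
}

# Usage, where <your-key-file> is whichever file you plan to copy from:
#   key_kind "$(cat <your-key-file>)"
```

SERVER_SSH_PRIVATE_KEY wants the text that comes back as "private".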

fin.

Buy me a coffee
