How can Stable Diffusion inspire creators, and how can we design its UX?

Stable Diffusion by stability.ai is one of a number of exciting AI methods used to generate images from text descriptions. You might have seen its different demos and early use cases flying around social media - from generating avatars and hero images, to even making full music videos.

These AI-assisted creator tools work similarly, providing the user with a number of variations based on a prompt, from which the user chooses the one they like the best.

Sometimes the variations can be completely random and unexpected, which can actually be very helpful in a creative process. Many AI tools are designed to use this unpredictability as a feature. For example, with a new AI-assisted feature introduced on Notion, you can almost 'collaborate' with AI to get inspired and improve your writing.

Trying out these AI-assisted tools and seeing their results has been interesting and thought-provoking. But when I took it one step further and built a simple AI-assisted tool myself, even more questions and insights came up. These are the two main questions I've been pondering.

Aside from the results of AI-assisted tools, how do these tools impact the way we think? And as designers, how can we make the UX of AI tools even better and more useful?

Rather than a ‘how to’ tutorial for Stable Diffusion, in this article I’d like to share what I learned and realized about the questions above, through a practical example: an app I built called ‘Viewmaker’.

Building ‘Viewmaker’

What I've made is a web app that takes a photo with your phone's camera and enhances it with the Stable Diffusion API.

The level of enhancement is much more powerful than in simple editing and filtering apps, as you can use text prompts to describe how you want to 'recreate' the photo you just took. That's why I’m calling this project ‘Viewmaker’, in contrast to the camera's original role as a ‘Viewfinder’.

I'll be talking about where you can access it at the end, but before that, I’ll explain some of the decisions and challenges I noticed when building with Stable Diffusion. Let’s start with a story on how Stable Diffusion can be more interesting to the end user.

What makes Stable Diffusion an interesting tool for creators?

A tool affects the result, but also the approach

On weekends or when I travel, I carry my film camera, which is heavy and also costly, since I have to buy film and pay to develop the photos. I like the unique texture and colors it creates, but more than that, I choose it over digital cameras because it changes my attitude toward the world around me.

The weight on my shoulder and the limited number of shots make me aware of every moment I encounter and keep me ready for it.

So what does the film camera have to do with the hot topic, Stable Diffusion? The tool you use to create something affects not only the result, but also your approach and perspective toward a subject. With my film camera, I pay conscious attention to subtle moments and the good lighting that film needs. With a smartphone camera or a DSLR, my attitude and subjects shift to things that are better captured in higher resolution, at a wider angle, or even in video.

Now bringing an AI assistant to the smartphone camera changes that perspective once more, and therefore how the camera is used.

How did ‘Viewmaker’ affect my perspective?

Viewmaker can change the approach to using a camera, as the goal is to augment the picture with creative prompts to create new and different scenes. Some use cases might be an interior designer getting inspiration, or a storyteller creating new and imaginative scenes.

However, in my first few attempts at using it, it was hard to break away from what was in front of my eyes. If I was looking at a plant, I could only think of words like ‘flowers’ and ‘butterflies’. Once I realized this, I started asking myself, ‘what would be the opposite, most unexpected, unnatural, and weirdest thing in the scene I’m looking at?’. From then on, I started thinking completely outside the box, even on the same road where I usually go for a run.

We’re excited to see how Stable Diffusion will increase the efficiency of creating images. After building and trying the app myself, I’m more curious to see how it changes the questions creators ask about the world.

I 'like' it when the result is different from what I intended.

The model I used in the beginning was an outdated version, so even with straightforward prompts, the result was far from what I imagined. As I tried out different versions and tweaked the properties, it started to return quite accurate images. Here are a few early results that didn’t turn out well:

But looking back at images that I made with Viewmaker, I enjoyed this ‘unexpectedness’ from the outdated version. Adding on to earlier points about how Stable Diffusion introduces us to a new perspective, these unexpected images were so intriguing, as they made me question myself further:

‘How come the AI interpreted my words as this image?’

‘How is this image related to my prompts?’

We usually seek an exponential increase in productivity with AI and ML. But this ‘unexpectedness’ is also something creators can make use of. This brings me to how we could make this explorative type of AI tool more user-friendly.

How can we make Stable Diffusion more user-friendly?

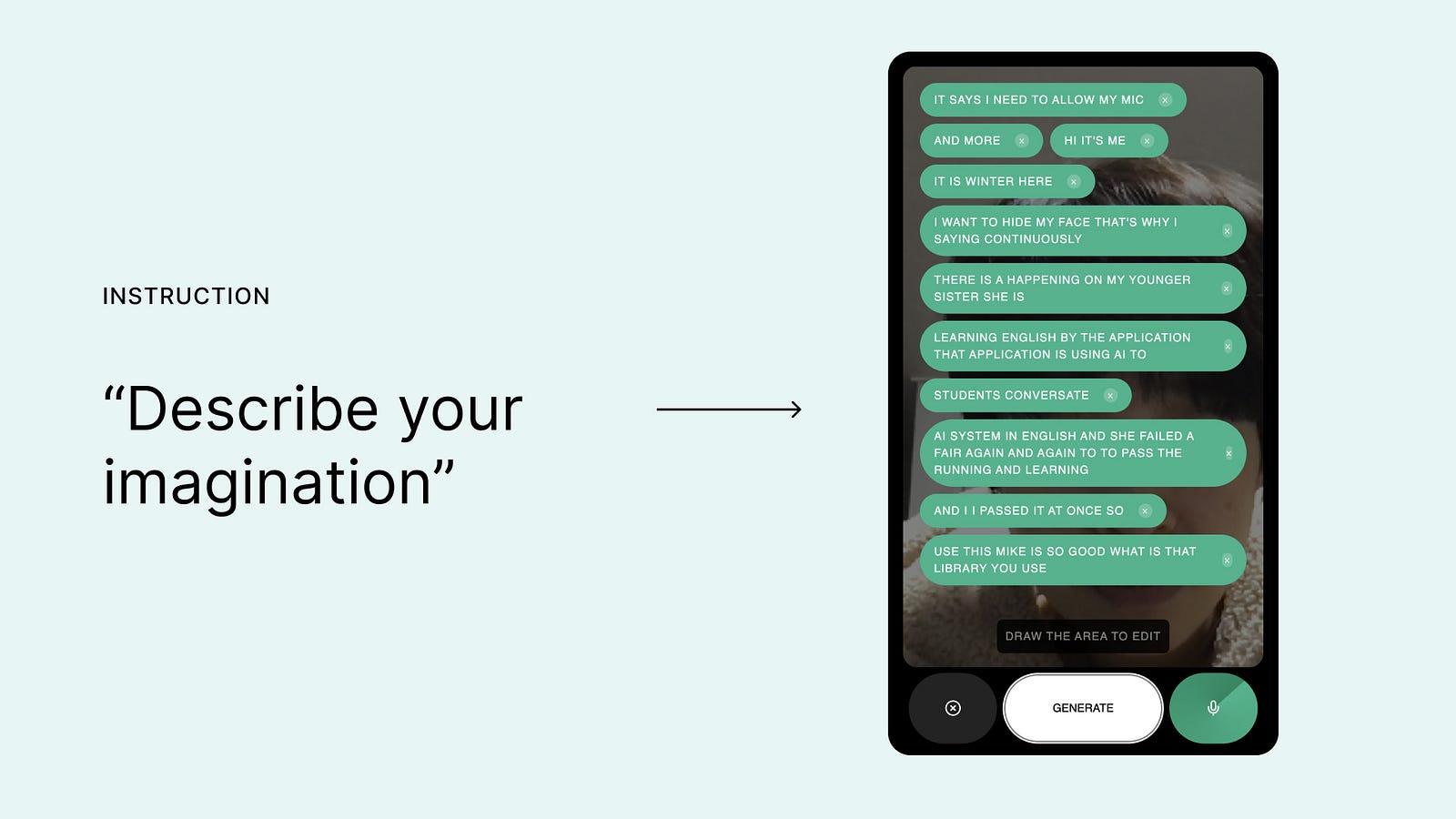

1. What do you mean by describing an image with text?

Stable Diffusion gives users lots of freedom and flexibility to create an image just by describing it in text, but you still need to learn some tricks to get the exact image you want.

When I shared my app with a friend who doesn’t know anything about Stable Diffusion, she came back to me with the screenshot below, saying it doesn’t work. She was literally ‘describing’ what she imagined in long sentences.

To introduce Stable Diffusion to general users, we have to keep in mind that prompt engineering is still quite far from natural language. Instead of ambiguously saying ‘describe what you imagine’, we need to provide more specific guidelines on how to choose the right words.
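One way to turn such guidelines into something the interface can act on is a small check that runs before the prompt is sent. The sketch below is only an illustration and not how Viewmaker works: the function name `promptHints`, the six-word threshold, and the hint wording are all assumptions made for this example.

```typescript
// Hypothetical helper: the name, threshold, and hint texts are illustrative assumptions.
const MAX_WORDS_PER_PHRASE = 6;

function promptHints(prompt: string): string[] {
  const trimmed = prompt.trim();
  if (trimmed.length === 0) {
    return ["Start with a subject, for example 'butterflies'."];
  }

  // Keyword-style prompts tend to be short phrases separated by commas,
  // so measure the longest comma-separated phrase in words.
  const phrases = trimmed
    .split(",")
    .map((p) => p.trim())
    .filter((p) => p.length > 0);
  const longest = Math.max(...phrases.map((p) => p.split(/\s+/).length));

  const hints: string[] = [];
  if (longest > MAX_WORDS_PER_PHRASE) {
    hints.push("Try short keywords separated by commas instead of full sentences.");
  }
  return hints;
}

// Usage: a keyword prompt passes, a long sentence gets a hint.
console.log(promptHints("butterflies, flowers, garden"));
console.log(promptHints("I would like to see a big garden full of butterflies flying around"));
```

A hint like this can be shown next to the input field, so a first-time user learns the keyword style while typing rather than after a disappointing result.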

2. Providing full control might not mean ‘better’

There are quite a few properties you can tweak to control the result from Stable Diffusion, beyond the prompt and the image themselves. But providing full flexibility can be confusing. Selecting only the properties most relevant to users’ needs and purposes will simplify the experience.
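As a rough illustration of this idea, the interface could expose a single choice and map it to a full set of properties behind the scenes. This is a minimal sketch under assumptions: the field names (`steps`, `guidanceScale`, `transformStrength`), the preset names, and all numeric values are hypothetical and not taken from Viewmaker or from any specific Stable Diffusion API.

```typescript
// Hypothetical settings object; real APIs use their own parameter names and ranges.
interface GenerationSettings {
  steps: number;             // number of denoising steps
  guidanceScale: number;     // how strongly the prompt steers the result
  transformStrength: number; // 0..1, how far the result may depart from the input photo
}

// The only control the user sees.
type Creativity = "subtle" | "balanced" | "wild";

// Illustrative values only.
const PRESETS: Record<Creativity, GenerationSettings> = {
  subtle: { steps: 30, guidanceScale: 7, transformStrength: 0.3 },
  balanced: { steps: 30, guidanceScale: 7, transformStrength: 0.5 },
  wild: { steps: 30, guidanceScale: 7, transformStrength: 0.8 },
};

// Returns a copy so callers cannot accidentally change the presets.
function settingsFor(level: Creativity): GenerationSettings {
  return { ...PRESETS[level] };
}

// Usage: the UI passes the selected level and forwards the settings to the image request.
console.log(settingsFor("wild"));
```

The design choice here is that the user decides 'how different should the result be', while the tool decides which properties that translates into.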

Some prompts can even be provided as defaults. For example, since Viewmaker uses a photo as its input, results that came out as a flat drawing or an illustration didn’t go well with the base image. So I added a few default prompts, like ‘HD, detailed, photorealistic’. It also helped to add a lens spec, which other articles suggest as a way to improve the quality of the result. (Kudos to Elenee Ch for a great article!)
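A minimal sketch of how default prompts could be appended to whatever the user says or types is shown below. The function name `buildPrompt` and the de-duplication step are assumptions for illustration. Only the three default keywords come from the text above; the lens spec is left out because its exact wording isn't given here.

```typescript
// Default keywords mentioned in the article; everything else here is a hypothetical sketch.
const DEFAULT_KEYWORDS = ["HD", "detailed", "photorealistic"];

function buildPrompt(userPrompt: string, defaults: string[] = DEFAULT_KEYWORDS): string {
  // Split the user's prompt into comma-separated parts and drop empty ones.
  const userParts = userPrompt
    .split(",")
    .map((p) => p.trim())
    .filter((p) => p.length > 0);

  // Skip a default keyword if the user already included it (case-insensitive).
  const seen = new Set(userParts.map((p) => p.toLowerCase()));
  const extras = defaults.filter((d) => !seen.has(d.toLowerCase()));

  return [...userParts, ...extras].join(", ");
}

// Usage: the user only provides the creative part of the prompt.
console.log(buildPrompt("butterflies, neon lights"));
```

Keeping the defaults in one list makes it easy to change them per use case, for example dropping ‘photorealistic’ if a future version accepts drawings as input.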

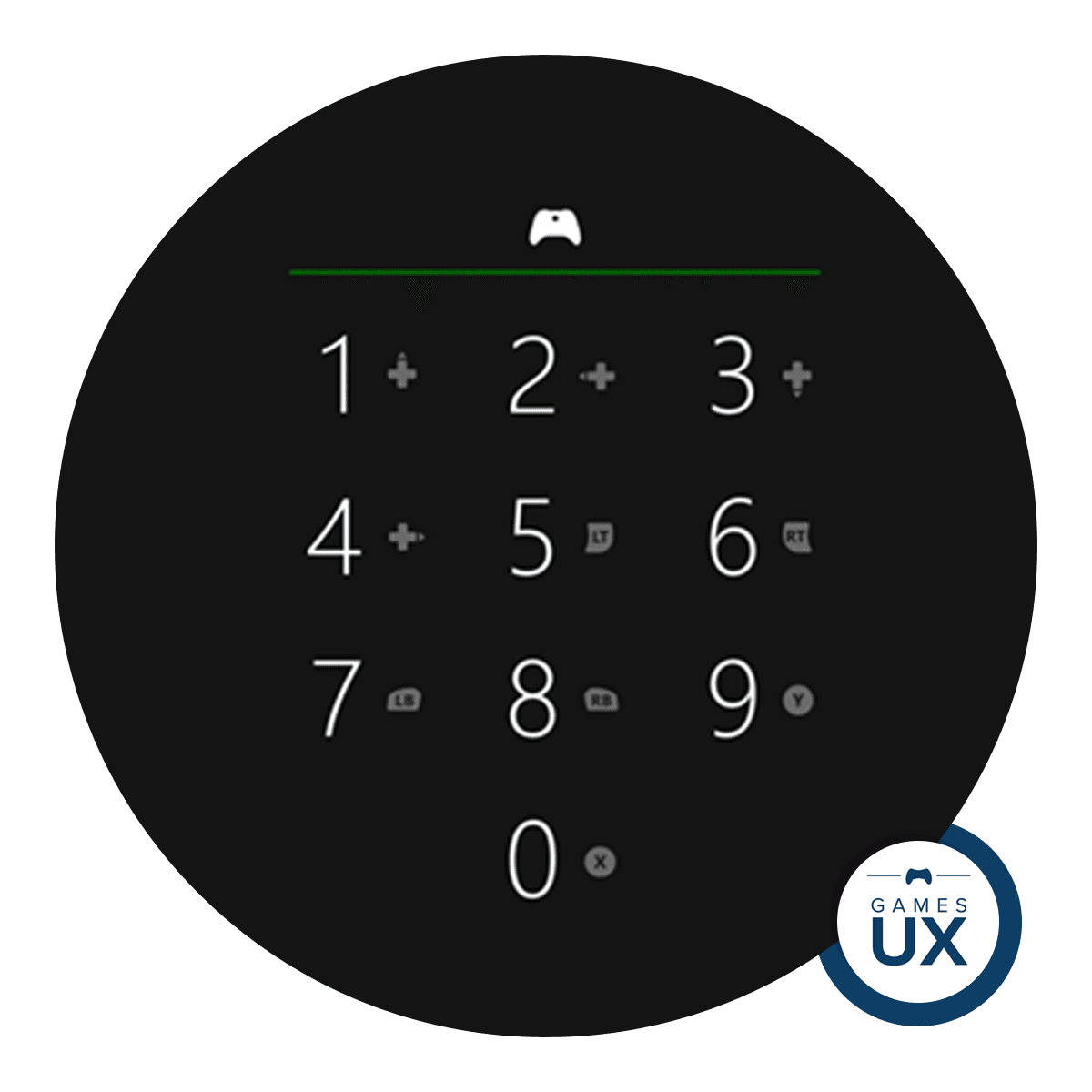

3. More to explore - what's the ideal prompt interface?

The interfaces and interactions for using Stable Diffusion should be designed with the context in mind. In the first version of ‘Viewmaker’, I used text input, as most Stable Diffusion interfaces do. But it didn’t take long to realize that it’s not ideal for mobile and for inpainting, as the keyboard takes up almost half of the screen and blocks the image. That’s why I replaced it with voice recognition, as I felt it’s better to keep looking at the image while coming up with prompts.

But there’s also a downside to using voice to add prompts. Even I was not fully comfortable saying words out loud when other people were around. This is something that I’d like to improve later on.

Outro, and the next step

In all, the interesting part of many AI-assisted tools is that the output is often unpredictable, which can inspire the user to explore ideas they wouldn’t have thought of, or to see things from a totally different angle. At the same time, different users interact with AI in different ways by providing prompts that only they may have thought of. So it becomes an interesting problem for designers and developers to prototype and improve the experience.

It's also interesting that, since there's a very low or even no learning curve to using AI-assisted tools, the approach and the result will vary a lot depending on 'who' uses them for 'what'.

While playing around with Viewmaker, I could think of many types of creators who’d find this tool useful: a fashion designer visualizing ideas from impromptu inspiration on the street, an interior designer or architect bringing imagination to life and sharing it with clients on the spot, or a storyteller imagining the most unexpected narrative that could happen in a space… It’d be interesting to hear more people’s thoughts about how this tool changes their perspective toward their subject.

Although 'Viewmaker' is still in ‘work-in-progress’ status with pending improvements in mind, I’m open to sharing this with more people. If you’re an artist, designer, photographer, or any type of creator interested in trying what I’ve built, please reach out to me via Instagram or my email.