A designer’s guide to hardware and software for mobile AR

uxdesign.cc – User Experience Design — Medium | Bushra

This article is an offshoot of the ongoing series ‘A Quick Guide to Designing for AR on Mobile.’ Catch up on Part 1, Part 2 and Part 3.

This article is not intended to teach (or even encourage) designers to code. My goal is to get you comfortable with the emerging AR landscape and give you insight into how an AR app is made.

Hardware and software are easy to overlook when focusing on design and experience. However, when working in new and emerging tech, knowing how things work can help inspire and even bring innovation to this space.

The following is a high-level breakdown of the different parts that make an AR application work on a mobile device.

Before we start, it’s probably worth understanding how a device is able to run in the first place.

All computers have a processor which serves as the brain of a device; it is the part that makes things work by performing mathematical operations.

Processors come in the form of chips, and these chips contain several hardware units. The hardware unit that reads and performs a program instruction is called a core.

Put a few cores together, and you have a CPU (Central Processing Unit). A CPU processes information step-by-step.

Put a few THOUSAND cores together, and you have a GPU (Graphics Processing Unit). A GPU splits its work into groups and processes them in parallel, all at the same time.

The GPU renders a graphic in several parts rather than in one piece. The GPU also increases frame rates and makes things look better and smoother in general.
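If it helps to see the difference, here is a minimal Python sketch of the same tiny image being rendered step-by-step and then in several parts at once. Everything in it is an assumption made for illustration: the 8×8 "screen", the tile size, and the `shade` function are hypothetical, and Python threads only stand in for GPU cores (they will not make this toy any faster).

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical tiny "screen", split into 4x4 tiles.
WIDTH, HEIGHT, TILE = 8, 8, 4


def shade(x, y):
    """Made-up per-pixel work: a diagonal gradient from 0 to 255."""
    return (x + y) * 255 // (WIDTH + HEIGHT - 2)


def tiles():
    """Top-left corner of every tile on the screen."""
    return [(ox, oy) for oy in range(0, HEIGHT, TILE) for ox in range(0, WIDTH, TILE)]


def render_tile(origin):
    """Render one tile and return it together with its position."""
    ox, oy = origin
    rows = [[shade(x, y) for x in range(ox, ox + TILE)] for y in range(oy, oy + TILE)]
    return origin, rows


def assemble(rendered):
    """Copy the finished tiles back into one full image."""
    image = [[0] * WIDTH for _ in range(HEIGHT)]
    for (ox, oy), rows in rendered:
        for dy, row in enumerate(rows):
            image[oy + dy][ox:ox + TILE] = row
    return image


def render_step_by_step():
    # CPU-style: finish one tile before starting the next.
    return assemble([render_tile(t) for t in tiles()])


def render_in_parallel():
    # GPU-style analogy: hand every tile to its own worker at the same time.
    with ThreadPoolExecutor(max_workers=4) as pool:
        return assemble(pool.map(render_tile, tiles()))


sequential_image = render_step_by_step()
parallel_image = render_in_parallel()
```

Both approaches produce the same picture. The only thing that changes is how the work is scheduled, which is the part a GPU is built to do well.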

Mobile devices have what’s called an SoC (System on a Chip), a single chip that condenses the large parts of a desktop device, such as the CPU and GPU, into one package.

Rendering in 3D is an expensive task and therefore needs a lot of power. The more intensive a job is, the more heat a device produces. Balancing this temperature impacts how powerful a device can physically be. Most high-performance computers come with cooling mechanisms such as fans and even liquid coolers to prevent meltdowns.

Since mobile phones need to be as lightweight and portable as possible, these devices cannot physically afford add-on parts for cooling and thus are limited in power. This is the main reason why 3D graphics that would otherwise be smooth on a desktop take a hit in performance and quality when experienced on mobile.

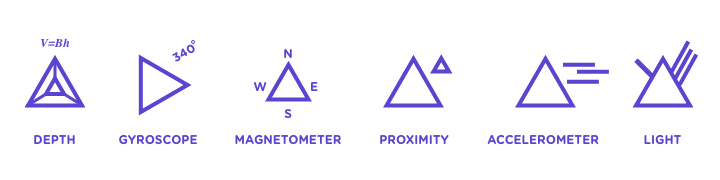

The process of a computer acquiring, analyzing and processing data from the real world is called Computer Vision. The most common method of ingesting information is through a camera. However, the most accurate way is to use sensors. A sensor is a piece of technology that detects information from its surroundings and responds with data.

These definitions are high level, so it should be noted that each sensor has a wide range of capabilities, both on its own and in combination with others.

It’s one thing to gather data but another to make it meaningful. For example, calculating the distance between a phone and a door can be easy with a sensor. However, trying to identify the door can be extremely difficult.

The computer must understand what makes a door different from a wall or a window. It must also comprehend the different types of doors that it is likely to encounter.
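To show why the distance half of that comparison is the easy one, here is a minimal sketch that assumes a time-of-flight style depth sensor: it sends out a pulse of light and times how long the pulse takes to bounce back. The function name and the 20 nanosecond reading are made up for illustration, and a real sensor does far more filtering than this.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # metres per second, in a vacuum


def distance_from_round_trip(seconds):
    """Distance to a surface, given how long a light pulse took to return.

    The pulse travels to the surface and back, so the trip is halved.
    """
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2


# Hypothetical reading: the pulse came back after 20 nanoseconds.
door_distance_m = distance_from_round_trip(20e-9)  # roughly 3 metres
```

One multiplication gets you the distance. Nothing in that number says whether the surface is a door, a wall or a window.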

An open-source software library like TensorFlow by Google makes it easy and fast to train a computer to understand what common objects, like a door, are.
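To give a feel for what "training" means, here is a toy sketch in plain Python. It is not TensorFlow and not how a real object recognizer works: it swaps in a much simpler technique (a nearest-centroid classifier), and the measurements are made-up numbers chosen only for illustration. The idea it shares with the real thing is that the computer learns from labeled examples rather than from hand-written rules.

```python
from statistics import mean

# Made-up training examples: (height in metres, width in metres) -> label.
TRAINING = [
    ((2.0, 0.8), "door"),
    ((2.1, 0.9), "door"),
    ((1.0, 1.2), "window"),
    ((1.2, 1.5), "window"),
]


def train(examples):
    """Average the measurements for each label into one 'typical' example."""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(mean(axis) for axis in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(model, features):
    """Pick the label whose typical example is closest to the new measurement."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(model, key=lambda label: squared_distance(model[label]))


model = train(TRAINING)
guess = classify(model, (1.9, 0.85))  # a tall, narrow shape
```

With these numbers the tall, narrow shape is labeled a door. A real model learns from thousands of images instead of two measurements, which is where a library like TensorFlow earns its keep.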

DEFINITIONS

Similar to 3D, AI can also be an extremely processing-intensive task on a phone. To help maximize performance, device manufacturers like Apple have started to introduce a hardware unit called a “Neural Engine”. This is a dedicated piece of technology that allows for faster performance of AI-related tasks.

The following definitions are flexible and continuously evolving based on the developer landscape.

Engines can come in several forms. A gaming engine like Unity has its own interface for real-time 3D authoring, but it can also be embedded inside an application. A rendering engine like V-Ray comes as a plug-in for other 3D applications. Software can also have multiple engines depending on the users’ needs. The following are examples of common engines:

These tools make it so that a developer doesn’t have to code an application from scratch each time. Frameworks also offer crucial features that may require specialized knowledge to code.

There are tons of AR SDKs and frameworks out in the market at the moment (and the list is only growing!). It is important to note that the platform directly impacts the technology that gets used. Some kits can accommodate several platforms, whereas others are made for a single platform and/or device only.

Designing for mobile is currently a surefire way of making sure an experience gets into the hands of the most users possible. Not everyone has smart glasses right now, but nearly everyone has a phone. However, a mobile phone was not necessarily designed for AR experiences and therefore has a lot of points of friction.

Unlike phones, glasses are a more seamless way of experiencing an environment since they lock the content to your peripheral vision. Glasses also make it easier to use gestures and freely move your hands, which introduces a new set of interactions that designers have already started to explore.

There is a race for consumer-friendly AR headsets, and the number of manufacturers is on the rise. It’s only a matter of time before an affordable headset makes waves in the consumer market.

With so much happening in this space, design and experience will continue to be the differentiator between these products.

Thank you for reading. If you have any additional thoughts, would like to suggest a topic or just chat about the future of design, you can reach me via Twitter or on my website.

Special thanks to Devon, Brendan, Dylan and Bhautik for the help and insight.

A designer’s guide to hardware and software for mobile AR was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
