Designing Against Misinformation

Facebook Design — Medium | Jeff Smith

By Jeff Smith, Product Designer; Grace Jackson, User Experience Researcher; and Seetha Raj, Content Strategist

Updated treatment for misinformation in News Feed using Related Articles

Scrolling through News Feed, it can be hard to judge what stories are true or false. Should you trust everything that you see, even if it comes from someone whom you don’t know well? If you’ve never heard of an article’s publisher, but the headline looks legitimate, is it?

Misinformation comes in many forms, including memes and links, and it can cover a wide range of topics, from pop culture to politics to critical information during a natural disaster or humanitarian crisis.

We know that the vast majority of people don’t want to share “false news.” We also know that people want to see accurate information on Facebook but don’t always know which information or sources to trust, which makes it difficult to tell what’s true and what’s false.

After a year of testing and learning, we’re making a change to how we alert people when they see false news on Facebook. As the designer, researcher, and content strategist driving this work, we wanted to share the process of how we got here and the challenges that come with designing against misinformation.

In December of last year, we launched a series of changes to identify and reduce the spread of false news in News Feed:

Disputed flags, our previous treatment for misinformation in News Feed

Over the last year, we traveled around the world, from Germany to Indonesia, talking with people about their experiences with misinformation. We heard that false news is a major concern everywhere we’ve been. Even though it makes up a very small fraction of the content shared on Facebook, reducing its spread is one of our top priorities.

Our goal throughout this research was to understand two things: what misinformation looks like across different contexts, and how people react to designs that tell them what they are reading might be false news. To hear from people with a variety of backgrounds, we visited people in their homes and talked with them during their everyday lives. We also conducted in-depth interviews and formal usability testing in a lab, with participants alone, with their friends, or in groups of strangers. In conducting this research, we found four ways the original disputed flags experience could be improved:

1. It didn’t make it easy for people to get context about what fact-checkers had found.
2. It required more than one fact-checker to review an article.
3. It only worked for articles rated “false,” not for other ratings.
4. Strong language and a red flag could create a negative reaction.

In April of this year, we started testing a new version of Related Articles that appears in News Feed before someone clicks on a link to an article. In August, we began surfacing fact-checked articles in this space as well. During these tests, click-through rates on the hoax article didn’t meaningfully change between the two treatments. However, the Related Articles treatment led to fewer shares of the hoax article than the disputed flag treatment. We’ve also received positive feedback from people who use Facebook. We found that the new treatment addresses the limitations above: it makes it easier to get context, it requires only one fact-checker’s review, it works for a range of ratings, and it doesn’t create the negative reaction that strong language or a red flag can have.

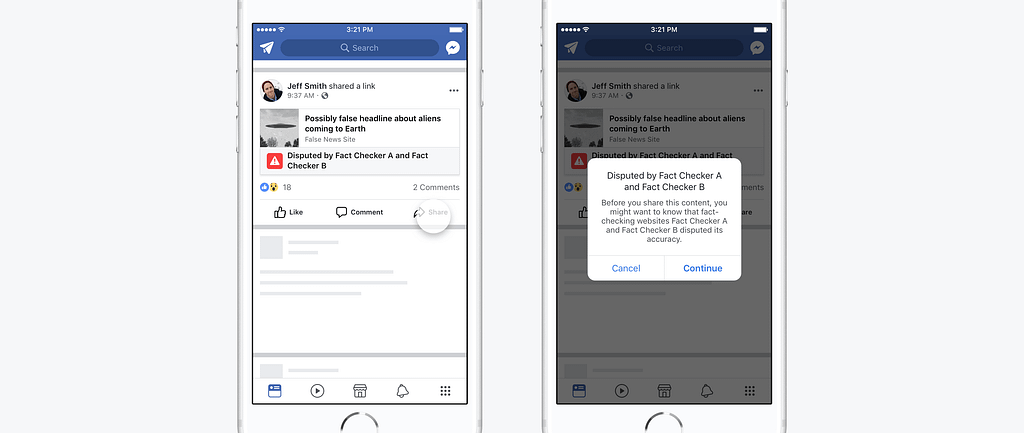

Updated treatment for misinformation in News Feed

Academic research supports the idea that directly surfacing related stories to correct a post containing misinformation can significantly reduce misperceptions. In our own work, we’ve seen that Related Articles makes it easier to access fact-checkers’ articles on Facebook. It also lets us show fact-checkers’ stories even when the rating isn’t “false.” If fact-checkers confirm the facts of an article, we now show that, too. Related Articles also allows fact-checked information to appear when only one fact-checker has reviewed an article. Because fewer fact-checkers need to weigh in, more people can see this crucial information as soon as a single fact-checker has marked something as false. Many people don’t know who the fact-checkers are, so we’ve placed a prominent badge next to each fact-checker to help people identify the source more quickly.

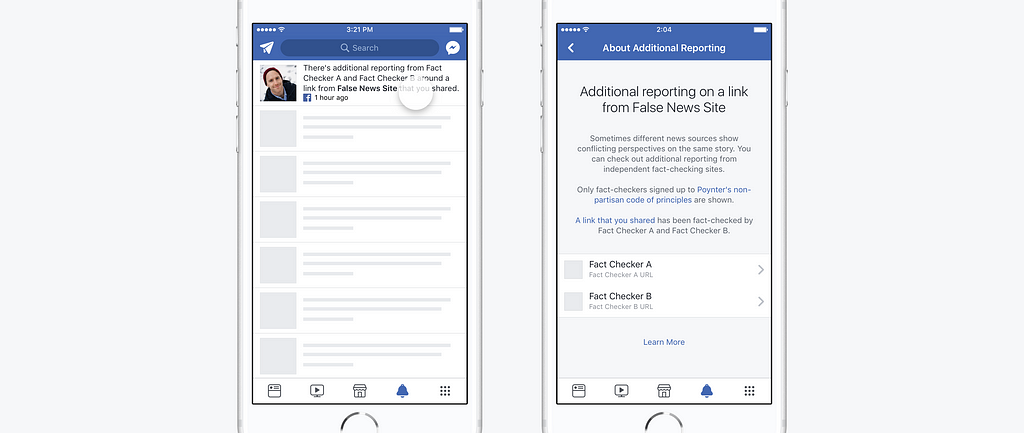

While we’ve made many changes, we’ve also kept the strongest parts of the previous experience. Just as before, as soon as we learn that fact-checkers have disputed an article, we immediately notify the people who previously shared it. When someone shares the content after that, a short message pops up explaining that there’s additional reporting on the story. Using unbiased, non-judgmental language helps us build products that speak to people with diverse perspectives.

We’ve kept the strongest parts of the previous experience, including notifications for shared content that later gets flagged by a third-party fact-checker

When people scroll through News Feed and see a questionable article posted by a distant contact or an unknown publisher, they should have the context to decide whether to read, trust, or share that story. As some of the people behind this product, we take seriously our responsibility to design solutions that support news readers. We will continue this work by testing new treatments, improving existing ones, and collaborating with academic experts on the complex problem of misinformation.

Designing Against Misinformation was originally published in Facebook Design on Medium.