Build Design Systems With Penpot Components

Penpot's new component system for building scalable design systems, emphasizing designer-developer collaboration.


Click here to see the original post by Associate Director of Marketing Josh Strupp.

Note: If you have little to no knowledge of AR, read this first: Augmented Reality: Everything You Need to Know for 2018

Over a billion people (between Android, iOS 11, Facebook mobile app, and now Snapchat users) have access to rich AR experiences on their mobile devices. Add Amazon AWS’ product to the picture and suddenly anyone with access to a modern web browser can get their hands on AR.

There are 5 major tech companies that, in one year, released 5 powerful platforms designed to create AR experiences. How are they different? What’s the advantage of using one over another? Which is best for agencies? Brands? Hobbyists? Developers?

In this post we go under the hood. As it goes with most modern consumer technology, new, competing platforms with nuanced differences influence the future of the product. Now, let’s get nuanced.

Overview: Apple throws around fancy terms like “visual inertial odometry” and made-up words like “TrueDepth” when describing ARKit. While this terminology may seem like a marketing gimmick (it is) and these features unique to Apple and only Apple (they aren’t), the technology itself is extremely impressive.

Boring but Important: ARKit was announced at WWDC in June 2017. AR experiences made with the platform work on any Apple mobile device that’s running iOS 11 and powered by an A9, A10, or A11 chip.

Key Features: ARKit, like its competitors, includes both facial tracking and world tracking. The real differentiators come tied to the hardware. ARKit can use the iPhone’s camera sensor to estimate the total amount of light in a scene, then apply estimated shading and texture to virtual objects. Google can do this too, but because of the variability in device features and operating systems across Android devices, creating a consistently efficient, adaptive experience is difficult. Also, the A11 is king.
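
To make the light estimation idea concrete, here is a minimal Python sketch. It is a simplified illustration, not ARKit’s actual algorithm: the function names and the tiny grayscale “frame” are hypothetical, and a real platform works on full camera images with far more sophisticated models. The idea is only that a brightness estimate taken from the camera can be used to darken or lighten a virtual object so it matches the room.

```python
# Simplified illustration only; this is NOT ARKit's algorithm.
# All names here are hypothetical and chosen for the example.

def estimate_ambient_intensity(gray_pixels):
    """Return the mean brightness of a frame, normalized to 0.0-1.0.

    gray_pixels: iterable of 8-bit grayscale values (0-255) from a camera frame.
    """
    pixels = list(gray_pixels)
    if not pixels:
        raise ValueError("frame has no pixels")
    return sum(pixels) / (255 * len(pixels))


def shade_color(base_rgb, intensity):
    """Scale a virtual object's RGB color by the estimated scene brightness."""
    return tuple(min(255, round(channel * intensity)) for channel in base_rgb)


dim_frame = [64] * 16    # hypothetical 4x4 frame from a dim room
lit_frame = [255] * 16   # hypothetical 4x4 frame from a fully lit room

dim = shade_color((200, 100, 50), estimate_ambient_intensity(dim_frame))
lit = shade_color((200, 100, 50), estimate_ambient_intensity(lit_frame))
```

In the dim frame the object’s color is scaled down to roughly a quarter of its base values, while in the fully lit frame it is left unchanged.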

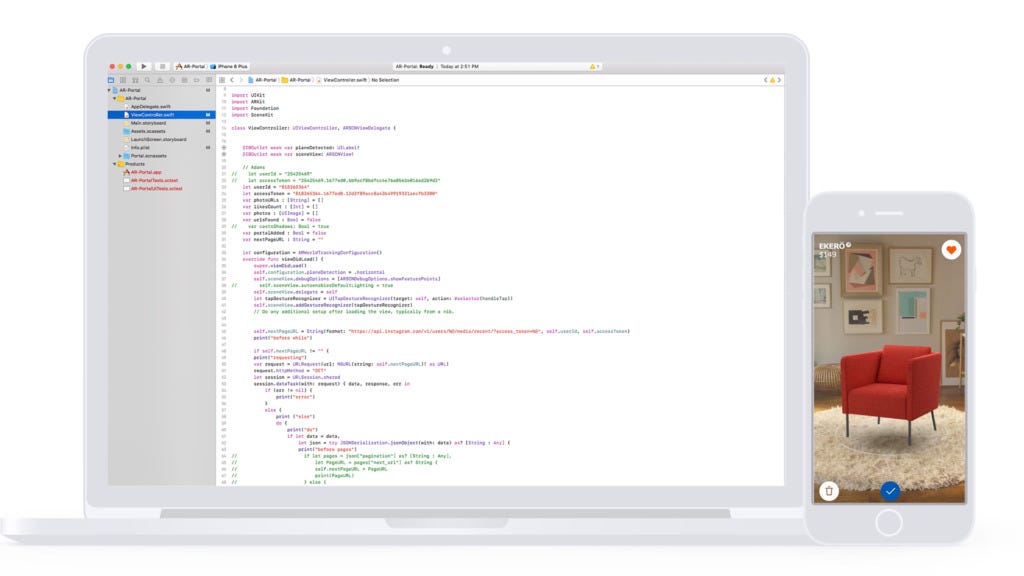

Bottom Line: Apple has once again made an extremely promising product that’s restricted to Apple users (ARKit experiences must be, with a few exceptions, built in Apple’s desktop development environment Xcode 9 using Apple’s coding language Swift and Apple’s 3D framework SceneKit for, you guessed it, Apple devices only). That said, what’s possible in ARKit is anything but restricted. There’s been an organic MadeWithARKit movement where developers have created portals, games, entertainment, consumer, and social applications. And while they haven’t advertised it (except as Animojis and Face ID), ARKit has built-in frameworks for facial augmentation. Expect Apple’s technology to encroach on Facebook’s competitive advantage soon.

Finally, we’ve made a portal of our own using ARKit. Check it out.

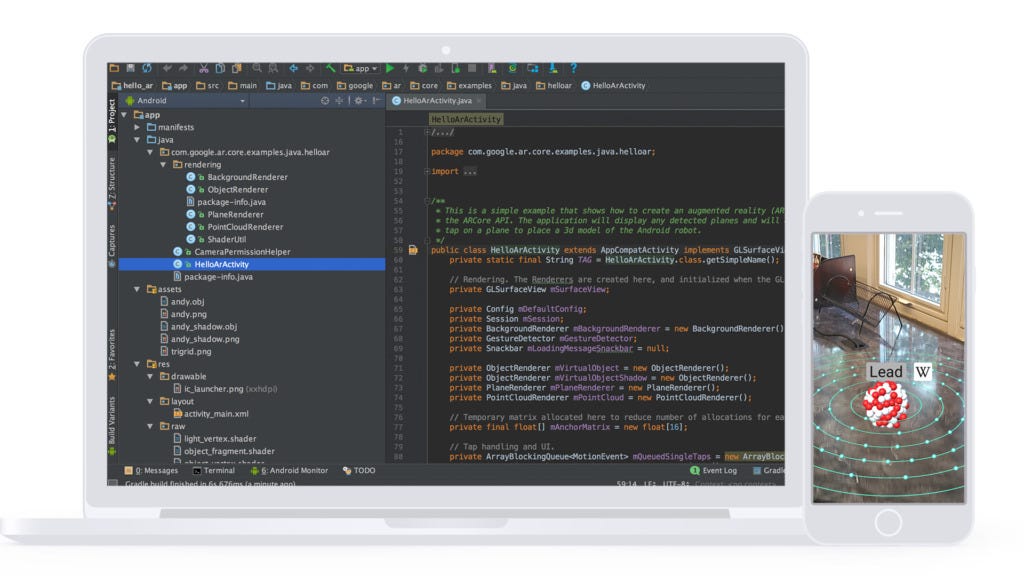

Overview: Apple made a mistake not claiming the name “ARCore” first (…Apple Core. Do you get it? Of course you do). The tech is more or less identical to Apple’s — surface detection, spatial orientation, and light estimation are all core to the functionality (okay I’ll stop). The real difference comes from how each company markets Kit/Core. When Apple introduced ARKit, there were gaming and retail demos. Google, on the other hand — in typical Google fashion — is all about using AR to make information more contextual and accessible. On ARCore’s site, Google touts the tech’s ability to “annotate a painting with biographical information about the artist.”

Boring but Important: ARCore was announced in August 2017. As of this article’s publish date, the platform runs on Android devices with Android N or later, and the developer preview only works with the Google Pixel, Pixel XL, Pixel 2, Pixel 2 XL, and Samsung Galaxy S8.

Key Features: Apple showcased ARKit applications in a silo — there was little mention of how AR will mesh with the phone’s other features from Face ID on the front end to their machine learning framework Core ML for developers. We know it’s intertwined, but Apple failed to advertise this. Google didn’t. There’s integration with VR building tools Tilt Brush and Blocks to create 3D objects, as well as their “VPS” technology (like GPS but for smaller, indoor spaces). But what’s more interesting is the potential for integration with Google Lens, an app designed to bring up relevant information using visual analysis. Those developing ARCore are “working very closely with the Google Lens team.” Imagine using Google Lens to recognize a pair of shoes you’re considering purchasing. The app checks to see what colors are available in stock. From there you can use AR to see how they look in a variety of colors. Like. Freaking. Magic.

Bottom Line: Again, technically speaking, ARKit and ARCore are more or less the same. Our first glimpses at this tech will be simple AR extensions of already-popular apps (e.g. your Ikea app now includes AR couch-placement) and ARCore apps are restricted to Android devices. But Google is giving us a glimpse into how they’ll differentiate themselves. First, they already offer SDKs for 5 development environments (Java, Unity, Unreal, C, and Web; it should be noted that ARKit can work in third-party tools like Unity, but Google’s got its own documentation while Apple has an “experimental” plugin). Second, they announced an AR sticker feature built directly into the phone’s camera (appropriately called AR Stickers). And finally, again, the potential of ARCore with Google Lens and Google Search is extremely promising.

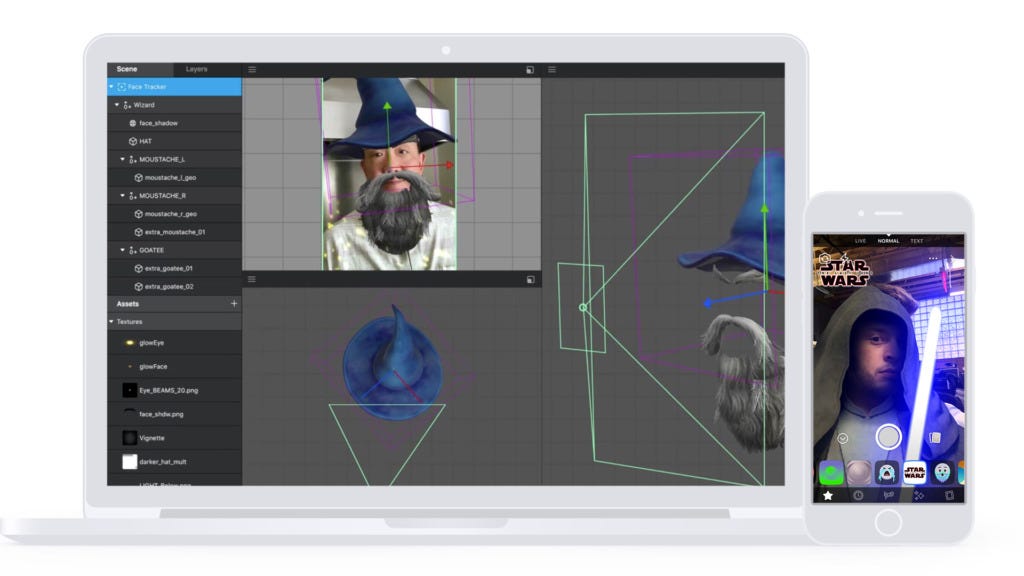

Overview: Facebook’s AR product is dramatically different from Apple or Google — and this makes sense. While GOOG/AAPL/FB all fall under the umbrella of “tech company,” Facebook is social-first. Also, they have a track record for stealing Snapchat’s thunder. Naturally, the result was AR Studio, an integrated desktop application for developers to create augmented reality experiences for the Facebook camera that — here’s the kicker — are mostly front-facing. No surface detection (yet), no light estimation (yet), just a ton of features that make selfies more shareable.

Boring but Important: AR Studio was announced in April, 2017 during F8 (Facebook’s annual developer conference). In December, the company announced that AR Studio was officially available to everyone — previously it was only available to a select group of brands and agencies. While anyone can create an AR Studio effect, Facebook acts as a gatekeeper in terms of what effects are displayed to a user (which is an important contrast from Snapchat’s platform). They will likely change depending on demographic information, location, branded effects, and more.

Key Features: High-level, it’s facial augmentation that sets AR Studio apart. Snapchat Lens Studio only lets creators build what it calls “World Lenses,” i.e. effects that sit in the world around you, not on your face; Snapchat reserves facial effects for brands and select agency partners. Built into Facebook’s platform are facial cues to trigger animations (e.g. raise your eyebrows), segmentation to separate people from the background (think green screen), particle systems (a fancy, scripted animation), and a LiveStreaming module that pings you when someone uses your effect on Facebook Live.

Bottom Line: Facebook AR Studio is for faces. Period. It’s a matter of time before the competition releases their own version, but Facebook has a head start. And while Google and Apple use programming-centric methods of development, Facebook AR Studio looks and feels more like motion graphics or 3D modeling software with an intuitive graphical interface (it’s almost identical to Snapchat Lens Studio, by the way). For now, you can only use effects within the Facebook App, but expect to see them integrated into the entire Facebook ecosystem (i.e. Instagram, WhatsApp, etc.).

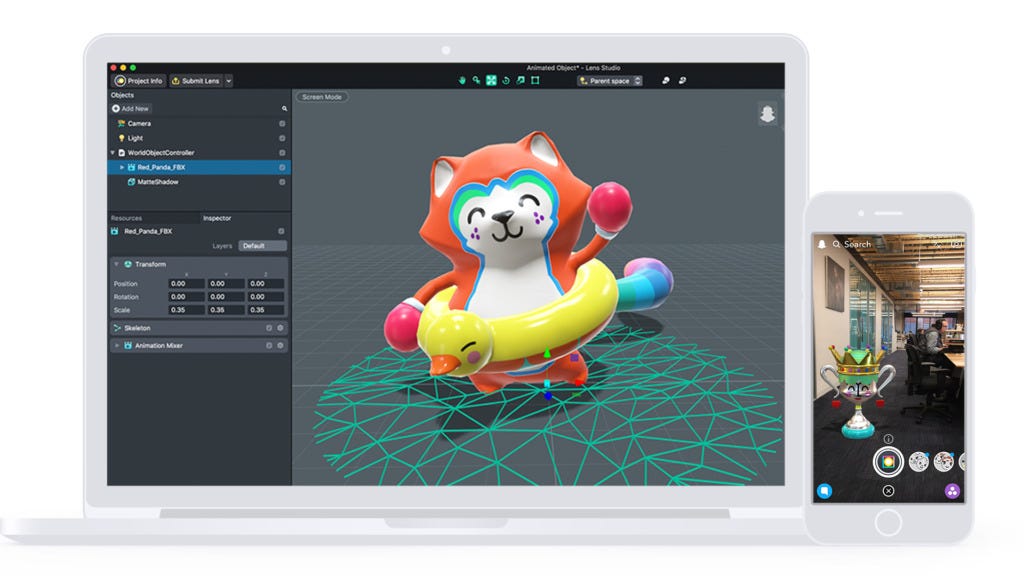

Overview: While Facebook’s AR Studio is all about enabling everyone to create facial augmentations, Snapchat — contrary to what you’d think — is not. Snapchat Lens Studio is open to everyone, but you can only create “World Lenses” (i.e. digital objects around you, no cat ears or giant watery anime eyes). It’s safe to assume that they’ll open “Face Lenses” up to developers eventually, but for now that’s restricted to brands and select agency partners.

Boring but Important: Snapchat made the announcement about Lens Studio in December, 2017 (months after most of the competition). Maybe this was always in the cards, or the competition got to them, but in any case Snapchat’s public release of Lens Studio means less development time and more ad spend (though they relinquish the massive fees — starting at $300k — for creating branded lenses). Don’t fret, there’s still money to be made (see below).

Key Features: One word: Snapcodes. You’ve seen these before. After you finish creating your lens, you submit it to Snapchat, which (should it approve the lens) generates a QR code. Snapchat users scan this “Snapcode” (also available as a link) to unlock the lens. This is free for consumers like you and me; brands and advertisers can pay to distribute them at a cost per thousand impressions that ranges from $8 to $20. Beyond Snapcodes, the software includes a lot of what you see in Facebook’s AR Studio (e.g. scripting, audio, animations, occlusion, etc.).
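
To put the cost-per-thousand-impressions range in perspective, here is a quick back-of-the-envelope sketch in Python. The function name and the 500,000-impression campaign size are hypothetical; only the $8 to $20 range comes from the figures above, and actual pricing may well differ.

```python
def distribution_cost(impressions, cpm_usd):
    """Cost in USD of paid distribution at a given CPM (cost per 1,000 impressions)."""
    if impressions < 0 or cpm_usd < 0:
        raise ValueError("impressions and CPM must be non-negative")
    return impressions / 1000 * cpm_usd


LOW_CPM, HIGH_CPM = 8, 20   # range quoted in the article, in USD
impressions = 500_000       # hypothetical campaign size

low = distribution_cost(impressions, LOW_CPM)    # 4000.0
high = distribution_cost(impressions, HIGH_CPM)  # 10000.0
print(f"{impressions:,} impressions: ${low:,.0f} to ${high:,.0f}")
```

Under these assumptions, half a million impressions would run somewhere between $4,000 and $10,000.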

Bottom Line: Of all the AR platforms out there, Snapchat’s feels like the only thing close to a “turnkey” solution; it’s the only one with a clear user journey from concept to broadcast. Out of the box you get templates that guide you to creating your experience (e.g. static object, animated object, interactive, 2D effects). Once you’re done, there’s a “Submit Lens” button in the nav. It’s that simple. Finally, unlike Facebook where you have to download a separate app and Google/Apple where you test in a dev environment, with Snapchat it’s as easy as opening Snapchat and scanning the Snapcode generated in Lens Studio.

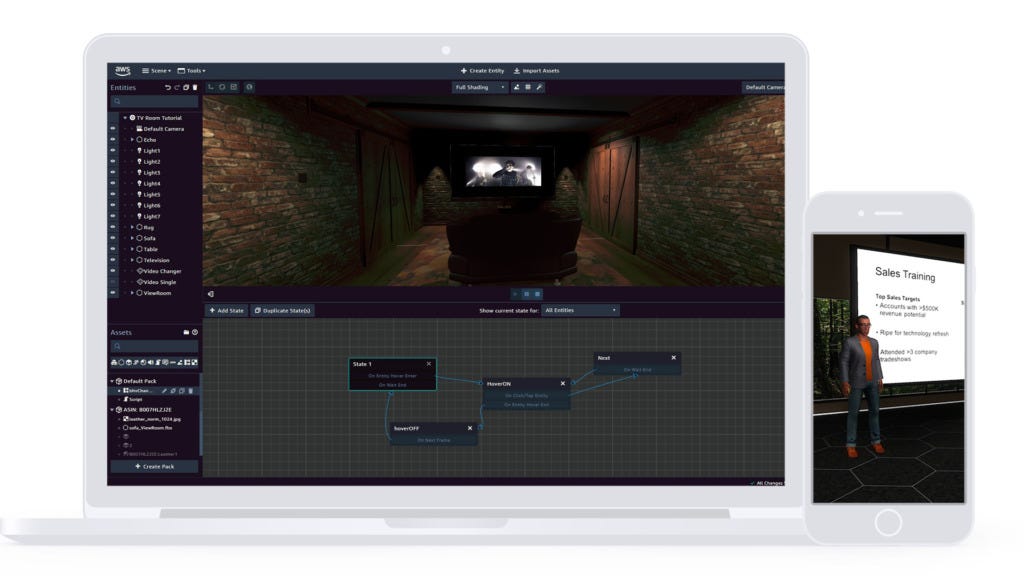

Overview: Sumerian is Amazon’s response to the AR/VR platform race, but at first glance you might not make the connection to the kits, cores, and studios of the world. While other tech giants are targeting creators (i.e. developers, designers, hobbyists, agencies, and brand marketing teams), Amazon is targeting corporations. The product is described as a solution to many logistical problems global companies face: expensive employee education, inefficient training simulations, and field service productivity. In other words, use Sumerian to eliminate overhead! Note: they don’t explicitly say the product is for large companies, but it’s an Amazon Web Services product. Sumerian is listed amongst dozens of storage, database, development, and security services, and companies using AWS include Netflix, Intuit, Hertz, and Time, Inc. It’s safe to say this product is for the big guns.

Boring but Important: Sumerian was announced in November 2017 as part of an AWS event. Like many AWS products, it’s free to use; you pay only for the storage for what you create. As for the name, Sumerian is the language spoken by the people of Sumer in Mesopotamia. It’s considered to be the basis for many spoken languages used today. Amazon hopes that Sumerian can be the basis for all AR/VR/MR development today by being platform and programming-language agnostic.

Key Features: Sumerian’s real differentiator: it’s “platform-agnostic”. Unlike the four platforms above, Sumerian is aiming to be the blood type O of the AR development world: you can run whatever you build on Rift, Vive, iOS devices (yes, there’s support for ARKit’s framework) and, soon, Android devices. This is possible because you edit in-browser. Sumerian uses WebGL and WebVR libraries (snippets of pre-written code that make it easier to render 2D and 3D graphics in-browser), so you can import, edit, script, and storyboard immersive 3D scenes using one central, universal language. There’s one more feature that’s worth mentioning: Hosts. Hosts are like creepy, highly intelligent Sims characters. They’re integrated into the platform, where you can design them much like you would a video game character. Integrated with two of Amazon’s AI tools (Lex and Polly), Hosts are there to guide you through different scenarios (i.e. they’re there to replace onboarding managers, trainers, consultants, educators, etc.).

Bottom Line: Sumerian is in the same universe as its competitors, but while Snap, Apple, Google, and Facebook share a world where consumer is king, Amazon chose a nearby planet where enterprises rule. It’ll be interesting to see how developers choose their platforms: while they all do roughly the same thing, AWS is clearly looking to be a business solution rather than an entertainment/creative gimmick.
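
For readers who like to see the comparison as data, the five platforms can be summarized in a small Python lookup. This is only a rough sketch of the distinctions drawn in this post: the field names and the “code” / “gui” / “browser” labels are our own shorthand, not official terminology from any vendor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    vendor: str
    runs_on: str
    authoring: str  # shorthand: "code", "gui", or "browser"
    audience: str   # shorthand: "consumer" or "enterprise"


# Summary of the article's comparison; labels are simplifications.
PLATFORMS = [
    Platform("ARKit", "Apple", "iOS devices", "code", "consumer"),
    Platform("ARCore", "Google", "Android devices", "code", "consumer"),
    Platform("AR Studio", "Facebook", "Facebook camera", "gui", "consumer"),
    Platform("Lens Studio", "Snap", "Snapchat", "gui", "consumer"),
    Platform("Sumerian", "Amazon", "browser, headsets, iOS", "browser", "enterprise"),
]


def shortlist(authoring=None, audience=None):
    """Return platform names matching the given filters (None means 'any')."""
    return [
        p.name
        for p in PLATFORMS
        if (authoring is None or p.authoring == authoring)
        and (audience is None or p.audience == audience)
    ]


print(shortlist(authoring="gui"))        # ['AR Studio', 'Lens Studio']
print(shortlist(audience="enterprise"))  # ['Sumerian']
```

A designer who wants a graphical tool lands on AR Studio or Lens Studio, while an enterprise team is pointed to Sumerian.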

Be Critical: Does your application really need augmentation? Remember when IoT became the buzzworthiest thing in tech? First it was practical, then came the internet-connected cups, crock pots, and credit cards. We (being humanity) will inevitably make the same mistake. But if you’re reading this right now, really ask yourself: “does my idea/product/app need an augmented reality component?” If you answered yes, move to the next hot take.

Be Different: With any app, it’s hard not to reinvent the wheel. How many times have you heard “I have a really great app idea” and suddenly found yourself in the middle of an elevator pitch for a note-taking app or an “Uber but for X” concept? Blech. The same goes for AR. Once you’ve identified a useful, feasible AR component, figure out how you can differentiate: interactive elements, high quality 3D design, narrative user flows, and audio integration are just a few ways to stand out. If you’re still with me, move to the third and final hot take.

Be Considerate: Details. Details. Details. You can go with any platform and concept the best damn application of AR since rainbow vomit, but it won’t matter without a quality designer. This is the missing detail: the SDKs and user interfaces can make it easy for a developer or hobbyist to build an AR experience, but to make one that’s really impactful it needs to be beautiful. In other words, it needs to augment reality by blending in with reality, and that requires some real 3D design. Don’t overlook this detail.

Get in touch: [email protected]. Cheers!
