Inclusive Design: Enhancing grocery shopping experience for users with low-vision

UX Planet — Medium | Vichita Jienjitlert

To celebrate the #WorldUsabilityDay theme of Inclusion through User Experience, I’d like to share a project from my Inclusive Design class last semester. My partner and I designed a technology to help alleviate the problems that people with low vision face while grocery shopping. We worked closely with our participant, Em, through participatory design.

My Role: Led participatory design sessions. Created Tablet lo-fi prototype. Implemented the Smartwatch hi-fi prototype. Created storyboards.

Duration: Spring 2017, INST704 Inclusive Design in HCI

Tools & Methods: Participatory Design, User Testing, InVision, Arduino, Adafruit Flora

Team Members: Lisa Rogers

For our first interview with Em, we focused on getting to know one another, establishing rapport, and introducing the project. We discussed several potential project ideas and let Em share with us her everyday problems. After contemplating several alternatives, we decided to dive deeper into the grocery shopping experience.

Our Participant

Em is a graduate student who travels around daily with her guide dog, Rem. She developed low vision in her childhood due to Stargardt’s disease, a progressive degeneration of the small area in the center of the retina that is responsible for sharp, straight-ahead vision. Because she likes to select her own produce, she prefers to visit her usual grocery store in person every week.

Problem Overview

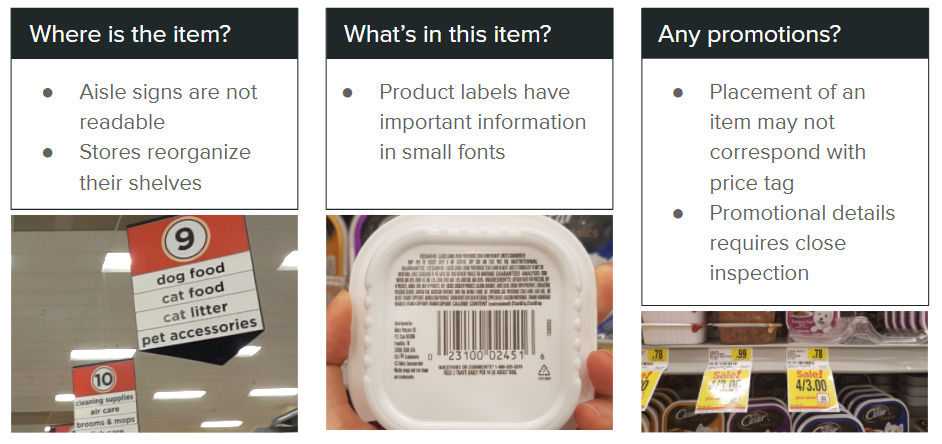

From our initial interview session, we learned how difficult it is for people with low vision to shop for groceries in a store. On each visit, they often have trouble finding an item in the store, finding its price and any available promotions, and reading the details printed on it, such as nutritional information, ingredients, or allergens.

Problems reported by our participant in her grocery shopping experience with low vision.

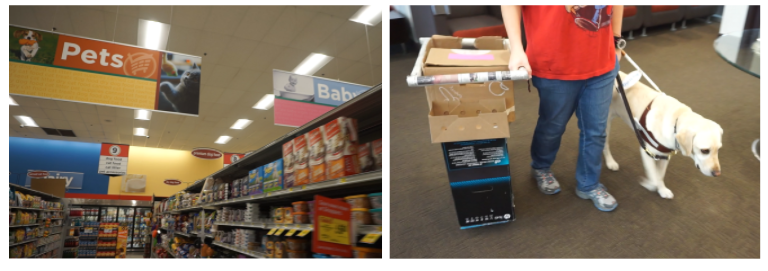

Context of Use

Our technology is meant to be used in the grocery store. Because our participant always brings her guide dog with her, Em’s left hand holds the harness while her right hand pulls the shopping cart behind her or at her side.

Grocery store environment (left) and a demonstration of how Em handles the cart (right).

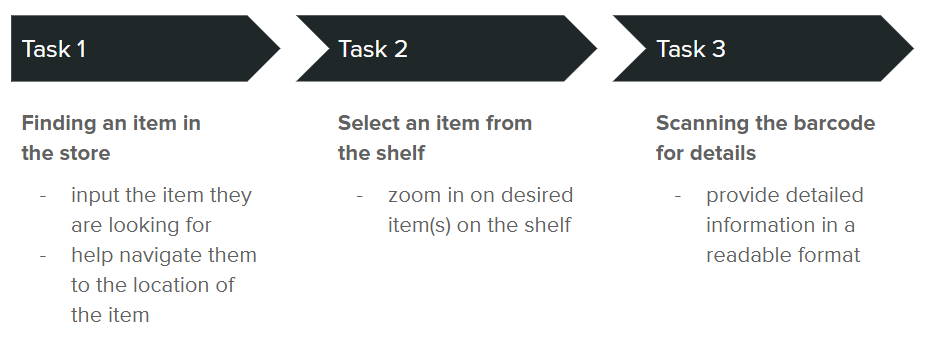

To begin our ideation process, we defined three representative tasks for our solution to support. These tasks address the problems we discovered in our interviews.

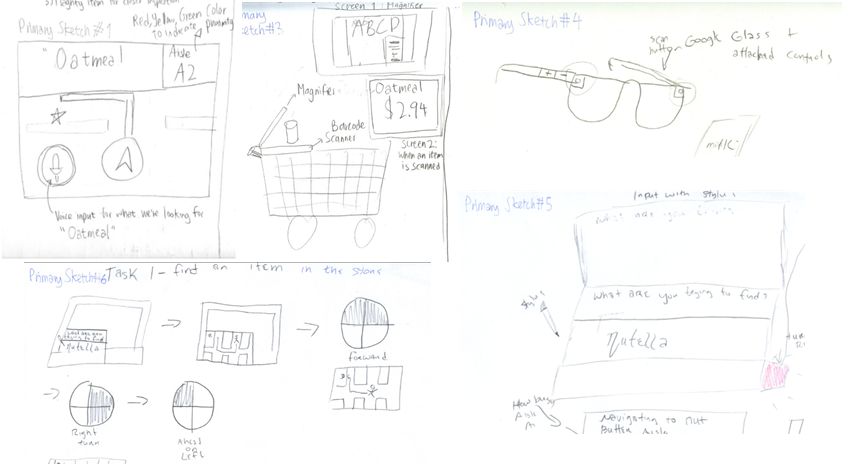

Once we had a list of primary tasks, we created primary sketches of potential solutions. We explored several system input methods (handwriting, voice, touchscreen interfaces) as well as a variety of output methods (screen monitor, smartwatch, light signals, AR glasses).

Examples of our Primary Sketches.

After weighing the pros and cons of our initial ideas, we decided to build upon primary sketch #1 (tablet) and primary sketch #6 (smartwatch). The mounted-tablet prototype provides a large screen that Em can read easily, without burdening her with additional things to carry. The smartwatch is a wearable alternative that does not take up hand space. We sketched out how each prototype would support our three tasks.

Examples of our Secondary Sketches.

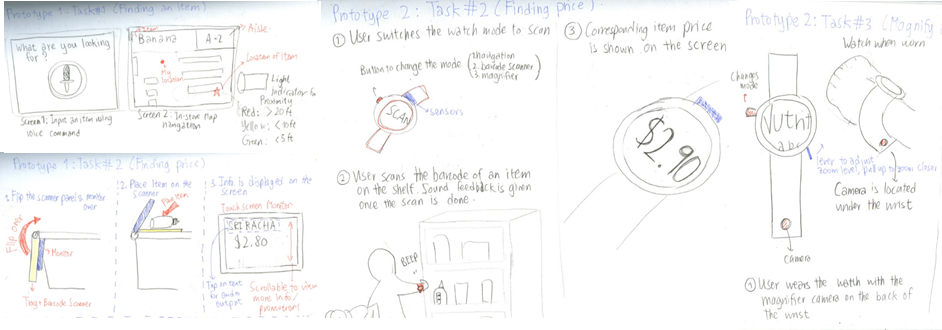

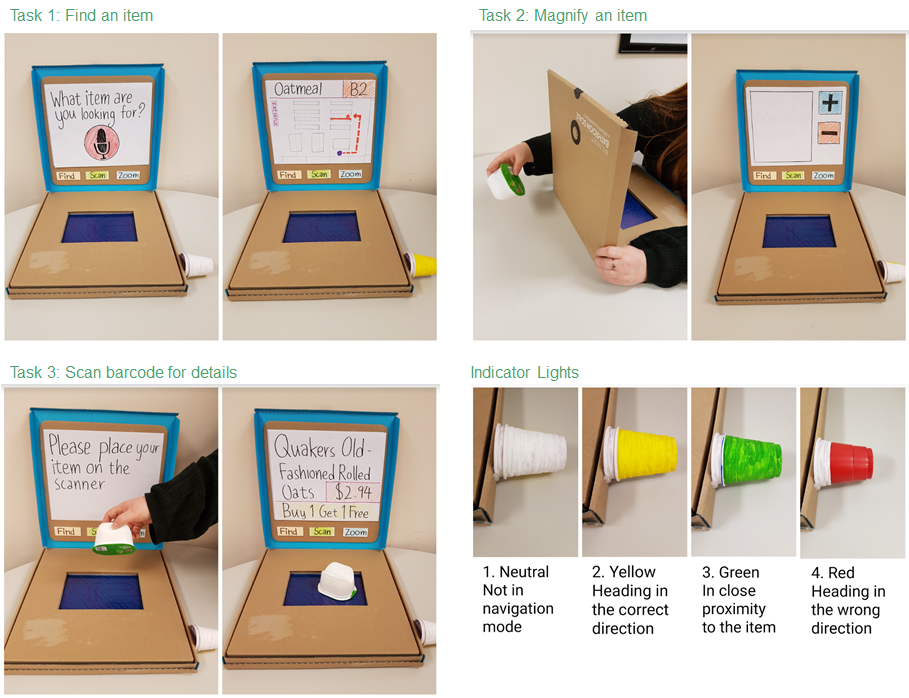

Prototype 1: Tablet

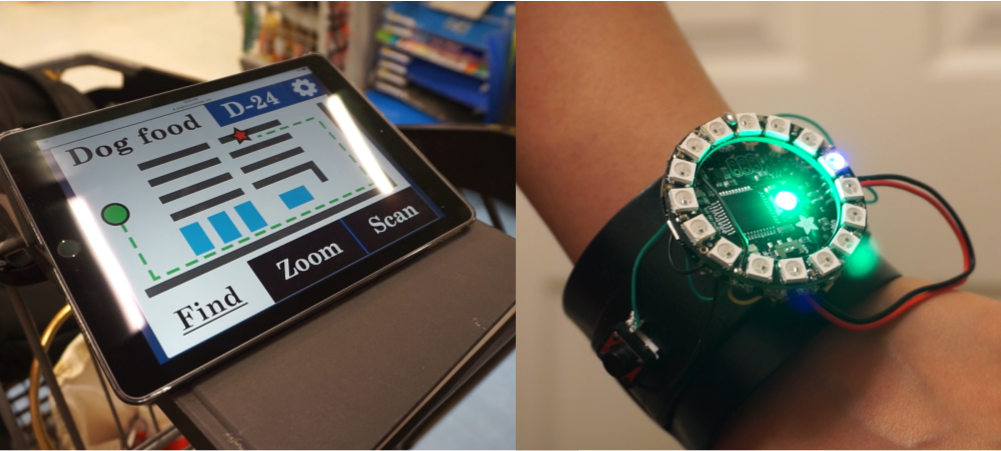

The first prototype is a tablet-based solution that can be mounted onto the shopping cart. It takes voice input and has a camera at the back and a scanner in the front. The device also provides indicators that tell the user whether she is currently heading in the right direction.

Tablet Lo-fi Prototype

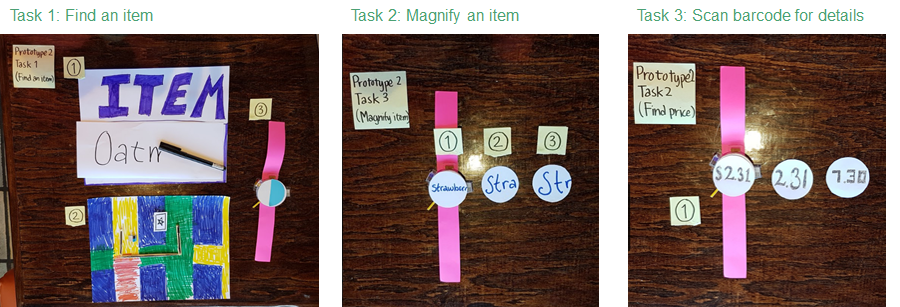

Prototype 2: Smartwatch

The second prototype is a wearable solution that the user can wear on the same hand she uses to hold her guide dog’s harness. It also has a separate component for handwriting input and a screen. Buttons are used for switching between modes, while the toggle adjusts the zoom level.

Smartwatch Lo-fi Prototype

Lo-fi Prototype Evaluation

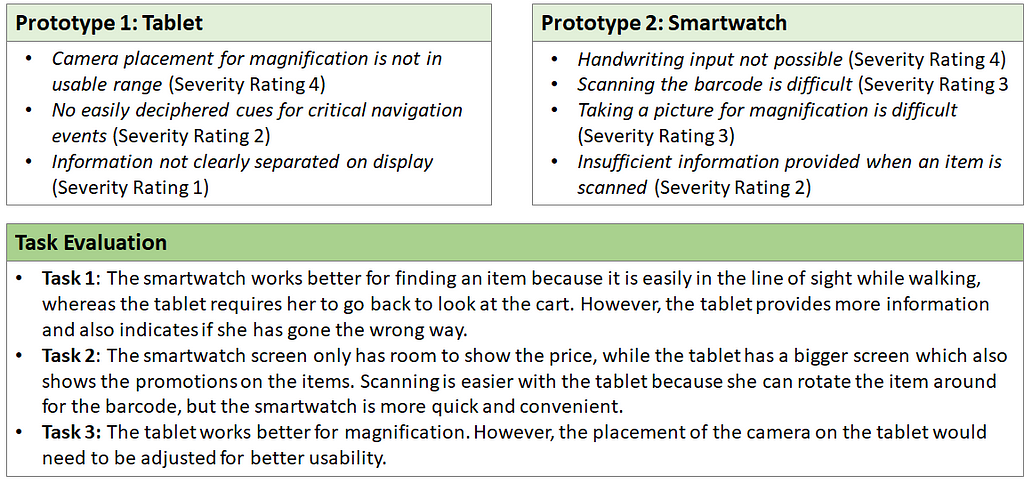

We met with Em and asked her to walk through each of the prototypes with the three tasks. We then asked her to evaluate how well each task was supported, as well as which prototype she thought worked better for that task. At the end of the session, we asked Em to evaluate the prototypes against several usability metrics, including learnability, efficiency, memorability, and error prevention. We then identified and rated the critical incidents found in each prototype.

Critical incidents found during the evaluation session and task evaluation results.

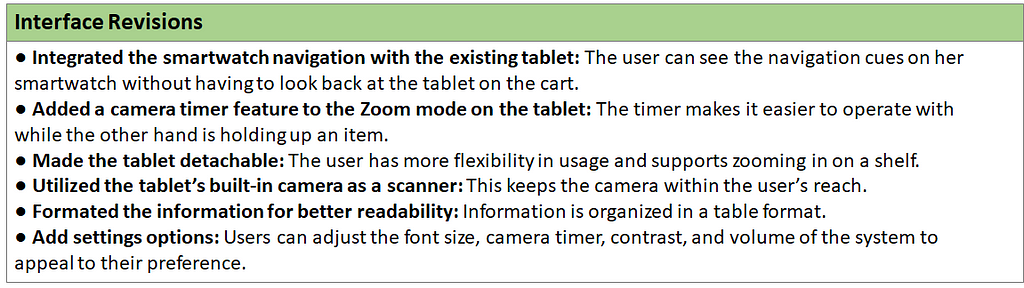

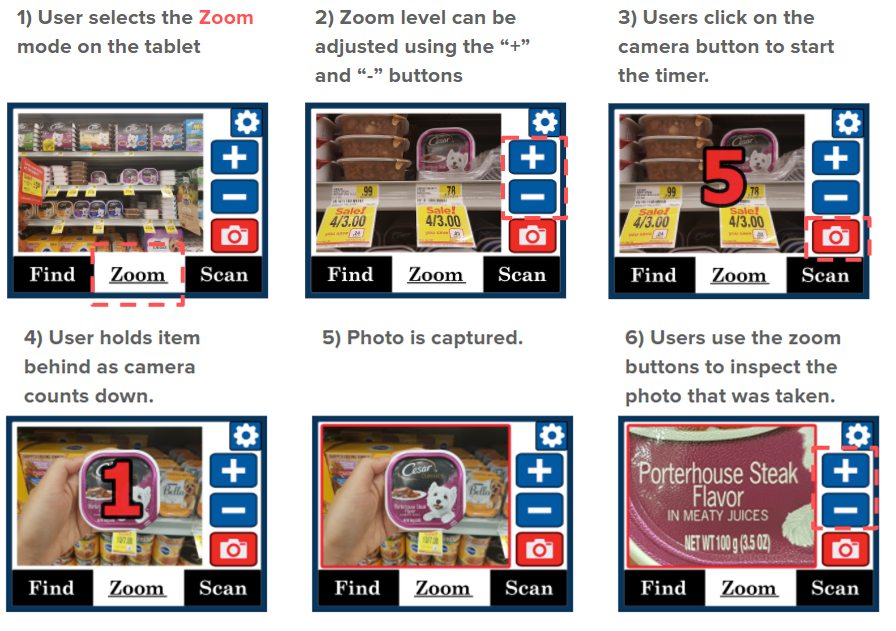

Neither of our two low-fidelity prototypes completely met the user’s needs in completing the three tasks. The smartwatch had very limited screen space, while the tablet was not convenient. By using the tablet for stationary functions that require a lot of reading and the watch for interactions on the go, we were able to create a system that supports all three tasks in an intuitive way.

Our high-fidelity prototype has two main components: the tablet and the smartwatch. The tablet has three tabs for switching between modes. We created storyboards to show how the two components work together to support each task.

Task 1: Find an item

Storyboard for Finding an item.

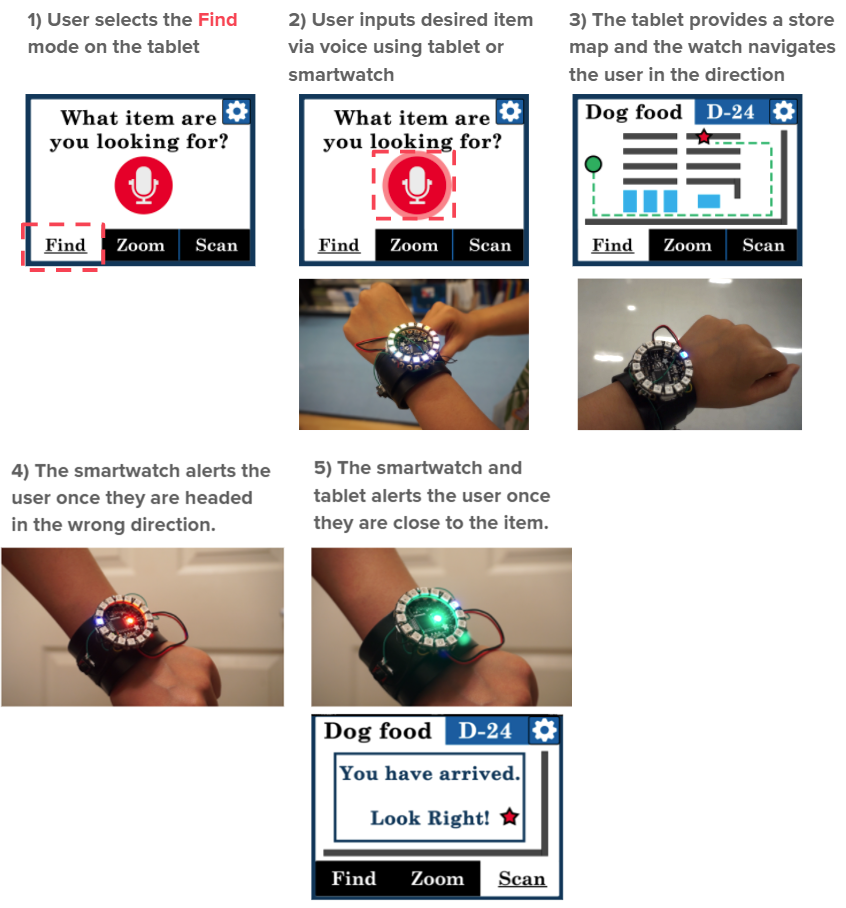

Task 2: Magnify an item

Storyboard for Magnifying an item.

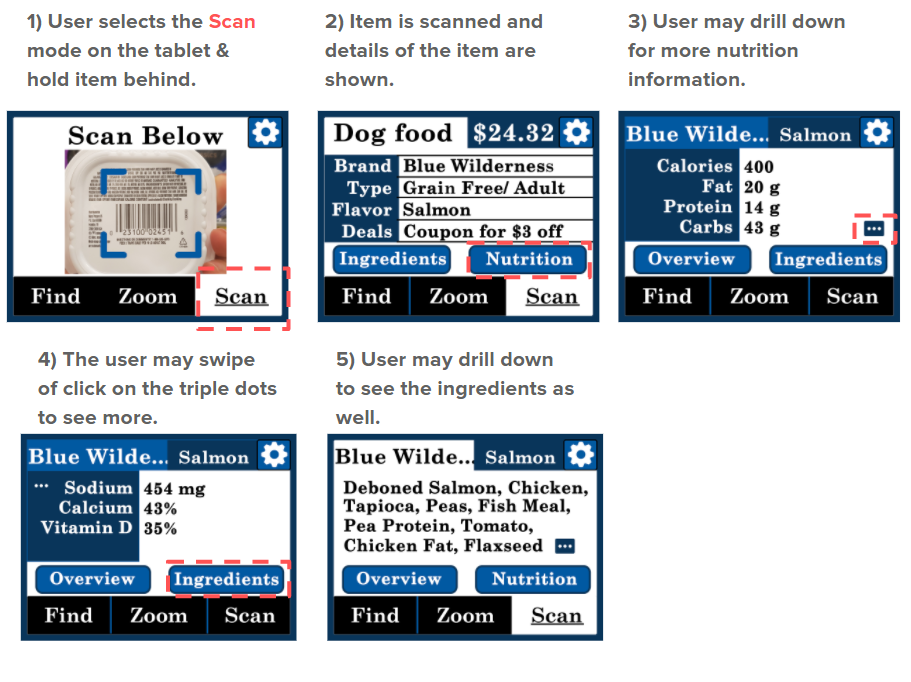

Task 3: Scan an item

Prototyping Tools

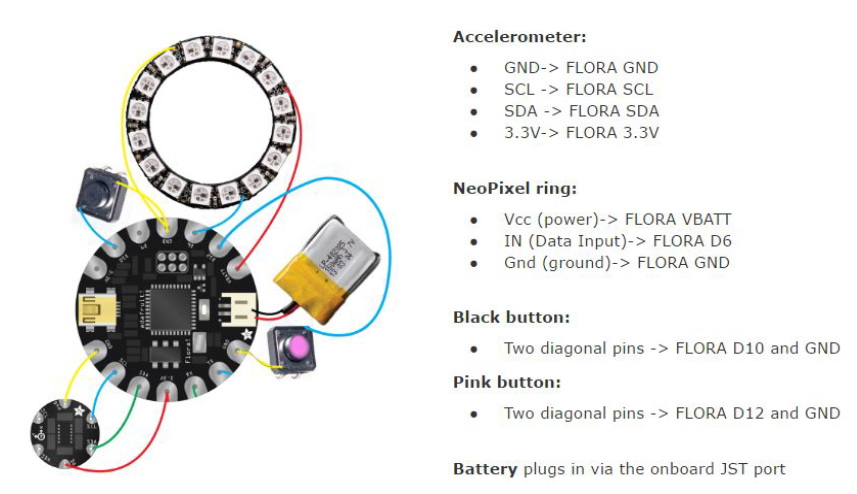

The tablet prototype was created in InVision, while the smartwatch was built on the Adafruit Flora microcontroller and programmed in C using the Arduino IDE. The smartwatch prototype uses a NeoPixel Ring (16 RGB LEDs) and an accelerometer and compass sensor (LSM303), and is powered by a 3.7V lithium-ion polymer battery.
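To give a feel for how a compass sensor and a 16-LED ring can work together, here is a minimal sketch of one possible mapping, not our actual firmware. It assumes the ring is meant to point toward the item being searched for, that LED 0 faces straight ahead with indices increasing clockwise, and that the sensor is held level (no tilt compensation from the accelerometer). The names `headingDegrees`, `ledForBearing`, and `kRingLeds` are hypothetical. It is written as plain C++, which is what Arduino sketches compile as, so it can be tried off the board.

```cpp
#include <cmath>

// Number of LEDs on the NeoPixel Ring mentioned in the article.
constexpr int kRingLeds = 16;

// Convert horizontal magnetometer readings into a compass heading in
// degrees, in the range [0, 360). Assumption: the sensor is held level,
// so tilt compensation with the accelerometer is skipped.
double headingDegrees(double magX, double magY) {
    constexpr double kPi = 3.14159265358979323846;
    double deg = std::atan2(magY, magX) * 180.0 / kPi;
    if (deg < 0.0) deg += 360.0;
    if (deg >= 360.0) deg -= 360.0;  // guard against rounding up to 360
    return deg;
}

// Choose the LED that points toward the target.
// Assumption: LED 0 faces straight ahead and indices increase clockwise.
int ledForBearing(double currentHeading, double targetBearing) {
    // Angle of the target relative to where the wearer is facing.
    double relative = std::fmod(targetBearing - currentHeading, 360.0);
    if (relative < 0.0) relative += 360.0;

    const double degreesPerLed = 360.0 / kRingLeds;  // 22.5 degrees each
    // Round to the nearest LED and wrap index 16 back to 0.
    return static_cast<int>(std::lround(relative / degreesPerLed)) % kRingLeds;
}
```

On the device, a loop would read the LSM303, call `headingDegrees`, and light the pixel returned by `ledForBearing`. Rounding to the nearest LED keeps the indicator within about 11 degrees of the true direction, which is as fine as a 16-LED ring can show.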

Smart Watch Prototype Circuit Diagram.

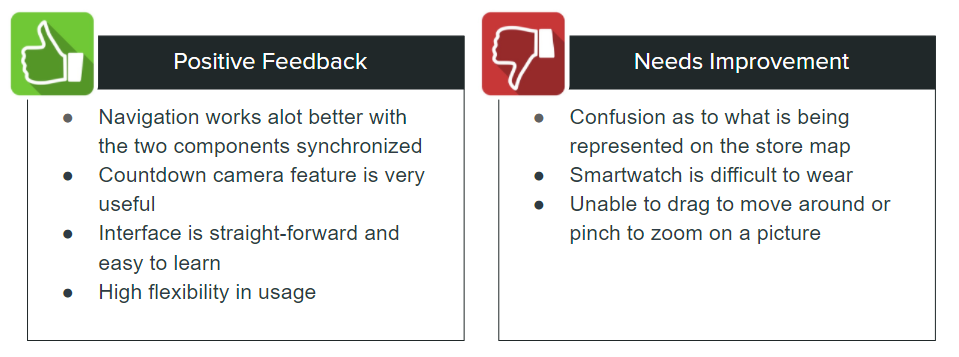

Evaluation

In this session, we started by reviewing the three representative tasks and asked our participant to explore the prototype independently so that we could measure its learnability. We then demonstrated the smartwatch controls and conducted user testing. We ended the session with an open discussion of how well the final prototype supported the three tasks and how it could be improved.

Overall, Em found our interface very straightforward and easy to learn. She was able to understand most of the tablet component without the researcher’s explanation. As for the smartwatch, she was able to correctly decipher what the LED lights represent. She liked the addition of the settings options because they allowed for more flexibility in usage.

This has definitely been one of the most fun and challenging projects I’ve worked on. It was my first time prototyping with a hardware device (soldering is an ART!). Despite numerous difficulties, I am very happy with what we achieved and the friendship that formed over the course of this project.

Here are some key lessons that I learned:

If you like this article, please feel free to give me some applause *clap clap*.

Thank you, Em, for being such a great participant, and Professor Leah Findlater for supervising our project. Thanks, Lisa, for all your hard work.

Please feel free to reach out to me if you want to learn more about our project.

Find me on LinkedIn, or stop by my Portfolio to see my other works!

Inclusive Design: Enhancing grocery shopping experience for users with low-vision was originally published in UX Planet on Medium, where people are continuing the conversation by highlighting and responding to this story.
