
uxdesign.cc – User Experience Design — Medium | Sandeep Jagtap

Prompt: “Imagine a mobile app that enables you to impulsively identify and purchase a garment or accessory that you see in real life. Design an end-to-end flow covering the experience from the moment of awareness to purchase completion.”

Time: This challenge took about 24 hours.

Tools: Pen and paper, Balsamiq, Sketch.

Constraint: Available technology: smartphone with camera.

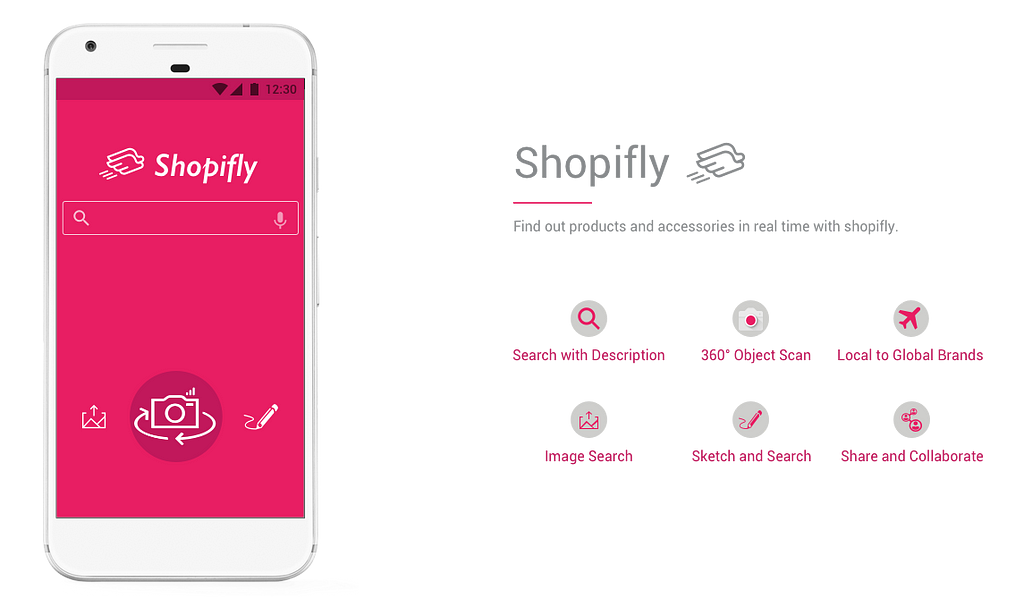

Final Design


Result: A mobile experience concept that lets you buy garments or accessories with the help of existing technology and a possible extension to a 360° camera.

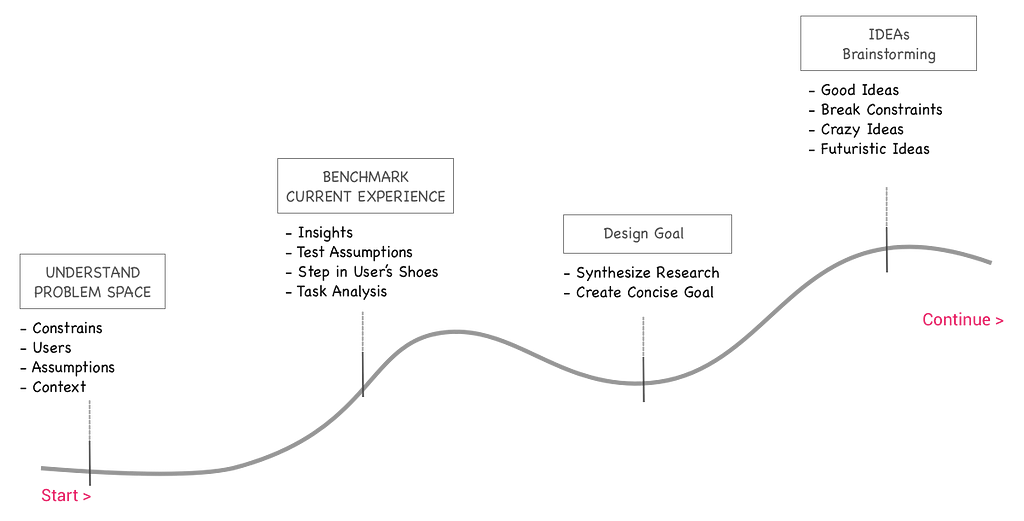

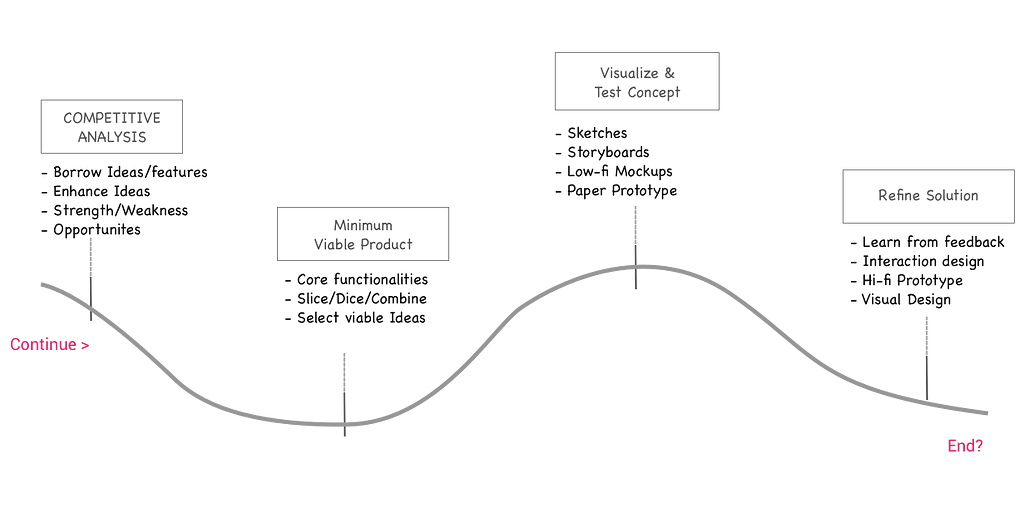

As in any design process, understanding the problem space is the most important step. Understanding and imposing key constraints, visualizing the user in context, and validating assumptions shaped my view of the problem space. The aim was to come up with a concise design goal for brainstorming.

The overall design process took around 15–20 hours. Most of that time was spent on defining the problem space and on brainstorming.

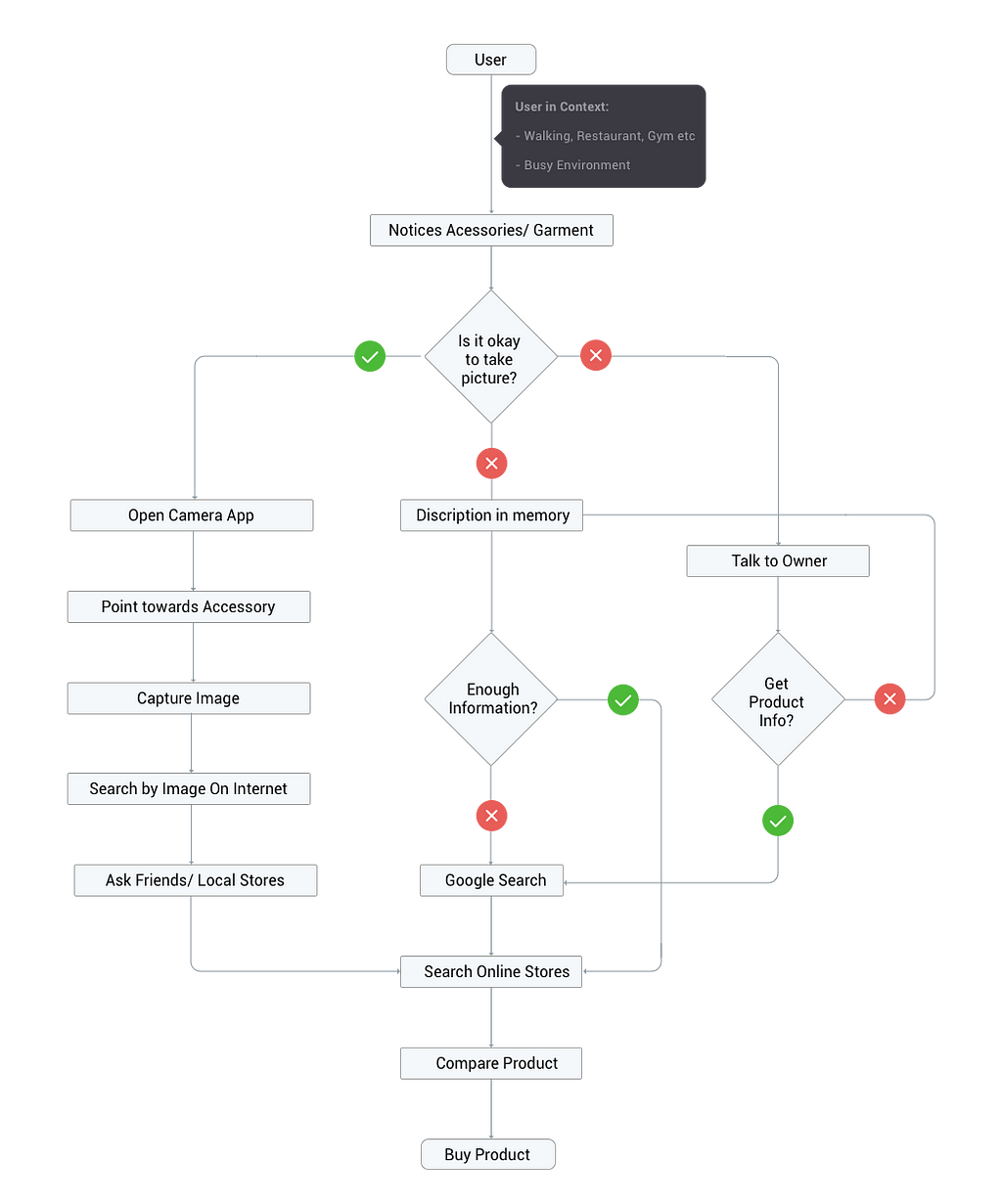

My research process began by mapping out the current user experience. I wanted to observe a user in context, so I asked a friend who loves shopping (who doesn't?) to do a role play. I asked her to take a walk with me in downtown Indy. After a brief walk, I pointed out a girl wearing a nice pair of shoes and asked my friend what she would do if she wanted to buy those exact shoes right now. Her response was insightful. She said:

“If the person is approachable, I would directly ask her about the shoes else I will snap a picture of it, but sometimes it is difficult to get good picture so I generally google”

I came up with the following flowchart for the actions she described.

Current User Experience


I also gathered a number of other insights during research.

After analyzing the user research insights and constraints, I created a concise goal for brainstorming design solutions. The goal captures the user's high-level objective in a specific context, together with the imposed constraints.

TO design a mobile experience IN a constantly changing environment WHERE the user notices things on impulse or by accident, has limited time to observe a garment or accessory, and must respect other people's privacy

IN ORDER to buy them with the help of a description in the form of an image or text

Brainstorming Ideas


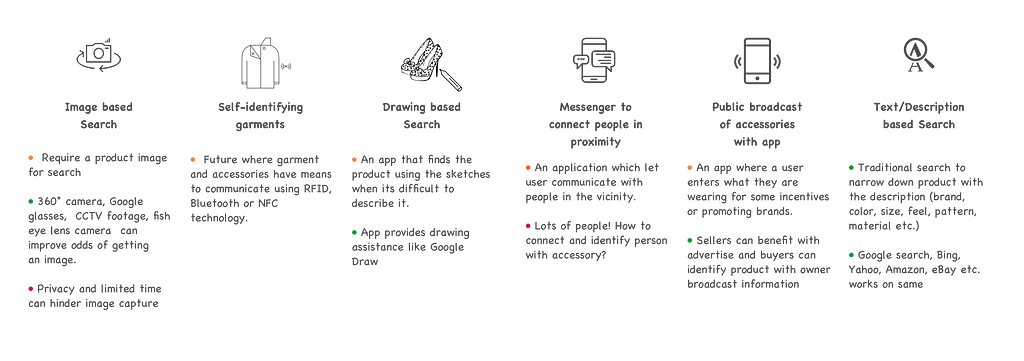

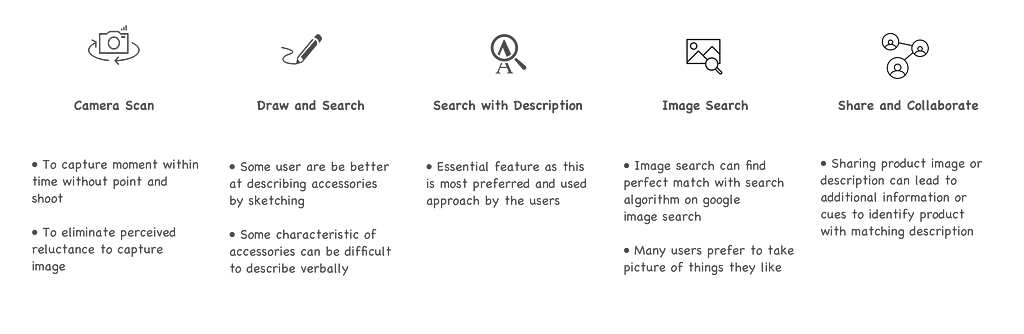

I started brainstorming possible design solutions, using the design goal as a torchlight. I often tried breaking the constraints to see what possibilities lay beyond them. Some solutions, like image search and text search, were fairly obvious. Sketch-based search and camera search were somewhat innovative. Self-identifying garments and accessories were a crazy idea.

Final Idea!!


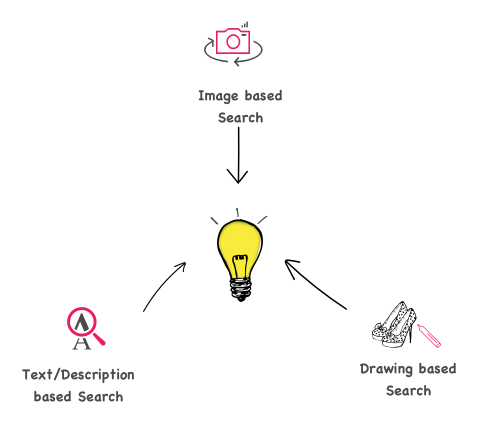

Based on viability, the image-based and description-based search solutions were picked for further exploration. Image search was strengthened with AI-assisted sketching and advanced camera technology (a fisheye lens or spherical camera) to capture a 360° view. The final solution was a mobile application, as imposed by the constraint in the design prompt. If not for the technology constraint, I would definitely have ventured into the realm of augmented reality using Google Glass. I also worked under the assumption that image and text-based search is good enough to recognize a product and match it to listings from local and global stores.

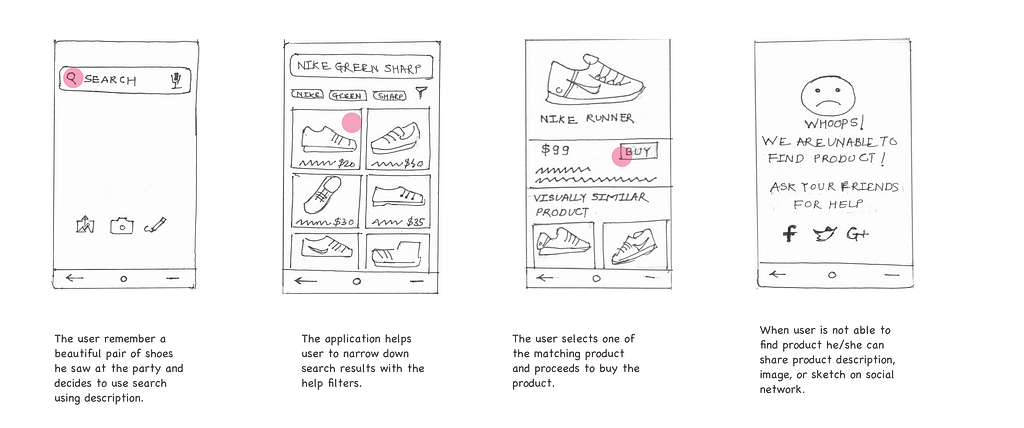

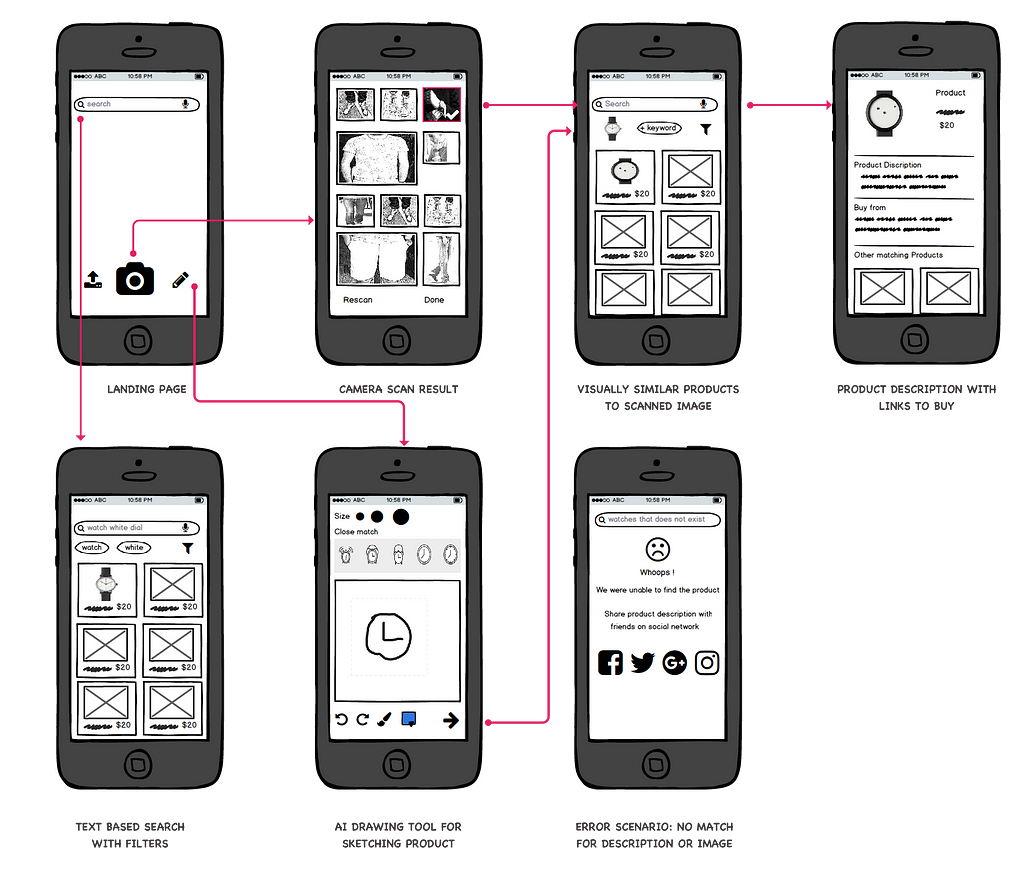

To design the individual interactions, I looked at a couple of scenarios based on the user's context.

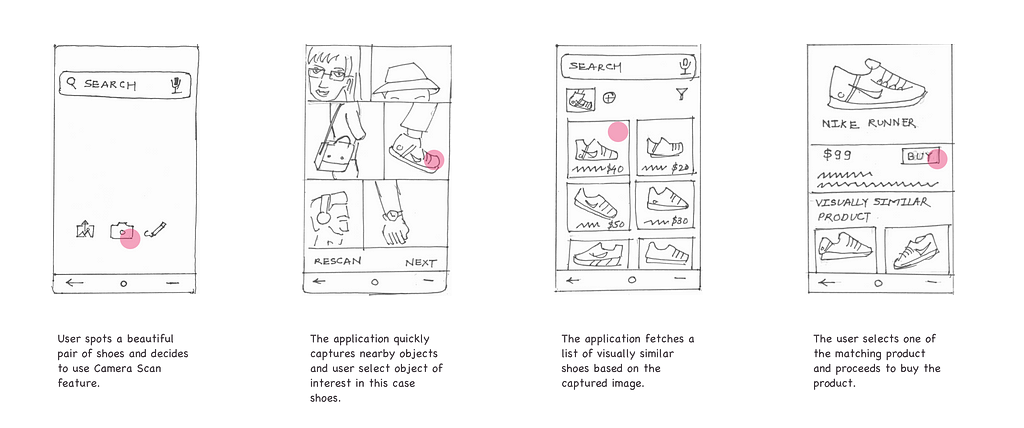

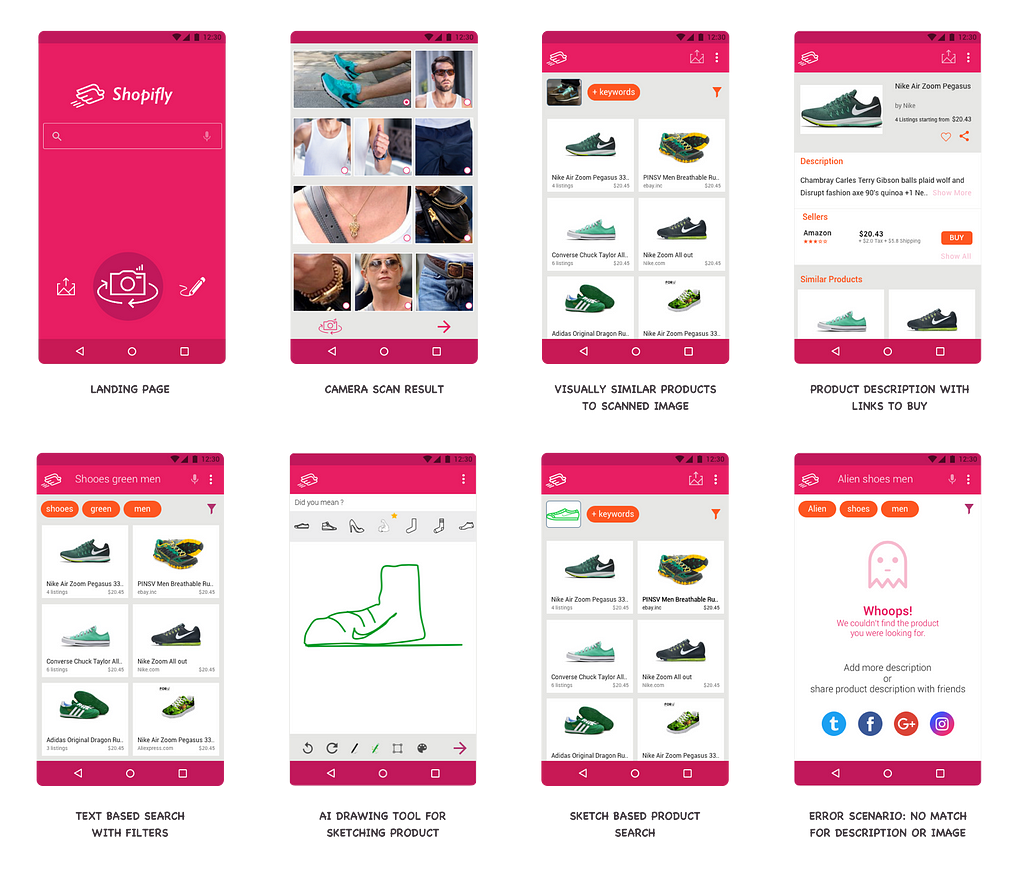

Scenario 1: (Camera Search) Kristina the shy notices a girl with a beautiful pair of shoes while returning from the office through Times Square, New York. She opens the Shopifly app and presses the camera button. Within seconds, Shopifly captures pictures of her surroundings from all possible angles. Kristina does this without stopping or pointing the camera at the shoes. After selecting the captured image that shows the shoes, Kristina finds the matching pair in the search results.

User buys a product using camera scan feature.


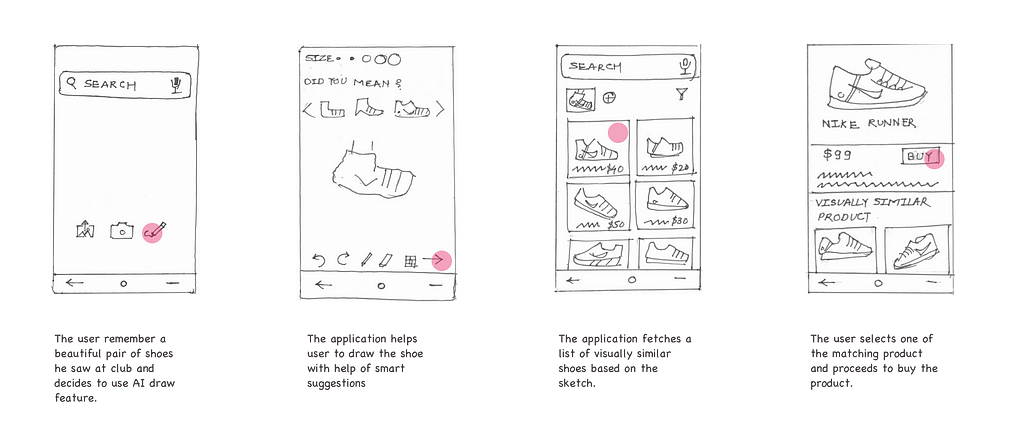

Scenario 2: (Sketch Search) Jack the artist notices a beautiful pair of shoes at the gym. He cannot take a picture, but he remembers the Nike logo and the style of the shoes. He opens the Shopifly app and starts doodling the shoe. The built-in AI helps Jack sketch the shoes. As he sketches, Shopifly suggests products based on the drawing. Using the sketch along with a description, Jack narrows the results down to the shoes he saw at the gym.

User buys a product using product sketch.


Scenario 3: (Text or Voice Search) Meridian the sociable notices a great pair of sports shoes during her morning walk. She talks to the person wearing them and finds out the brand and shoe type. She searches for the shoes in Shopifly. The search returns a number of similar shoes, but none of them look like what she saw on her walk. She decides to ask her friends on social media for help by sharing the shoe description.

User buys a product using product description.


Low-Fidelity Mock-ups


Color Palette


I wanted to evoke a friendly and playful feeling in users, so I chose pink as the dominant color and orange as the accent color. As a general rule of thumb, I used gray and white as neutral background colors. I used the Google Color Tool to define the overall color palette. It is a great tool for defining color palettes for Android applications.

Hi-Fidelity Mock-ups


The camera scan, sketch search, description search, image search and social media features were highlighted, with emphasis in roughly that order. The camera scan was given the highest emphasis because it satisfied the key need of identifying an accessory on impulse. The other features were more offline and came in handy later in the user journey.

The design challenge was a good learning experience. Designing with limited time and a technology constraint was especially thought-provoking. It required me to think creatively and quickly. I also learned about doing just enough research to get the project going. These conditions are very similar to corporate projects, where you are often limited by resources.

Thank you for your time!

Please visit www.sandeepjagtap.com to view my portfolio and resume.

Shopifly was originally published in uxdesign.cc on Medium.
