The Canny Rise: A UX Pattern

uxdesign.cc – User Experience Design — Medium | Chris Noessel

Given recent events, it looks like it’s time in the grand evolutionary arc of technology to establish this as a pattern.

…You are creating a Natural User Interface for an AI, and you have chosen to embody your technology anthropomorphically, that is, having human form or attributes, but you are unsure…

Just how human should it really be?

At Google’s I/O developer conference in 2018, Google CEO Sundar Pichai demonstrated the amazing Duplex feature of the Google Assistant, still in development. It was all over the internet in short order, but in case you didn’t see it: In the demo, he asks his Google Assistant to schedule a haircut for him, and “behind the scenes” (though we get to see it in action in this demo) the Assistant spins off an agent that calls the salon in a voice that is amazingly human-sounding.

Later in the demo he has the Assistant (with a male voice this time) contact a restaurant to make reservations. There’s a lot to discuss in these scenarios, but for this pattern we’re focusing on its human-sounding-ness. The voice was programmed with very human-like intonation, filler words like “um,” some upspeak, and a very human sense of idiosyncratic pacing. It’s uncanny. Which takes us, as such things often do, to Masahiro Mori.

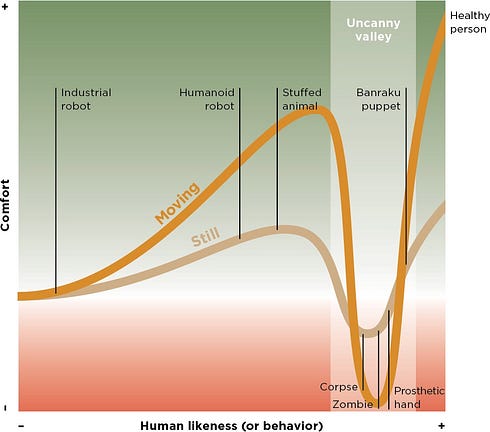

As part of his robotics work in the 1970s, Mori conducted research about human-ness in representation, asking subjects to rate their feelings about images with varying degrees of human-like appearance. At one end of the spectrum you have something like an industrial robot (not human at all), in the middle a humanoid robot (somewhat human), and at the other end a healthy person (you know, human).

I wrote about the Valley in Make It So: Interaction Design Lessons from Science Fiction (Rosenfeld Media, 2012), and redrew his famous chart, reprinted above.

What Mori found is what’s now called the uncanny valley. Generally, it shows that as representations of people become more real, they register more positively to observers, but only up to a point.

Moon (2009)

But if a representation gets too close, yet not fully human, it registers to the observer like a human with some weird problem, like being sick or hiding something, leaving the observer feeling uneasy or suspicious. (Mori reported that the effect was stronger in moving images than in still images, but this assertion from his research has proven difficult to reproduce.) Representations in the uncanny valley trigger unease in the observer, and to Mori’s point, should be avoided when designing anthropomorphic systems.

There are sound academic questions about the uncanny valley, even whether it actually exists, but for sure, the notion has passed into the public consciousness. On a gut check level it feels correct. Things in the uncanny valley feel wrong. We should avoid it.

Duplex, though, has passed through the valley. At least in the playback demo it sounds nearly indistinguishable from human. It’s not perfect (hear the slight disjoint each time it says “12 P.M.”) but if you weren’t primed to suspect anything, you’d be hard-pressed to identify it as a computer-generated voice. To the schedulers talking with it, Duplex passed the Turing Test. It quickly drew a lot of reactions, because it posed significant new UX risks.

The first problem is the sense of unease we feel on behalf of the salon and restaurant schedulers. Well-respected tech critics called it everything from “horrifying” to “morally wrong” to “disgusting.”

Google Assistant making calls pretending to be human not only without disclosing that it’s a bot, but adding “ummm” and “aaah” to deceive the human on the other end with the room cheering it… horrifying. Silicon Valley is ethically lost, rudderless and has not learned a thing.

— @zeynep

I am genuinely bothered and disturbed at how morally wrong it is for the Google Assistant voice to act like a human and deceive other humans on the other line of a phone call, using upspeek and other quirks of language. “Hi um, do you have anything available on uh May 3?”

Why the furor? There isn’t any “real” damage being done, right? The interaction is benign. If you swapped it with a human who said the exact same things in the exact same way, nothing would be worse in the world. No one was harmed.

In a deeper sense, it doesn’t matter that the schedulers didn’t know. What matters is the deception we feel on their behalf, and the unease we feel at the thought of being unsure ourselves. If the schedulers were told at the end of each call that they’d been talking to software, they might have felt that squick that we feel, too. Shouldn’t I have been told?

Functionally, it’s useful to know when you’re talking with software, because until we get to General AI, software will have different capabilities than humans. The pre-recorded Duplex demos work seamlessly, but you can bet this wasn’t the first release. There were probably hundreds or thousands of calls not shown where it didn’t go as well, where it missed some obvious word or failed to follow a turn in the conversation. Imagine discovering midway through a conversation that the person on the other end is a thing that can’t understand your accent, or the word you’re using, or the question you just asked. Not only would you feel deceived, but the brand behind the AI would be damaged. Not to mention public acceptance of this kind of tech in general.

Prohibitions against deception are a human universal, and technology does not get a pass just because it is impressive.

Electric light and motion pictures felt uncanny to those first experiencing them, too. Is it just a matter of adjusting to the new normal? That might be the case if all such interactions were benign. But of course they won’t be, and that’s the bigger concern.

What if you get a call from someone claiming to be your company’s IT department, and they need you to visit a URL and log in for a security upgrade? You’d be trapped in the weird circumstance of possibly offending someone by saying you’re not sure whether they’re real, and asking them to somehow prove it. If the stakes were low enough, I’ll bet most people would rather bypass that social awkwardness and give the caller what they want. Digital spoofing is a numbers game, and malefactors would just need to find the sweet spot where the stakes were high enough to provide some profit, and low enough that some percentage of people would fall for it, and then repeat the spoof as often as they could. And that’s just for an unfamiliar voice. What about the voice of a friend or family member?

What about your own voice? That wouldn’t be all bad. It might be a great boon to people who have suffered the loss of their own voice and would like to reclaim it. Services like Lyrebird, Voysis, and VivoText can take voice samples from people and have that voice “say” whatever you type.

But then when you remember that the phone service and voice-activated systems digitize your voice to make use of it, you can understand the scope of the potential problem. Could your voice samples or voice profile get Equifaxed?

The social hacking that could be accomplished is mind-boggling. For this reason, I expect that having human-sounding narrow AI will be illegal someday. The Duplex demo is a moment of cultural clarity, where it first dawned on us that we can do it, but with only a few exceptions, we shouldn’t.

We don’t want to be deceived by perfect computer-generated replications of human-ness. (At least not until we get to General AI, but that’s a whole other conversation.) And Mori’s research tells us to stay away from the valley that makes us “feel revulsion.”

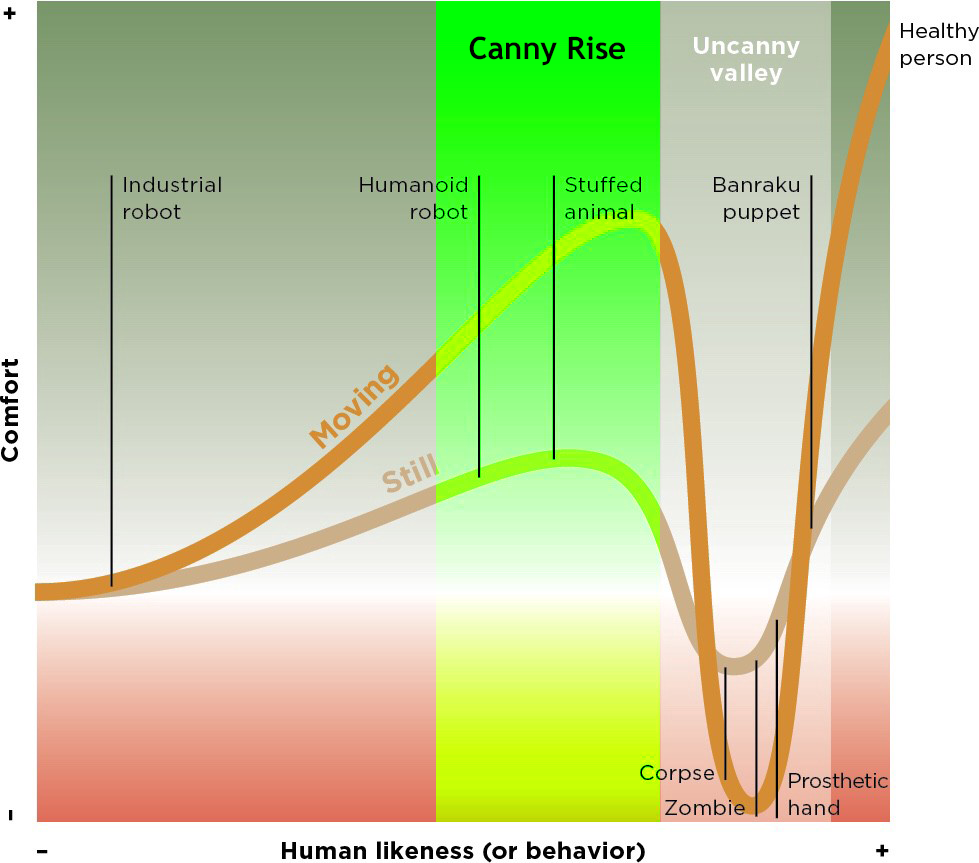

Picking from the best of what’s left, we have the positive slopes on either side of the valley. The slope right next to “healthy person” is steep and difficult to get right without causing feelings of deception, so it is a fraught choice. That leaves the slope to the left of the valley. It is gentler, so easier to get right, and a clearer signal of the nature of the representation. An observer of this kind of anthropomorphism should feel quite confident in distinguishing whether or not what they’re seeing is human.

To give this a name, let’s call it the canny rise. It’s canny because the human interacting with it knows that it’s a thing rather than a person. It’s a rise because it contrasts with the uncanny valley and the uncanny peak on the far right.

There are two ways to do this. The first is to simply say so. This disclosure allows the information to be read. The second is to design the voice with some deliberate non-humanness. This hot signal allows the information to be perceived. More on each of these follows.

If a NUI provider is trying to stay on the canny rise and be upfront about its nature, there are two ways to go about keeping the listener informed. The first is disclosure, or telling the user that they are dealing with a bot. So, instead of saying, “Hi, I’m calling to book a woman’s haircut for a client,” it would first say something like, “Hi, I’m a software agent calling to book a woman’s haircut for a client.” This gives the information explicitly, upfront.

n.b., Google has already committed to disclosure in the wake of backlash about the Duplex demo.

Disclosure, though, is not enough. A listener might be distracted at the moment of disclosure and miss it, or—given the perfectly human-sounding voice—decide privately that they misheard what was said, and continue on without clarifying, just to be polite. Surely, she means an app-enabled concierge service or something.

So additional effort should be made to design the interface so that it is unmistakably synthetic. This way, even if the listener missed or misunderstood the disclosure, there is an ongoing signal that reinforces the idea. As designer Ben Sauer puts it, make it “Humane, not human.”

Consider the Lyrebird examples. They do sound like the politicians they were trained on, but the voices don’t simply sound like that person speaking. I presume that’s deliberate to prevent any false-flag media or fake memes. The voices have a layer of synthesis, as if the speaker were talking behind a metal screen or thick cheesecloth.

The exact design of the hot signal is a matter of branding and aesthetics. For voice there are lots of delicious antecedents. Another example from pop culture is a track from Imogen Heap that relies heavily on a vocoder.
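As a rough illustration of what a hot signal could look like in practice, one long-standing way to make a voice sound deliberately synthetic is ring modulation: multiplying the audio by a low-frequency sine wave. The sketch below is only that, a sketch. It is not how Lyrebird or a vocoder works, and the function name `ring_modulate`, the 16 kHz sample rate, and the 30 Hz carrier are all assumptions chosen for illustration.

```python
import math


def ring_modulate(samples, sample_rate=16000, carrier_hz=30.0, depth=1.0):
    """Return a copy of `samples` multiplied by a sine carrier.

    samples     -- audio samples as floats in the range -1.0..1.0
    sample_rate -- samples per second (assumed value, adjust to your audio)
    carrier_hz  -- frequency of the modulating sine wave (assumed value)
    depth       -- 0.0 leaves the voice untouched, 1.0 is full modulation
    """
    out = []
    for n, s in enumerate(samples):
        carrier = math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
        # Blend the dry signal with the modulated one according to depth.
        out.append(s * ((1.0 - depth) + depth * carrier))
    return out
```

The `depth` parameter is the design knob here: a brand could tune it so the voice stays intelligible and pleasant while still being audibly not human.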

What’s important with both disclosure and a hot signal is to provide recourse. If the listener asks for an explanation, it should be available. If the listener has a negative response or asks expressly, the agent should offer some alternative, such as passing to a human agent, or even passing back to the “client.”
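To make the disclosure and recourse ideas concrete, here is a minimal sketch of how an agent’s dialogue logic might be organized. Everything in it is hypothetical: the `CallAgent` class, the cue phrases, and the simple substring matching are stand-ins for illustration, not a description of how Duplex or any real assistant works.

```python
from dataclasses import dataclass

# Hypothetical phrases suggesting the listener wants an explanation.
EXPLAIN_CUES = ("are you a robot", "are you a bot", "are you human", "am i talking to")
# Hypothetical phrases suggesting the listener wants out of the conversation.
HANDOFF_CUES = ("real person", "speak to a human", "talk to a human", "not comfortable")


@dataclass
class CallAgent:
    task: str                 # e.g. "book a woman's haircut"
    disclosed: bool = False   # set once the agent has said what it is

    def opening_line(self) -> str:
        """Disclose up front, before stating the request."""
        self.disclosed = True
        return f"Hi, I'm a software agent calling to {self.task} for a client."

    def respond(self, listener_said: str) -> str:
        """Offer an explanation or a handoff whenever the listener asks."""
        text = listener_said.lower()
        if any(cue in text for cue in HANDOFF_CUES):
            # Recourse: pass the conversation back to the "client".
            return "No problem. I'll ask my client to call you directly."
        if any(cue in text for cue in EXPLAIN_CUES):
            # Recourse: explain, and mention the alternative.
            return ("I'm an automated assistant, not a person. "
                    "I can have my client call you instead if you prefer.")
        return "CONTINUE"  # placeholder: carry on with the normal dialogue


agent = CallAgent("book a woman's haircut")
print(agent.opening_line())
print(agent.respond("Wait, are you a robot?"))
```

The handoff check runs before the explanation check so that a listener who is already uncomfortable gets the alternative immediately rather than another round of explanation.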

Patterns like this one are written for well-intentioned designers. But of course there will be malefactors. Every technology has its abusers. We can count on them to thwart any norms that are established and to perfect their deceit. There’s even a fine argument to be had that white-hat norms will increase public complacency and a false sense of certainty in users when they hear an “obvious human.” What steps we can take as users to defend ourselves against these is food for another post, but shouldn’t be dismissed as trivial.

In general, take care to avoid both perfect replication of human-ness in your interface and the uncanny valley, where it comes across as a human with something deeply wrong. Instead, provide your users with disclosure and design the interface with a hot signal, to help users confidently understand the nature of the thing they’re working with, and appropriately set expectations for its capabilities. Provide recourse to other means for users who are made uncomfortable.

***

Thanks to social media connections and real-world friends and family who helped me build out these ideas from a “hot take” to something more useful to all of us in the long term.

The Canny Rise: A UX Pattern was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.
