UX 101 for Virtual and Mixed Reality — Part 2: Working with the senses

UX Planet — Medium | Jacob Payne

This article follows UX 101 for Virtual and Mixed Reality — Part 1: Physicality

Designers working with virtual reality have to create experiences that work in a 3D environment. That environment can be entirely simulated (Virtual Reality) or overlaid onto our real one (Mixed Reality). As humans we navigate 3D environments constantly, and our senses allow us to do so expertly. Many aspects of VR rely on these same senses.

Creating intuitive virtual experiences requires considering the senses in the design process. Failing to do this can make the experience seem unnatural or even disorienting for the user, which in turn breaks the immersion and can put someone off VR altogether. In this article I’ll break down the sensory factors VR designers are currently working to overcome.

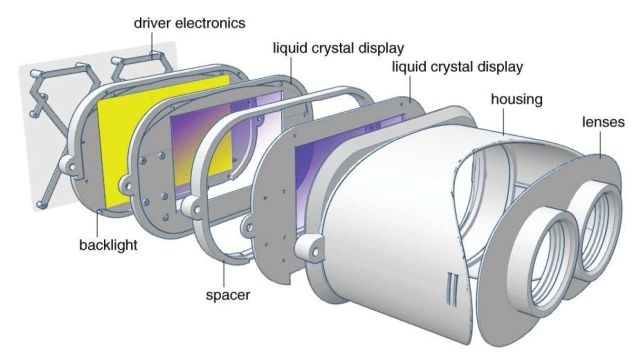

Let’s start with the most obvious sense, our vision. VR headsets use a mounted display screen viewed through a pair of lenses. The lenses change the focal distance of what is on screen. This allows your eyes to focus accordingly.

Schematic of a typical head mounted display unit. Courtesy of Stanford University.

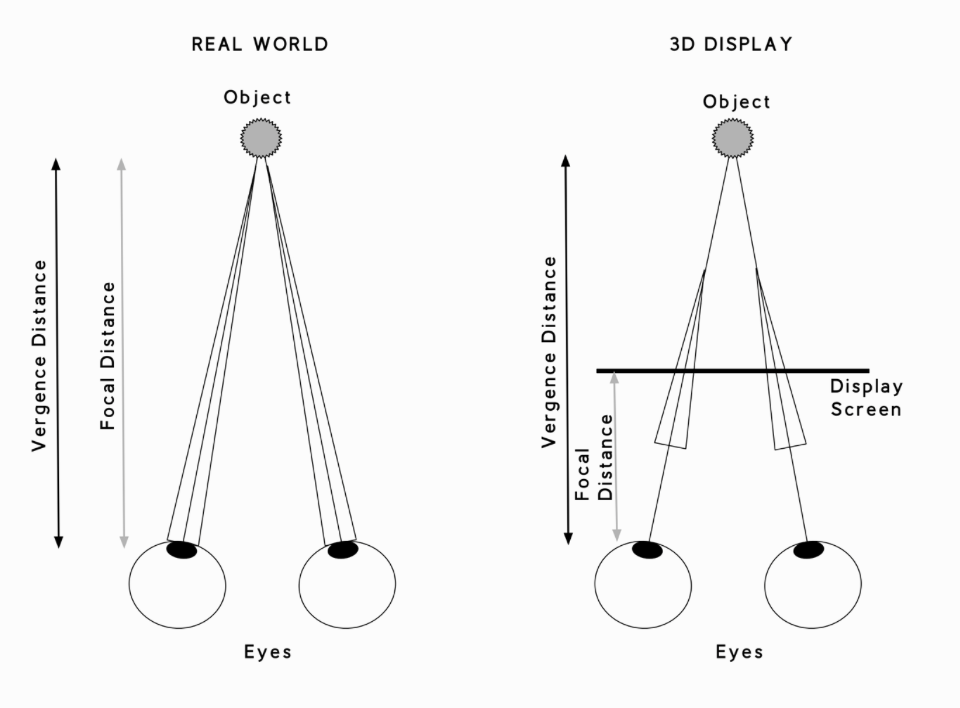

The unfortunate side-effect of this is that our eyes don’t adjust to the virtual distance but are actually still focusing on the nearby screen. This mismatch is known as vergence-accommodation conflict, and it can lead to eye strain. Some people are more prone to having problems with it than others, particularly those who have to wear glasses to help them focus in the first place.
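To get a rough feel for the size of this mismatch, here is a minimal Python sketch. It assumes a hypothetical headset whose optics hold focus at 2 m and an interpupillary distance of 63 mm; both numbers are illustrative assumptions, not figures from any particular device. It compares how far the eyes rotate inward to look at a virtual object with how far that object sits from the fixed focal plane, measured in diopters (1 / metres).

```python
import math

def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when fixating a point
    straight ahead at distance_m. ipd_m is an assumed eye separation."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))

def focus_mismatch_diopters(virtual_distance_m: float, focal_distance_m: float) -> float:
    """Gap between where the eyes converge (the virtual object) and where
    they must focus (the fixed focal plane), in diopters."""
    return abs(1 / virtual_distance_m - 1 / focal_distance_m)

if __name__ == "__main__":
    FOCAL_PLANE_M = 2.0  # assumed value for a hypothetical headset
    for d in (0.5, 1.0, 2.0, 10.0):
        print(
            f"object at {d} m: "
            f"vergence {vergence_angle_deg(d):.2f} deg, "
            f"mismatch {focus_mismatch_diopters(d, FOCAL_PLANE_M):.2f} D"
        )
```

Under these assumptions the mismatch is largest for objects placed close to the user, which is one reason to be careful with content that sits near the face for long periods.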

There’s not a huge amount you can do to design around this, although it has become less and less of a problem as headsets improve. It’s still something to keep in mind when designing for VR if your users will be wearing the headset for an extended period of time or have to change focus a lot.

Diagram from “Designing for Mixed Reality” by Kharis O’Connell

Mixed Reality doesn’t have issues with vergence-accommodation because users are looking at the real world. But this comes with unique problems of its own. Cameras and sensors in an MR headset are used to build an image of the real environment. Virtual elements are then placed in reality, sometimes creating conflicts with a user’s natural focus.

MR designers have to think about how to make a virtual element behave in reality. Does it sit on a flat surface? Against a wall? Is it floating? Can it be moved? Should it have shadows that match the light source? Even with all of these factors considered it’s difficult to implement perfectly, which sometimes makes it awkward to switch focus between a virtual element and reality.

Impressively, MR headsets like the HoloLens already do a pretty good job of handling all of this. But there’s still some way to go. Moving elements in MR often requires very accurate gestures from the user. And anyone who has used the HoloLens extensively will know there is an obvious problem with lighting. In environments with a lot of light, virtual objects can appear faint. I’ve seen companies use different opacities of film to cover the lenses for environments with heavy or varying light sources. It’s a shame designers have to resort to lo-fi hacks for such an advanced piece of technology. Instant lighting adjustment will hopefully be a requirement of future versions.

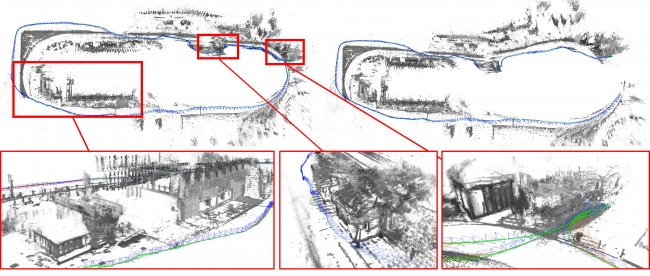

Computer Vision (CV for short) refers to the technology that enables computers to process visual information and understand 3D space. It has taken a lot of effort to get computer vision to the standard it is at today, and mixed reality is entirely dependent on it. There are different technological approaches to achieving accurate CV. Some can scan 3–4 metres around a user and others, like those used by aerial drones, can scan up to 300 metres.

Example of Computer Vision used to scan a large environment. Courtesy of the Technical University of Munich

Why is this relevant for designers? Knowing the limitations of the computer vision used by the headset will affect how you place virtual objects, particularly when it comes to visual lag. When a user is moving around, virtual objects have to appear contextually in their environment. The MR headset will need to scan that environment, often ahead of the user, to project and move objects in a natural way. The quality of the headset’s CV will have a direct impact on how laggy the MR experience turns out.

Leap Motion’s hand tracking enabled by Computer Vision

Computer vision is also what allowed Leap Motion to create their impressive hand tracking engine, which creates virtual representations of the user’s hands using just the headset.

Many VR experiences now use virtual hands to help users interact with their environment, but in most of the simulations you can try today it’s not possible to feel anything that you touch. By using haptic technology we can close this gap between the real and the simulated.

In short, haptic technology is a way of using touch to interact and get feedback. Chances are you’re already familiar with it. When you receive a text and your phone vibrates, that’s actually a simple form of haptic feedback. Any gamers who had the infamous Rumble Pak for the Nintendo 64 will remember the feeling of firing an automatic weapon in GoldenEye 007.

Nintendo’s Rumble Pak. Cost a small fortune in AAA batteries and destroyed the wrists of an entire generation.

Today in VR, haptic means a lot more than a notification or a one-off effect. When we reach out to touch a virtual object and feel nothing in our hands, the virtual remains just that: virtual. We are so used to using our sense of touch to interact with real objects that once we feel nothing from one in VR, we have to rely entirely on our vision to know how to interact with it.

“As a visually-oriented species, we usually don’t stop to think how incredible our sense of touch really is. With our hands we can determine hardness, geometry, temperature, texture and weight only by handling something.”

— Virsabi, Copenhagen based VR startup

Many engineers are working on haptic devices to recreate these aspects of touch in VR. One of the most advanced examples is Dexmo, an electronic exoskeleton that can apply force, pressure and resistance to the user’s hands and fingers.

It looks like quite a big piece of hardware, because it is. For haptic feedback to come into mainstream use it will need to be much less intensive than a pair of robot gloves. We will no doubt see more lightweight haptic wearables in future, or maybe even no wearable tech at all. Ultrahaptics is a company using sound waves to create sensory touch feedback. Interestingly, the applications of this aren’t unique to VR. With their technology, haptic UI controls in the air can be used anywhere in the real world.

One pitfall a designer can easily fall into when designing for VR is working to an “ideal” user. We might assume they have perfect balance, are of average height, have perfect vision that doesn’t require thick glasses, and so on. Generally these things are easy enough to overcome, but there is one unique aspect in VR which needs a bit more thought: a user’s proprioception.

Proprioception is our physical sense of self in space. It’s what gives us a sense of our body without having to look at it, allowing us to instinctively understand where our limbs are. Think about how you can touch your nose with your eyes closed. Proprioception helps us do many things but some of us are better at it than others.

Google’s Tilt Brush allows users to draw in 3D

Timoni West highlights a good example when describing Google’s Tilt Brush, a VR tool for drawing in 3D — “People don’t have a perfect sense of their physical self, and VR amplifies this. It’s very hard to draw a straight line in 2D, but impossible in 3D; knowing where your arm really ends is also difficult.”

Leap Motion hand tracking perfectly in sync with a user.

When VR uses virtual hands users can work with their natural sense of proprioception, making it far more intuitive. Of course if the virtual representation of where a user’s hands are is out of sync then this becomes disorientating. In short, heavy reliance on a user’s proprioception might make for a challenging virtual experience.

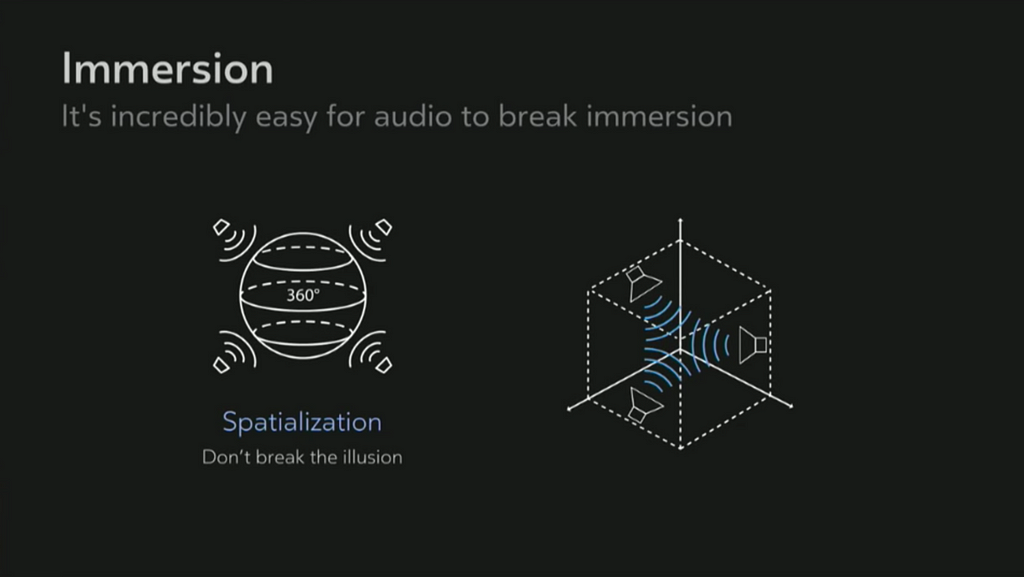

Sound design for VR is a whole new field in itself, using approaches that are new to even expert sound designers. I think the main thing to understand from a UX or UI designer’s perspective is that sound is vital to an immersive VR experience and useful for an effective mixed reality experience.

In VR sound is used to simulate an entirely new environment. Historically, for music, movies and video games, audio has been mixed without considering spatiality. For VR, sound designers have to create “3D audio” or holophonic sound. This is where the audio has been mixed to recreate the way sounds come to you from different directions and distances.

Think about how things sound different to your ears when they come from behind, or above, as opposed to in front of you. Then think about sounds that are further away or closer to you. What about sound from something that is moving? This is quite a challenge when the user is only wearing a regular set of headphones with two speakers in a fixed position. Audio in VR needs to behave naturally so as not to break the immersion.
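As a very rough illustration of the two simplest ingredients, direction and distance, here is a minimal Python sketch that turns a sound source’s position into left and right headphone gains. It is a simplification under stated assumptions: everything sits on a flat 2D plane, loudness falls off with inverse distance, and direction is handled with a constant-power stereo pan. The function name `stereo_gains` and the parameter `ref_distance` are made up for this example; real VR audio engines rely on much richer techniques, such as head-related transfer functions.

```python
import math

def stereo_gains(listener_xy, facing_rad, source_xy, ref_distance=1.0):
    """Return (left_gain, right_gain) for a source on a 2D plane.

    facing_rad is the direction the listener faces, measured
    counterclockwise from the +x axis. ref_distance is the assumed
    distance at which the source plays at full volume.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]

    # Inverse-distance rolloff, clamped so very close sources don't blow up.
    distance = max(math.hypot(dx, dy), ref_distance)
    attenuation = ref_distance / distance

    # Angle of the source relative to where the listener is facing.
    # Positive azimuth means the source is to the listener's left.
    azimuth = math.atan2(dy, dx) - facing_rad

    # Map to a pan position: -1 is hard left, +1 is hard right.
    pan = -math.sin(azimuth)

    # Constant-power pan keeps perceived loudness steady across the sweep.
    theta = (pan + 1) * math.pi / 4
    return attenuation * math.cos(theta), attenuation * math.sin(theta)

if __name__ == "__main__":
    facing_up = math.pi / 2  # listener at the origin, facing along +y
    print(stereo_gains((0, 0), facing_up, (1, 0)))   # source to the right
    print(stereo_gains((0, 0), facing_up, (0, 2)))   # source in front, 2 m away
    print(stereo_gains((0, 0), facing_up, (0, -2)))  # source behind, 2 m away
```

Notice that the source in front and the source behind produce essentially the same gains. A simple pan cannot tell front from back, which is exactly the kind of gap that proper 3D audio has to fill.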

A slide from Tom Smurdon’s Oculus talk – 3D Audio: Designing Sounds for VR. Check it out for a detailed insight into how sound design for VR works.

In mixed reality, audio competes with the sounds of the real world. The kind of sounds you use will be dependent on the purpose of the interaction. If you were designing virtual UI to help with tasks in the real world, you would likely take a similar approach to sound as you would for a flat-screen app, using audio cues that sync with gestures. The Clear App is a great example of this.

Beyond that, remember to think three-dimensionally. Could you use sounds to indicate elements outside of the user’s field of view? If a user interacts with something in one place does it create sound somewhere else?
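One way to prototype the first of those questions is to check whether an element falls outside the user’s horizontal field of view and, if so, which side an audio cue should come from. The Python sketch below is a minimal version of that idea. It assumes compass-style bearings in degrees (increasing clockwise) and a 90 degree horizontal field of view; both the `offscreen_cue` helper and the default value are hypothetical, so swap in whatever your headset actually provides.

```python
def offscreen_cue(facing_deg, target_bearing_deg, horizontal_fov_deg=90.0):
    """Return None if the target is inside the field of view,
    otherwise "left" or "right" to say where a sound cue should come from.

    Bearings are compass-style degrees that increase clockwise.
    horizontal_fov_deg is an assumed value, not a real headset spec.
    """
    # Smallest signed angle from the facing direction to the target,
    # in the range [-180, 180). Positive means clockwise, i.e. to the right.
    delta = (target_bearing_deg - facing_deg + 180.0) % 360.0 - 180.0

    if abs(delta) <= horizontal_fov_deg / 2:
        return None  # visible, no audio hint needed
    return "right" if delta > 0 else "left"

if __name__ == "__main__":
    for bearing in (30, 100, 270):
        print(bearing, offscreen_cue(0, bearing))
```

The wrap-around arithmetic matters here: a user facing 350 degrees with a target at 10 degrees is only 20 degrees off, so the target counts as visible rather than far to the left.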

As stated at the beginning, the aim of understanding how to work with the senses is to create natural, and therefore intuitive, experiences. From this article it might feel like an overwhelming list of hurdles but it’s easier to tackle than you think. The truth is that once you start making stuff in VR it’s very much a trial and error process. The VR design process requires testing almost immediately. And as you test and play around, the sensory gaps that need filling will reveal themselves. Knowing the above should just help you spot them sooner.

The next articles in this series will cover different types of input and how to make UI work in three dimensions.

This article was built on work by people much smarter than myself. I’ve linked to their work throughout but if you want to learn more check out the following:

UX 101 for Virtual and Mixed Reality — Part 2: Working with the senses was originally published in UX Planet on Medium, where people are continuing the conversation by highlighting and responding to this story.
