Build Design Systems With Penpot Components

Penpot's new component system for building scalable design systems, emphasizing designer-developer collaboration.

This article from Hazy Research highlights how much compute Artificial Intelligence (AI) systems require and discusses recent efforts to reduce that demand by using hardware more efficiently.

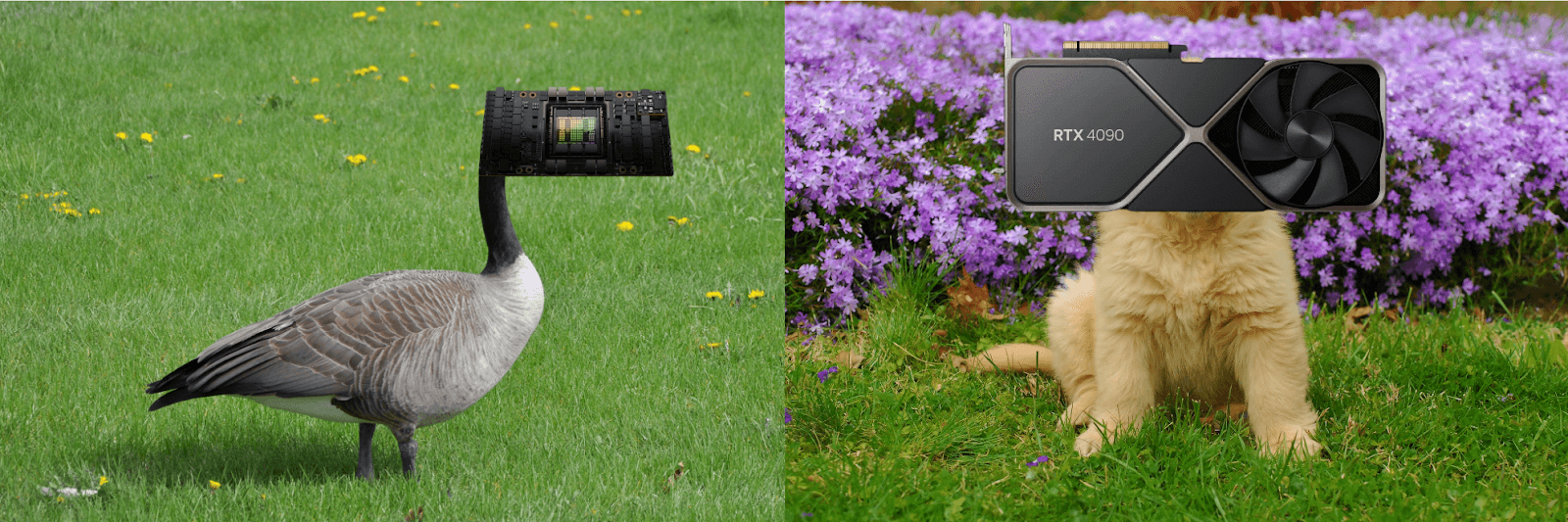

NVIDIA's new H100 GPU has immense compute power, but reaching its full performance requires carefully managing hardware resources such as tensor cores and shared memory, along with concerns like address generation and occupancy.
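To make the occupancy point concrete, here is a minimal Python sketch of how registers and shared memory can limit the number of thread blocks resident on one streaming multiprocessor (SM). It is not taken from the Hazy Research article: the `SMLimits` class, the `estimate_occupancy` function, and the default per-SM limits are illustrative assumptions. The model also ignores hardware allocation granularity, so treat its results as rough estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SMLimits:
    """Per-SM resource limits (assumed, illustrative values; check your GPU's docs)."""
    max_warps: int = 64                  # resident warps per SM
    max_blocks: int = 32                 # resident thread blocks per SM
    registers: int = 65536               # 32-bit registers per SM
    shared_mem_bytes: int = 228 * 1024   # shared memory per SM
    warp_size: int = 32                  # threads per warp


def estimate_occupancy(threads_per_block: int,
                       regs_per_thread: int,
                       smem_per_block: int,
                       limits: SMLimits = SMLimits()) -> float:
    """Return the fraction of the SM's warp slots a kernel could keep resident.

    Simplified model: each resource (warp slots, registers, shared memory)
    caps the number of resident blocks, and the tightest cap wins.
    """
    # Round the block size up to a whole number of warps.
    warps_per_block = -(-threads_per_block // limits.warp_size)

    by_warps = limits.max_warps // warps_per_block

    regs_per_block = regs_per_thread * warps_per_block * limits.warp_size
    by_regs = limits.registers // regs_per_block if regs_per_block else limits.max_blocks

    by_smem = limits.shared_mem_bytes // smem_per_block if smem_per_block else limits.max_blocks

    resident_blocks = min(limits.max_blocks, by_warps, by_regs, by_smem)
    return resident_blocks * warps_per_block / limits.max_warps


if __name__ == "__main__":
    # Light kernel: no resource is a bottleneck under the assumed limits.
    print(estimate_occupancy(256, 32, 0))
    # Register-heavy kernel: registers cap the number of resident blocks.
    print(estimate_occupancy(256, 128, 0))
    # Shared-memory-heavy kernel: shared memory caps the number of resident blocks.
    print(estimate_occupancy(256, 32, 100 * 1024))
```

Under this toy model, raising per-thread register use or per-block shared memory reduces the number of warps the SM can keep in flight, which is the kind of trade-off the summary alludes to.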

ThunderKittens, an embedded DSL developed by Hazy Research, is introduced as a tool to accelerate the creation of high-speed kernels for AI applications.

The authors argue for reorienting AI models and system design around the constraints and capabilities of modern accelerator hardware.

AI-driven updates, curated by humans and hand-edited for the Prototypr community