
Ever wondered how tools like GitHub Copilot work? Generative AI, used for tasks such as code, text, and image generation, often looks like a mysterious black box to users. This article explains how such systems work, focusing on AI code completion in Cody, a code AI assistant.

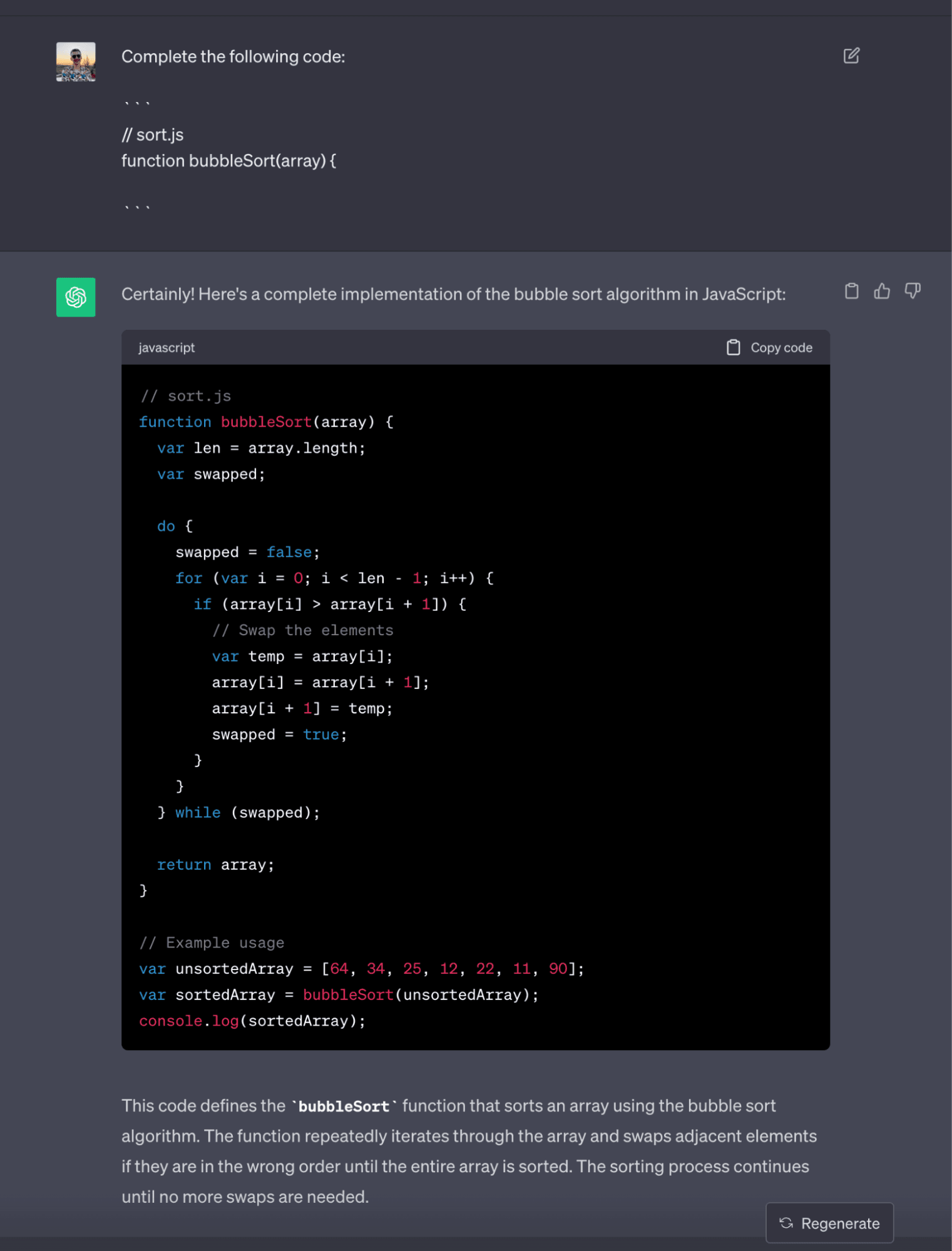

Code completion uses an LLM (large language model) to suggest code based on what is already in the editor.

Cody, Sourcegraph's AI assistant, produces code completions in four steps: Planning, Retrieval, Generation, and Post-processing.
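The four steps can be pictured as a small pipeline. This is a minimal sketch, not Cody's implementation: the function names (`plan`, `retrieve`, `generate`, `post_process`, `complete`), the identifier-overlap ranking, and the `llm` callable standing in for a real model are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, List

IDENT = re.compile(r"[A-Za-z_]\w*")

@dataclass
class Plan:
    prefix: str      # text before the cursor
    suffix: str      # text after the cursor
    multiline: bool  # whether a multi-line completion is allowed

def plan(document: str, cursor: int) -> Plan:
    """Planning: split at the cursor and pick single- or multi-line mode."""
    prefix, suffix = document[:cursor], document[cursor:]
    # Hypothetical rule: allow a block right after a block opener.
    return Plan(prefix, suffix, multiline=prefix.rstrip().endswith(("{", ":")))

def retrieve(p: Plan, other_files: List[str], k: int = 2) -> List[str]:
    """Retrieval: rank other open files by identifiers shared with the prefix."""
    wanted = set(IDENT.findall(p.prefix))
    ranked = sorted(other_files,
                    key=lambda f: len(wanted & set(IDENT.findall(f))),
                    reverse=True)
    return ranked[:k]

def generate(p: Plan, context: List[str], llm: Callable[[str], str]) -> str:
    """Generation: put the retrieved context before the prefix and call the model."""
    prompt = "\n\n".join(context + [p.prefix])
    return llm(prompt)

def post_process(p: Plan, raw: str) -> str:
    """Post-processing: trim whitespace and keep one line unless in multi-line mode."""
    text = raw.rstrip()
    return text if p.multiline else text.split("\n", 1)[0]

def complete(document: str, cursor: int, other_files: List[str],
             llm: Callable[[str], str]) -> str:
    """Run the four stages in order."""
    p = plan(document, cursor)
    return post_process(p, generate(p, retrieve(p, other_files), llm))

fake_llm = lambda prompt: "a + b\nprint('unused')\n"  # stand-in for a real model
doc = "def add(a, b):\n    return "
print(complete(doc, len(doc), [], fake_llm))  # single-line mode, prints: a + b
```

Keeping each stage a separate function makes it easy to swap in a better retriever or a different model without touching the rest of the pipeline.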

Cody uses techniques such as syntactic analysis, prompt engineering, and LLM selection to improve completion quality and raise the acceptance rate.
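To illustrate how syntactic cues can steer a completion, the sketch below decides whether to ask the model for a whole block or a single line. It is a hypothetical heuristic: `should_request_multiline` and its rules are assumptions, and `open_brackets` is a toy stand-in for a real parser (for example, one built with a parsing library such as tree-sitter), since it does not skip strings or comments.

```python
from typing import Dict, List

OPENERS: Dict[str, str] = {"(": ")", "[": "]", "{": "}"}
CLOSERS: Dict[str, str] = {close: open_ for open_, close in OPENERS.items()}

def open_brackets(prefix: str) -> List[str]:
    """Stack of brackets still unclosed at the end of `prefix`.

    Toy scanner: unlike a real parser it does not skip strings or comments.
    """
    stack: List[str] = []
    for ch in prefix:
        if ch in OPENERS:
            stack.append(ch)
        elif ch in CLOSERS and stack and stack[-1] == CLOSERS[ch]:
            stack.pop()
    return stack

def should_request_multiline(prefix: str, suffix: str) -> bool:
    """Hypothetical rule for asking the model for a block instead of one line."""
    # Text after the cursor on the same line means we are mid-line: one line fits.
    if suffix.split("\n", 1)[0].strip():
        return False
    stripped = prefix.rstrip()
    if stripped.endswith(":"):
        return True  # Python-style block header such as `def f(x):`
    # Inside a still-open `{` block, either right after `{` or on a blank line.
    stack = open_brackets(prefix)
    current_line = prefix.rsplit("\n", 1)[-1]
    return bool(stack) and stack[-1] == "{" and (
        stripped.endswith("{") or not current_line.strip()
    )
```

For instance, a cursor on the empty line after `function f() {` would trigger a multi-line request, while a cursor inside `foo(|)` would not.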

Cody has achieved a completion acceptance rate as high as 30% among users.

In its minimal form, a code autocomplete request takes the current code inside the editor and asks an LLM to complete it.
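A minimal sketch of such a request, assuming a model trained for fill-in-the-middle (FIM) completion, sends the code before and after the cursor. The StarCoder-style sentinel tokens, the `max_chars` budget, and the `llm` callable are assumptions; other models expect different prompt formats.

```python
from typing import Callable

# StarCoder-style fill-in-the-middle sentinel tokens (assumed; other models differ).
FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"

def build_prompt(document: str, cursor: int, max_chars: int = 2000) -> str:
    """Wrap the code around the cursor in a fill-in-the-middle prompt."""
    # Keep only the text nearest the cursor so the prompt fits the context window.
    prefix = document[max(0, cursor - max_chars):cursor]
    suffix = document[cursor:cursor + max_chars]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"

def autocomplete(document: str, cursor: int, llm: Callable[[str], str]) -> str:
    """Send the prompt to a model (any str -> str callable) and return its answer."""
    return llm(build_prompt(document, cursor))
```

With `doc = "def square(x):\n    return \n"` and the cursor right after `return `, the model is asked to fill the gap between `return ` and the trailing newline.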

Context, together with the processing steps around the model call, is crucial to building an efficient code AI system.
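One simple way to gather context, shown here as an assumption rather than as Cody's documented method, is to compare the lines just above the cursor with fixed-size windows of other open files using Jaccard similarity (size of the token intersection divided by size of the union) and keep the closest matches. The function names and the `window` and `k` parameters are hypothetical.

```python
import re
from typing import Dict, List, Set, Tuple

IDENT = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")

def tokens(text: str) -> Set[str]:
    """Identifier-like tokens, used as a cheap bag of words."""
    return set(IDENT.findall(text))

def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection over size of the union (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def best_snippets(prefix: str, files: Dict[str, str],
                  window: int = 10, k: int = 3) -> List[Tuple[float, str, str]]:
    """Return the k (score, path, snippet) windows most similar to the
    last `window` lines before the cursor."""
    query = tokens("\n".join(prefix.splitlines()[-window:]))
    scored: List[Tuple[float, str, str]] = []
    for path, text in files.items():
        lines = text.splitlines()
        # Slide a fixed-size window over the file; short files yield one window.
        for start in range(max(1, len(lines) - window + 1)):
            snippet = "\n".join(lines[start:start + window])
            scored.append((jaccard(query, tokens(snippet)), path, snippet))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]
```

The top snippets would then be placed in the prompt ahead of the code being completed. Token-set similarity is cheap enough to run on every keystroke, which matters more here than precision.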

Building a code AI system that serves many use cases, programming languages, and workflows while keeping developers satisfied is a complex undertaking.

Building a useful code AI assistant like Cody takes a lot of work. Sourcegraph had to find relevant code examples and design good prompts for the AI model. The team also experimented with different AI models and improved the suggestions through post-processing. Continuous updates backed by extensive testing are needed to build an assistant that developers actually accept and use naturally while coding.
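Post-processing can be illustrated with two simple filters: cut a multi-line completion off once the model dedents out of the block it was asked to fill, and discard a suggestion that merely repeats the next line already in the file. These rules and the `post_process` helper are hypothetical examples of the technique, not Sourcegraph's actual filters.

```python
from typing import List, Optional

def indent_of(line: str) -> int:
    """Number of leading spaces/tabs on a line."""
    return len(line) - len(line.lstrip(" \t"))

def truncate_to_block(completion: str, block_indent: int) -> str:
    """Keep lines until one (after the first) dedents to the header's level.

    `block_indent` is the indentation of the line that opened the block,
    e.g. the `def` line; body lines should be indented further.
    """
    kept: List[str] = []
    for i, line in enumerate(completion.split("\n")):
        # The first line continues the cursor's line, so its indent is not meaningful.
        if i > 0 and line.strip() and indent_of(line) <= block_indent:
            break  # the model has started writing code outside the block
        kept.append(line)
    return "\n".join(kept).rstrip()

def post_process(completion: str, block_indent: int, suffix: str) -> Optional[str]:
    """Trim a raw completion; return None when nothing useful is left."""
    text = truncate_to_block(completion, block_indent)
    next_line = suffix.lstrip("\n").split("\n", 1)[0].strip()
    if not text.strip() or text.strip() == next_line:
        return None  # empty, or a duplicate of the code right after the cursor
    return text
```

Returning `None` lets the editor show nothing at all, which is usually better than a suggestion the developer will reject.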

AI-driven updates, curated by humans and hand-edited for the Prototypr community